-

Notifications

You must be signed in to change notification settings - Fork 10.3k

Data Protection Refresh causing site hang #30752

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Comments

|

You're using the AzureExtensions package? You'll need to post in their repo I'm afraid, we can't transfer issues over |

|

This issue has been resolved and has not had any activity for 1 day. It will be closed for housekeeping purposes. See our Issue Management Policies for more information. |

|

This looks like a thread pool starvation caused by data protection taking a lock on the request thread to wait for the refresh to complete. Blocked request threads starve threadpool and continuations for blob requests can not run. cc @davidfowl |

|

the gift that keeps on giving 😢 |

|

DataProtection needs asyncification. |

|

Maybe we can do something like configuration and fire a change token when new data is ready. I need to investigate this. I don't think it makes sense changing Protect and Unprotect to be async. |

|

What if we add a |

|

Some more background into our particular issue because it doesn't make any sense to me: We have this issue pop up here and there where 500 errors just spike on an instance for a while now, but usually auto-heal goes ahead and recycles the instance and we are fine. Not that I love that it happens at all, but we couldn't figure out the cause and the impact to customers was limited. Last week, we had an issue with 2 of our apps at different times just spike, and it didn't matter what we did, restart the app, stop and start it, ... we always had an instance that would just return 500 errors. Auto heal would just fire constantly so we had to turn it off. This reminded me of the early days of aspnet core where we had an issue with data protection starting under heavy load causing thread pool starvation. We had a work around to encrypt something before leaving the startup class so that it got initialized before requests actually started hitting the service, that worked but I noticed in one of the releases it was fixed and so we removed that work around. After opening a support case with azure support, they asked us to change auto-heal to get a memory dump when it heals instead of just restarting, we did and that is how I noticed that it was to do with the refresh code, the confusing part is that it would happen immediately after restart and hitting load. That made no sense and I was unable to repo it locally using load testing. I even downloaded parts of your code and replaced your services locally (this was never deployed) to change the refresh interval to every 1 minute instead of the hard coded 24 hours and put a delay in the blob request of 10 seconds but no luck. What we ended up doing, and this makes no sense at all, is putting our old work around back into the startup to encrypt a string. We deployed it, swapped, and the sites started running perfectly. This was last Friday, we have not had a single issue all week. I can grantee we didn't see a drop of load at this time, but you can clearly see the drop in server errors at 3:07PM when we deployed the change. As a side note, we did find that swapping the slots one day did fix the issue temporarily, but on this day we swapped 3 or 4 times on this site before making the code change with no resolution. It was only after we deployed the encryption on startup trick did it solve the issue. I know it makes no sense, especially since the refresh shouldn't happen at startup at all, and the stack trace is clearly showing it is coming from the refresh and not the initial startup. |

It actually makes all kinds of sense. When do run encrypt in a startup the key management operations that usually take request lock happen before the service receives any requests avoiding the thread pool starvation. Otherwise, as soon as the service starts it tries to load/rotate keys under load ending up with a bunch of requests taking up threads and not allowing the rotation/loading process to finish. |

|

@pakrym Why I say this makes no sense, is that at startup the hosted service does the initialization and from my tests, it appears to be working correctly. |

|

You are right. This should've mitigated the issue on startup. |

|

This issue has been resolved and has not had any activity for 1 day. It will be closed for housekeeping purposes. See our Issue Management Policies for more information. |

|

We've moved this issue to the Backlog milestone. This means that it is not going to be worked on for the coming release. We will reassess the backlog following the current release and consider this item at that time. To learn more about our issue management process and to have better expectation regarding different types of issues you can read our Triage Process. |

|

What if we add a TryRollKeyRingAsync() method that we unconditionally run as part of every request. This would make sure that during request all protect and unprotect calls won't make any async calls. This seems like an easy DoS, you want to minimize calls to roll keys as much as possible, we throttle them by design. Blocking on startup is also by design, there's no point in letting other requests run until the keyring is filled. The block is, of course, fine with the other providers, because they're all local resource hits. @pakrym maybe you fudge it by doing a protect inside your own initialization once it's all set? |

We would only roll/refresh when the key is close to expiry and the subsequent Protect/Uprotect call would've rolled it anyway.

Nope, we don't use protect inside the key provider. |

|

Our site is also hanging from this sometimes. We see the same call stack. We run Even with the

We will try a work-around as well, like this:

That sounds sensible. In times of key rotation, it seems like the new key will be available a good while before the previous one expires, anyway. So it sounds nice to get the new key ring ready in the background, instead of letting requests grab that lock😅 Cheers |

|

I'm going to close this issue. Please post additional feedback in #36157 so we can track it centrally and don't drop any. |

Describe the bug

Our site is hanging, sometimes unrecoverable during a refresh of the data protection provider. I was able to get a memory dump while it was occurring one time. I have been trying to load test locally and duplicate the issue without any luck. It seems that most of the times this happens our response time goes way up

our 500 count

and thread count

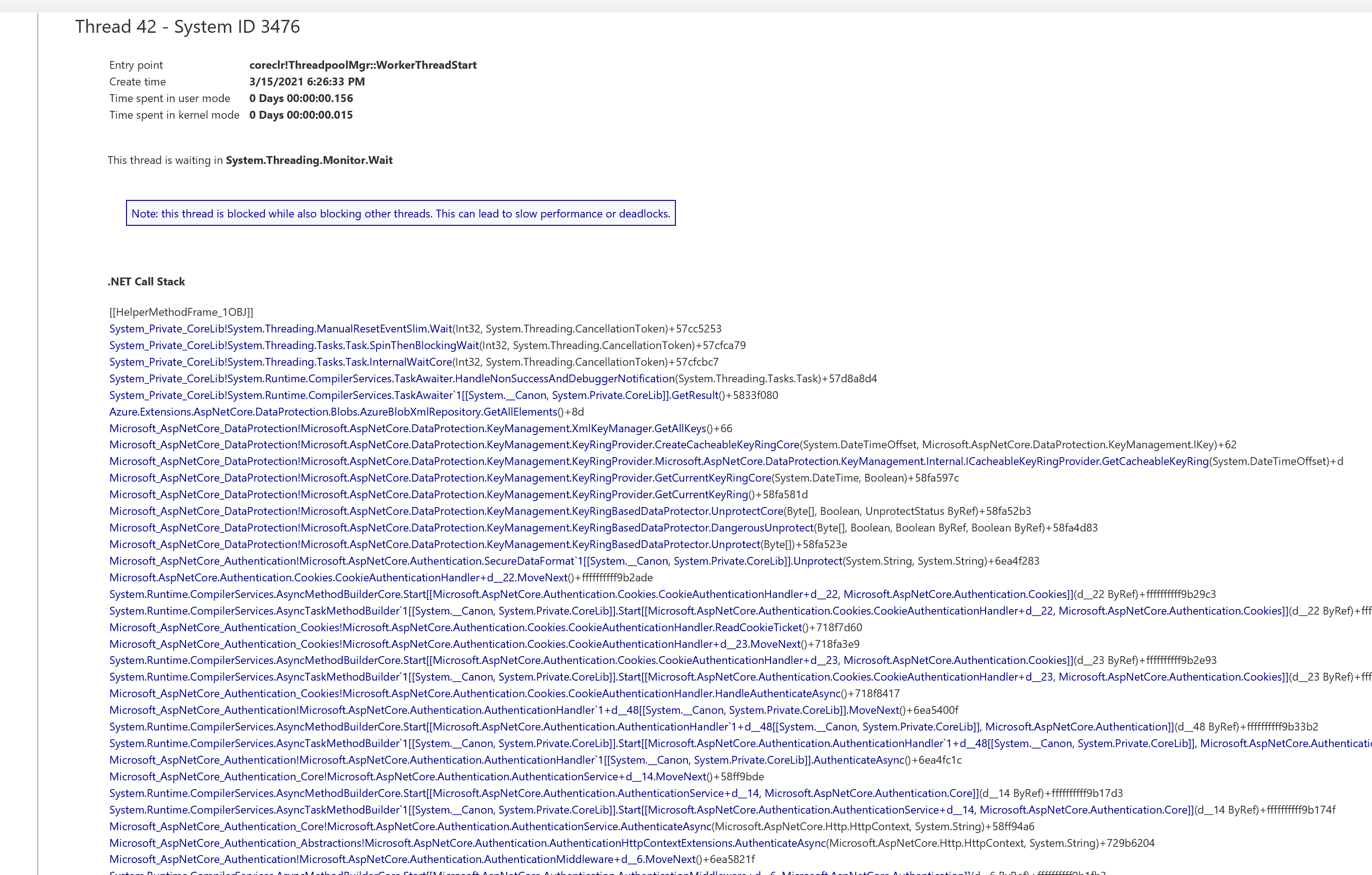

Here is a picture of the stack trace from the memory dump:

and one of the threads waiting for that one:

To Reproduce

Sorry, but I've not been able to repo this in a testing environment

Further technical details

.NET SDK (reflecting any global.json):

Version: 5.0.103

Commit: 72dec52dbd

Runtime Environment:

OS Name: Windows

OS Version: 10.0.14393

OS Platform: Windows

RID: win10-x86

Base Path: D:\Program Files (x86)\dotnet\sdk\5.0.103\

Host (useful for support):

Version: 5.0.3

Commit: c636bbdc8a

.NET SDKs installed:

1.1.14 [D:\Program Files (x86)\dotnet\sdk]

2.1.518 [D:\Program Files (x86)\dotnet\sdk]

2.1.521 [D:\Program Files (x86)\dotnet\sdk]

2.2.109 [D:\Program Files (x86)\dotnet\sdk]

3.1.111 [D:\Program Files (x86)\dotnet\sdk]

3.1.112 [D:\Program Files (x86)\dotnet\sdk]

5.0.103 [D:\Program Files (x86)\dotnet\sdk]

.NET runtimes installed:

Microsoft.AspNetCore.All 2.1.25 [D:\Program Files (x86)\dotnet\shared\Microsoft.AspNetCore.All]

Microsoft.AspNetCore.All 2.2.14 [D:\Program Files (x86)\dotnet\shared\Microsoft.AspNetCore.All]

Microsoft.AspNetCore.App 2.1.25 [D:\Program Files (x86)\dotnet\shared\Microsoft.AspNetCore.App]

Microsoft.AspNetCore.App 2.2.14 [D:\Program Files (x86)\dotnet\shared\Microsoft.AspNetCore.App]

Microsoft.AspNetCore.App 3.0.3 [D:\Program Files (x86)\dotnet\shared\Microsoft.AspNetCore.App]

Microsoft.AspNetCore.App 3.1.8 [D:\Program Files (x86)\dotnet\shared\Microsoft.AspNetCore.App]

Microsoft.AspNetCore.App 3.1.12 [D:\Program Files (x86)\dotnet\shared\Microsoft.AspNetCore.App]

Microsoft.AspNetCore.App 5.0.3 [D:\Program Files (x86)\dotnet\shared\Microsoft.AspNetCore.App]

Microsoft.NETCore.App 1.0.16 [D:\Program Files (x86)\dotnet\shared\Microsoft.NETCore.App]

Microsoft.NETCore.App 1.1.13 [D:\Program Files (x86)\dotnet\shared\Microsoft.NETCore.App]

Microsoft.NETCore.App 2.0.9 [D:\Program Files (x86)\dotnet\shared\Microsoft.NETCore.App]

Microsoft.NETCore.App 2.1.25 [D:\Program Files (x86)\dotnet\shared\Microsoft.NETCore.App]

Microsoft.NETCore.App 2.2.14 [D:\Program Files (x86)\dotnet\shared\Microsoft.NETCore.App]

Microsoft.NETCore.App 3.0.3 [D:\Program Files (x86)\dotnet\shared\Microsoft.NETCore.App]

Microsoft.NETCore.App 3.1.12 [D:\Program Files (x86)\dotnet\shared\Microsoft.NETCore.App]

Microsoft.NETCore.App 5.0.3 [D:\Program Files (x86)\dotnet\shared\Microsoft.NETCore.App]

The text was updated successfully, but these errors were encountered: