
PermutationFeatureImportance not working with AutoML API #5247


Comments

|

Hi, @jacobthamblett. I think I have a solution for your first attempt, although I'm not sure how to fix your second attempt. Still, if my suggestion for your first attempt works, it should be enough to fix your problem and close this issue. 😄

About your first attempt (using AutoML with PFI)

// Extract the predictor.

var lastTransformer = ((TransformerChain<ITransformer>)PFI_model).LastTransformer;

var linearPredictor = (ISingleFeaturePredictionTransformer<object>)lastTransformer;

// Compute the permutation metrics for the linear model using the

// normalized data.

var permutationMetrics = mlContext.MulticlassClassification

.PermutationFeatureImportance(linearPredictor, transformedData,

permutationCount: 30);

I think that should solve your issue.

(NOTE: In some rare cases you might not need to extract the LastTransformer, i.e. it would be enough with

Also, notice that you don't need to retrain your

var PFI_model = bestRun.Model; // No need to retrain the bestRun.Estimator.

However, you can train your

About your second attempt (without AutoML)

But just by looking at the stack trace, it looks like a problem with the

Since you tried this attempt only because your first attempt didn't work, I think it's OK not to look much into it, if my suggestion for the first attempt works for you 😄

|

Thanks @antoniovs1029. Extracting the already trained model was my initial hope; the example above was just following the documentation, which is perhaps another argument in support of your documentation issue. I'm not sure whether my case falls into the rare category you mentioned, but the above suggestion throws an InvalidCastException:

|

Judging by the exception message, it seems that AutoML is adding a

To do this, you'll need to do the following:

using System.Linq; // Add this to the file where you're trying to use PFI

....

// Cast your model into a TransformerChain:

TransformerChain<ITransformer> modelAsChain = (TransformerChain<ITransformer>)bestRun.Model;

// Convert your TransformerChain into an array of ITransformers

ITransformer[] transformersArray = modelAsChain.ToArray();

// Get the prediction transformer from the array

// I suspect it will be in the second-to-last position, so that's why I've used "transformersArray.Length - 2" as index

// But it might not be there, and you might need to use the debugger to see where in the Array your Prediction Transformer is

var predictionTransformer = transformersArray[transformersArray.Length - 2];

// Finally cast it to ISingleFeaturePredictionTransformer to use it for PFI

var linearPredictor = (ISingleFeaturePredictionTransformer<object>)predictionTransformer;

Please let us know if this works for you. If it doesn't, please share the model you're trying to use with PFI. Thanks!

|

I see. Sorry, I wasn't aware that AutoML could return this kind of model, with the prediction transformer hidden so deep inside the TransformerChain. So, the goal is still the same: to get the

using System.Linq; // Add this to the file where you're trying to use PFI

....

TransformerChain<ITransformer> modelAsChain = (TransformerChain<ITransformer>)bestRun.Model;

ITransformer[] transformersArray = modelAsChain.ToArray();

// The prediction transformer sits inside a TransformerChain nested inside the outer TransformerChain,

// as shown in your capture,

// so you can get the predictionTransformer like this:

var predictionTransformer = ((TransformerChain<ITransformer>)transformersArray[0]).LastTransformer;

// Finally cast it to ISingleFeaturePredictionTransformer to use it for PFI

var linearPredictor = (ISingleFeaturePredictionTransformer<object>)predictionTransformer;
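Since the position of the prediction transformer seems to vary between AutoML models, the lookup can also be written as a recursive search instead of a hardcoded index. The following is only a sketch: `ChainSearch`, `Flatten`, and `FindPredictor` are hypothetical names that are not part of ML.NET, and it assumes that every nested chain is a `TransformerChain<T>`, which exposes its inner transformers as `IEnumerable<ITransformer>`.

```csharp
using System.Collections.Generic;
using System.Linq;
using Microsoft.ML;

// Hypothetical helper, not part of ML.NET.
public static class ChainSearch
{
    // Depth-first walk that unwraps nested chains and yields only leaf transformers.
    public static IEnumerable<ITransformer> Flatten(ITransformer transformer)
    {
        // Assumption: anything enumerable as ITransformer is a (possibly nested) chain.
        if (transformer is IEnumerable<ITransformer> chain)
        {
            foreach (ITransformer inner in chain)
                foreach (ITransformer leaf in Flatten(inner))
                    yield return leaf;
        }
        else
        {
            yield return transformer;
        }
    }

    // Returns the last leaf usable with PFI, or null if the model contains none.
    public static ISingleFeaturePredictionTransformer<object> FindPredictor(ITransformer model)
    {
        return Flatten(model)
            .OfType<ISingleFeaturePredictionTransformer<object>>()
            .LastOrDefault();
    }
}
```

With this in place, `var linearPredictor = ChainSearch.FindPredictor(bestRun.Model);` would replace the manual indexing. A null result means no single-feature prediction transformer was found, in which case inspecting the model in the debugger is still the way to go.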

|

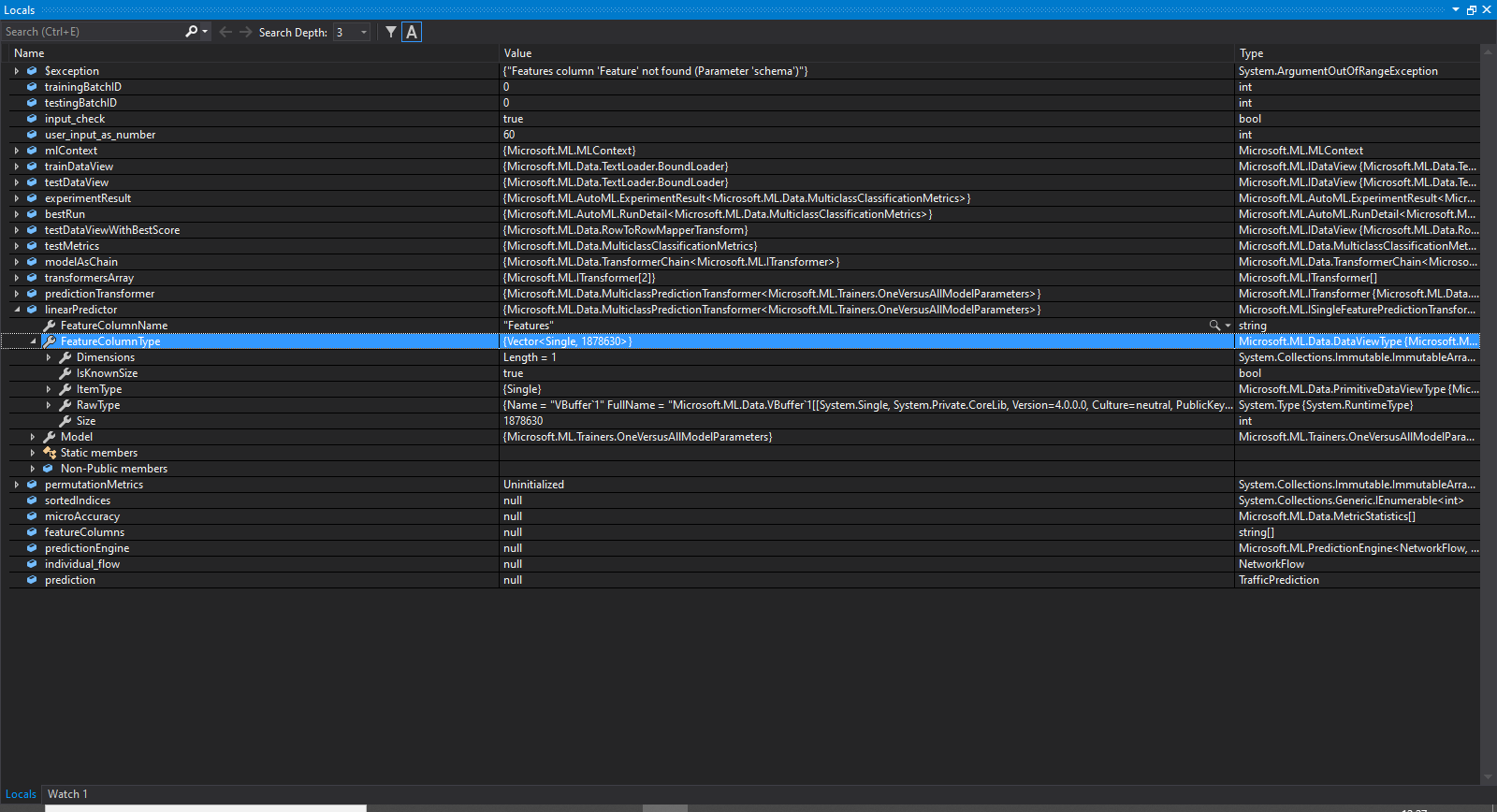

Thanks @antoniovs1029, that's great. It appears that your solution has extracted the linear prediction transformer from the transformer chain nested inside the array of transformers. However, still following the documentation on PFI for multiclass classification:

I could be wrong, but it sounds like the definition of the permutationMetrics variable with MulticlassClassification requires a single feature from within the linearPredictor, whereas the linearPredictor extracted from the AutoML bestRun model has a vector of features:

This is throwing the following error:

|

Hi, @jacobthamblett. Looking at the code you shared, the local variables, and the stack trace, I think the problem might be that you need to transform the

// Transform the dataset.

var transformedData = PFI_model.Transform(testDataView);

// Compute the permutation metrics for the linear model using the

// normalized data.

var permutationMetrics = mlContext.MulticlassClassification

.PermutationFeatureImportance(linearPredictor, transformedData,

permutationCount: 30);

This applies to multiclass classification transformers, and also to the other prediction transformers that use PFI. The error you got was because the "Features" column doesn't exist in your

|

Thank you @antoniovs1029, that all appears to be working now. The PFI code is now running using the AutoML BestRun model. It is taking a long time for the PFI metrics to be produced, however, but I guess that's normal?

|

Glad to hear that you've solved your issue trying to get PFI running! 😄 I will close this issue now. It's normal for PFI to take some time, as the algorithm needs to permute every column in the features and re-evaluate the trained model on the permuted data for each permutation (the model itself is not retrained). Seeing that you have many columns that you're using as features, I wouldn't be surprised if it takes a couple of hours, as it is a resource-intensive algorithm. On the other hand, you could explore lowering the

|

Thanks @antoniovs1029 for the suggestions around

The code compiles and runs, but seems to take forever to execute the following line:

So far, it has taken just over 24 hours to run 2 permutations with a limit of 10 examples, and it has still not finished executing. I know PFI is resource intensive and can take a while, but should it really take this long?

UPDATE: The PFI code has now finished executing. It took roughly 34 hours to complete 2 permutations using 10 examples (it may have taken less time, as I'm not sure exactly when it finished running overnight). Most of the results seem to be output; however, there's an out-of-bounds error accessing the featureColumns array in the final for loop:

This should hopefully be a simple fix; I may just have to wait another 34 hours to get to that point in execution again.

|

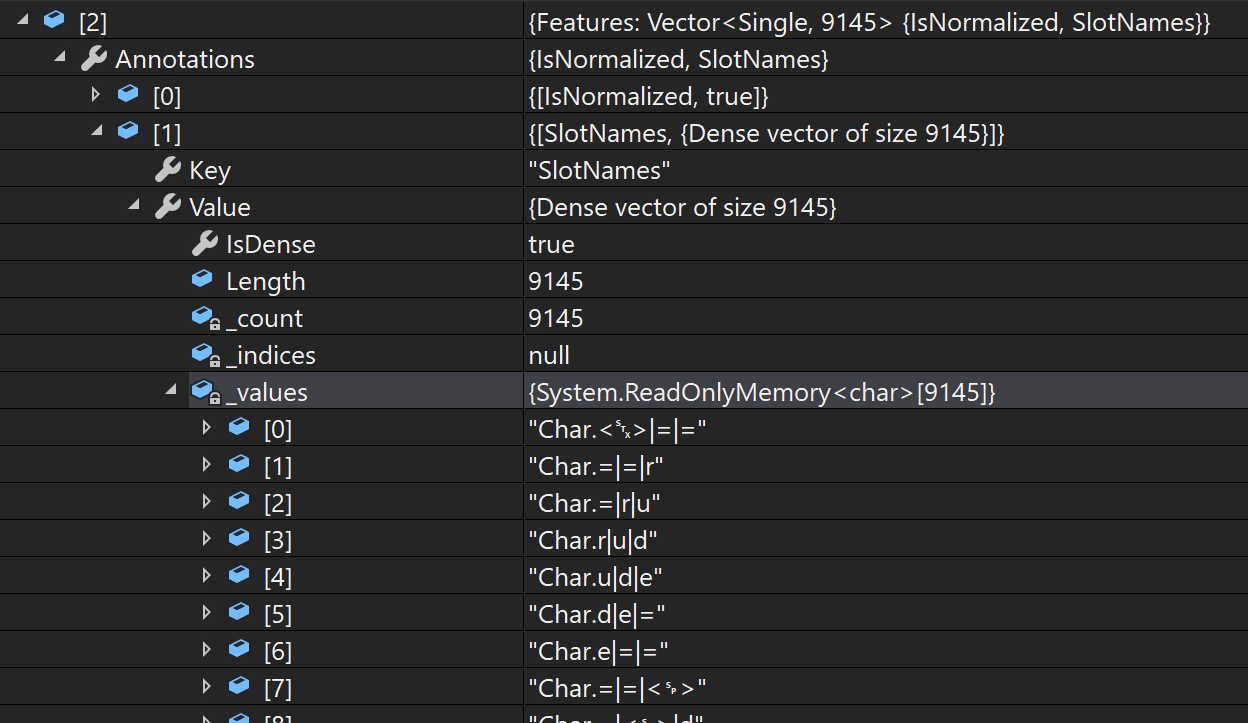

Hi, @jacobthamblett. Sorry to hear that running PFI is taking so long. I'd recommend opening a new issue about PFI taking so long, as that wouldn't be related to the problems you had with extracting the predictor from an AutoML model (which is the topic of this issue). This way others might be able to give their opinions if they've had a similar experience, and we can take a closer look at the problem.

If possible, on that other issue, please include the model you're trying to use with PFI. Simply save it to disk with:

mlContext.Model.Save(PFI_model, testDataView.Schema, @"C:\path\to\file\model.zip");

And share the model.zip with us. Describing the types of your columns in featureColumns would also be helpful. It would be even more helpful if you could provide a full repro of your scenario (including the code showing how you're loading data, and your parameters for the AutoML experiment), along with a sample dataset to actually run your code. This way we'll have more information to decide whether PFI taking so long is a problem with PFI or with something else. But simply sharing your model might be enough for us to start looking into the problem.

Now, I'm assuming you're using the same columns you showed us in your code (i.e. the var featureColumns = new string[]{...}), and I've counted 86 columns there. I've never personally worked with so many input columns and PFI, so I wouldn't know for sure... but taking 30+ hours to run PFI with only 2 permutations and 10 examples suggests that there's something wrong.

I've had a similar issue only once, and it was because my pipeline included a TextFeaturizingEstimator, which created a Features vector with thousands of columns, which then caused PFI to take a lot of time to run (I cancelled it after the first hour, when I realized the

Given the "out of bounds" error you're getting after PFI is completed, my guess is that your model is actually using more features than the ones you provided in your input dataset (i.e. that the model actually adds more columns into what is then used as the

A way to confirm that this is the problem is to take a "preview" of your model's output and inspect it (you can do this before calling PFI, so that you don't need to wait hours to confirm this):

var preview = PFI_model.Transform(testData).Preview();

Then inspect the

Depending on your model, you'll also be able to see a descriptive name of each column inside your

The capture tells me that Features[1] corresponds to the feature of the 3-gram "==r" as featurized by the

Notice that the sample found in the official docs is something of a toy example: typically a model will add many more features than the ones that were present in your input, and the correct way to associate names with the output of PFI isn't to hardcode the column names in a string array, but to retrieve them from the SlotNames annotation.

My immediate advice, if this turns out to be the problem, would be to remove any non-numerical column from your input columns (if there's a string column, then it's likely that AutoML is adding a

If this doesn't work, and/or you're still having issues with how much time PFI takes, then please open a new issue with the requested information. Thanks! 😄
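As a rough illustration of retrieving feature names from the SlotNames annotation rather than hardcoding them, here is a minimal sketch. `SlotNameSketch` and `GetFeatureNames` are hypothetical names, and the column name "Features" is an assumption about the model's output schema. The calls to `HasSlotNames` and `GetSlotNames` are the ML.NET schema extension methods from `Microsoft.ML.Data`.

```csharp
using System;
using System.Linq;
using Microsoft.ML;
using Microsoft.ML.Data;

// Hypothetical helper, not part of ML.NET.
public static class SlotNameSketch
{
    // Reads the per-slot names of the features vector from the transformed data.
    // Returns an empty array when the column carries no SlotNames annotation.
    public static string[] GetFeatureNames(IDataView transformedData, string featuresColumn = "Features")
    {
        DataViewSchema.Column column = transformedData.Schema[featuresColumn];
        if (!column.HasSlotNames())
            return Array.Empty<string>();

        VBuffer<ReadOnlyMemory<char>> slotNames = default;
        column.GetSlotNames(ref slotNames);

        // One name per slot, in the same order as the PFI results.
        return slotNames.DenseValues().Select(name => name.ToString()).ToArray();
    }
}
```

Calling `SlotNameSketch.GetFeatureNames(PFI_model.Transform(testDataView))` before running PFI would show how many slots the model really uses. If the returned array is much longer than featureColumns, that would be consistent with both the long run time and the out-of-bounds error.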

System information

Issue

I am using the AutoML API to generate an ML multiclass classification model from large network datasets stored in a CSV file. The model produced by the API provides accurate predictions, with reasonable results in the following metrics: MicroAccuracy, MacroAccuracy, LogLoss & LogLossReduction. However, trying to get metrics on what feature selection was implemented by the API is proving impossible.

Following all the directions and documentation on implementing the PermutationFeatureImportance method has had no success. It is possible to extract the pipeline from the AutoML BestRun model, and putting together the list of features in the custom class type it uses is not a problem either. However, there appears to be no LastTransformer attribute for the BestRun model produced by the API. According to the official documentation on how to execute the PFI method on a multiclass model, this is one of the main hurdles.

Attempting to follow the specific multiclass classification PFI documentation more exactly, by defining a new pipeline with a single multiclass classification algorithm, still throws an error. This is not ideal anyway, as the new pipeline definition with a single algorithm does not necessarily match the one used by the AutoML API's model, which is the model the PFI metrics are needed for.

Source code / logs

Example code following Multiclassification PFI Implementation from ML.Net Documentation, using pipeline extracted from AutoML bestRun Model:

Error produced:

Error CS1061 'ITransformer' does not contain a definition for 'LastTransformer' and no accessible extension method 'LastTransformer' accepting a first argument of type 'ITransformer' could be found (are you missing a using directive or an assembly reference?)

Example code also following Multiclassification PFI Implementation from ML.Net Documentation, using a newly created pipeline and single multiclassification algorithm:

Code builds but also fails at PFI_model definition:

System.ArgumentOutOfRangeException

HResult=0x80131502

Message=Schema mismatch for input column 'Features': expected vector or scalar of Single or Double, got Vector

Source=Microsoft.ML.Data

StackTrace:

at Microsoft.ML.Transforms.NormalizingEstimator.GetOutputSchema(SchemaShape inputSchema)

at Microsoft.ML.Data.EstimatorChain`1.GetOutputSchema(SchemaShape inputSchema)

at Microsoft.ML.Data.EstimatorChain`1.Fit(IDataView input)