
Inconsistent API for tensor and PIL image for CenterCrop #3297


Comments


P.S. Much of the needed functionality is similar to RandomCrop, which offers flexible padding, so it would be a good idea to consider bringing those options to CenterCrop: apart from the offset computation, the rest of the cropping would be the same. Applying RandomCrop to both a PIL image and a tensor image shows the same content (but at a different offset). Big HP fan :D, He-Who-Must-Not-Be-Named.
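The point that center and random cropping differ only in how the offset is chosen can be sketched in plain Python on a 2-D list of pixel rows. The helper names (`crop`, `center_offsets`, `random_offsets`) are hypothetical and not torchvision API, and this sketch assumes the crop already fits inside the image:

```python
import random


def crop(img, top, left, height, width):
    """Return the height x width window of a 2-D list whose top-left corner is (top, left)."""
    return [row[left:left + width] for row in img[top:top + height]]


def center_offsets(h, w, crop_h, crop_w):
    # Center the window; integer division puts an odd extra pixel at the bottom/right.
    return (h - crop_h) // 2, (w - crop_w) // 2


def random_offsets(h, w, crop_h, crop_w, rng=random):
    # Any valid top-left corner, chosen uniformly (randint includes both ends).
    return rng.randint(0, h - crop_h), rng.randint(0, w - crop_w)


img = [[r * 4 + c for c in range(4)] for r in range(4)]
top, left = center_offsets(4, 4, 2, 2)
print(crop(img, top, left, 2, 2))  # [[5, 6], [9, 10]]
top, left = random_offsets(4, 4, 2, 2)
print(crop(img, top, left, 2, 2))
```

Only the offset function changes between the two strategies; the slicing in `crop` is shared.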


One last thing: I can work on the PR for this. Let me know if that's alright with you.

That sounds great! Let's wait for the green light from a member.


@saurabheights Thanks for reporting and for making the issue reproducible. That's definitely a bug. We are happy to accept a PR that solves the problem. Concerning the proposal to extend the functionality and adjust the API, I think this should be discussed in a separate issue because we need to assess backward compatibility. @vfdev-5 Any thoughts on this?


Fun bug with PIL and tensors. I think it would make sense to raise an error saying that we cannot crop to a larger size.

```python
import numpy as np
import torch
import torchvision.transforms as transforms
import torchvision.transforms.functional as TF

# 100x100 uniform gray RGB image (uint8, so to_pil_image does not rescale the values)
pil_x = TF.to_pil_image(127 * torch.ones(3, 100, 100, dtype=torch.uint8))
print(transforms.CenterCrop(150)(pil_x).size)  # size of the cropped PIL image
print(np.asarray(pil_x).shape)  # shape of the (unchanged) input PIL image

tensor_x = torch.randn(3, 100, 100)
print(transforms.CenterCrop(150)(tensor_x).shape)
```

Output:

```
(150, 150)
(100, 100, 3)
torch.Size([3, 24, 24])
```

EDIT: My bad about the Pillow output size being 100x100. It is 150x150, and the output image is padded.
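The padded 150x150 PIL result is consistent with a crop box that is allowed to extend past the image border, with out-of-range pixels filled with zero. A minimal pure-Python sketch of that behavior, assuming zero fill; `crop_with_fill` is a hypothetical helper, not Pillow's implementation:

```python
def crop_with_fill(img, top, left, height, width, fill=0):
    """Crop a 2-D list; positions outside the image get `fill` instead of wrapping around."""
    h, w = len(img), len(img[0])
    return [
        [img[r][c] if 0 <= r < h and 0 <= c < w else fill
         for c in range(left, left + width)]
        for r in range(top, top + height)
    ]


img = [[127] * 100 for _ in range(100)]  # 100x100 gray image, single channel
# A centered 150x150 box around a 100x100 image starts at (-25, -25).
out = crop_with_fill(img, -25, -25, 150, 150)
print(len(out), len(out[0]))  # 150 150
print(out[0][0], out[75][75])  # 0 127
```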


@vfdev-5 You mentioned:

Did you mean "150x150" here? PIL seems to give the correct output so far, so raising an error would break backward compatibility. Would that be fine?

I mean something like:

```python
import pytest
import torchvision.transforms as transforms

# x = PIL image or tensor
with pytest.raises(ValueError, match=r"Crop size should be smaller than input size"):
    transforms.CenterCrop(150)(x)
```

No, PIL is incorrect as well, since the data is still 100x100. I mean that it didn't pad the image.
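The error proposed above could come from a size check run before cropping. A minimal sketch, where `check_crop_size` is a hypothetical helper (not torchvision API) and sizes are assumed to be `(height, width)` tuples; note that this is the alternative to padding under discussion, and it would change the current PIL behavior:

```python
def check_crop_size(image_size, crop_size):
    """Raise ValueError if a (height, width) crop does not fit in a (height, width) image."""
    (h, w), (crop_h, crop_w) = image_size, crop_size
    if crop_h > h or crop_w > w:
        raise ValueError(
            f"Crop size should be smaller than input size, "
            f"got crop {crop_size} for input {image_size}"
        )


check_crop_size((100, 100), (50, 50))  # fits, no error
try:
    check_crop_size((100, 100), (150, 150))
except ValueError as err:
    print(err)
```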


@vfdev-5 First, I am really sorry if I am mistaken or confused, and I would really appreciate your time and an explanation here.

The padding is applied to the output, not to the input of the CenterCrop method, which is expected: the input should not be changed. I say "should" after skimming various transforms; I didn't see any in-place ops that modify the input. The first image, I1, shows a sample image from PASCAL and the CenterCrop output with a crop size larger than the image size: the output is padded. The second image, I2, shows that even when the crop size is smaller than the image size, the input image to the CenterCrop method is not modified. I only ran this test to check my assumption that transforms do not change their input.

@saurabheights No worries, I also appreciate the time you're spending on this issue and checking things! Thanks a lot! It is a very interesting example, and this is not what I got in my tests. Let me recheck. Meanwhile, which version of PIL do you have? EDIT: Well, it is a bug in the tensor path that we need to fix. We already did something similar for padding when the padding requires a crop:


No worries, and thank you for pad_symmetric; it would work fine here. I tested on PIL versions '6.2.1' (which I found is too old, so I retested on newer versions :D) and '7.2.0'. And yep, there was padding in both. Regarding the ETA: I will hopefully make the changes in 3 or 4 days (it's only a few hours of work, but this is my first time working on the vision lib, so the setup might delay me a little).
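The earlier remark about padding that requires a crop presumably refers to negative padding amounts: padding a side by a negative number of pixels removes pixels from that side, which is the mirror image of cropping to a larger size. A one-dimensional pure-Python sketch of the idea; `pad_1d` is a hypothetical helper, not torchvision's pad_symmetric code:

```python
def pad_1d(values, left, right, fill=0):
    """Pad a list by `left`/`right` items; a negative amount removes items instead."""
    out = list(values)
    # Negative padding crops from the corresponding side.
    if left < 0:
        out = out[-left:]
    if right < 0:
        out = out[:max(len(out) + right, 0)]
    # Positive padding adds `fill` items (max(..., 0) skips sides that were cropped).
    return [fill] * max(left, 0) + out + [fill] * max(right, 0)


print(pad_1d([1, 2, 3, 4], 1, 2))    # [0, 1, 2, 3, 4, 0, 0]
print(pad_1d([1, 2, 3, 4], -1, -1))  # [2, 3]
```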


Sounds good! Please take a look at our contributing guide, which may help you with the dev setup etc. A draft PR is also fine, so that we can iterate on the implementation if needed, and do not hesitate to ask questions here for details.


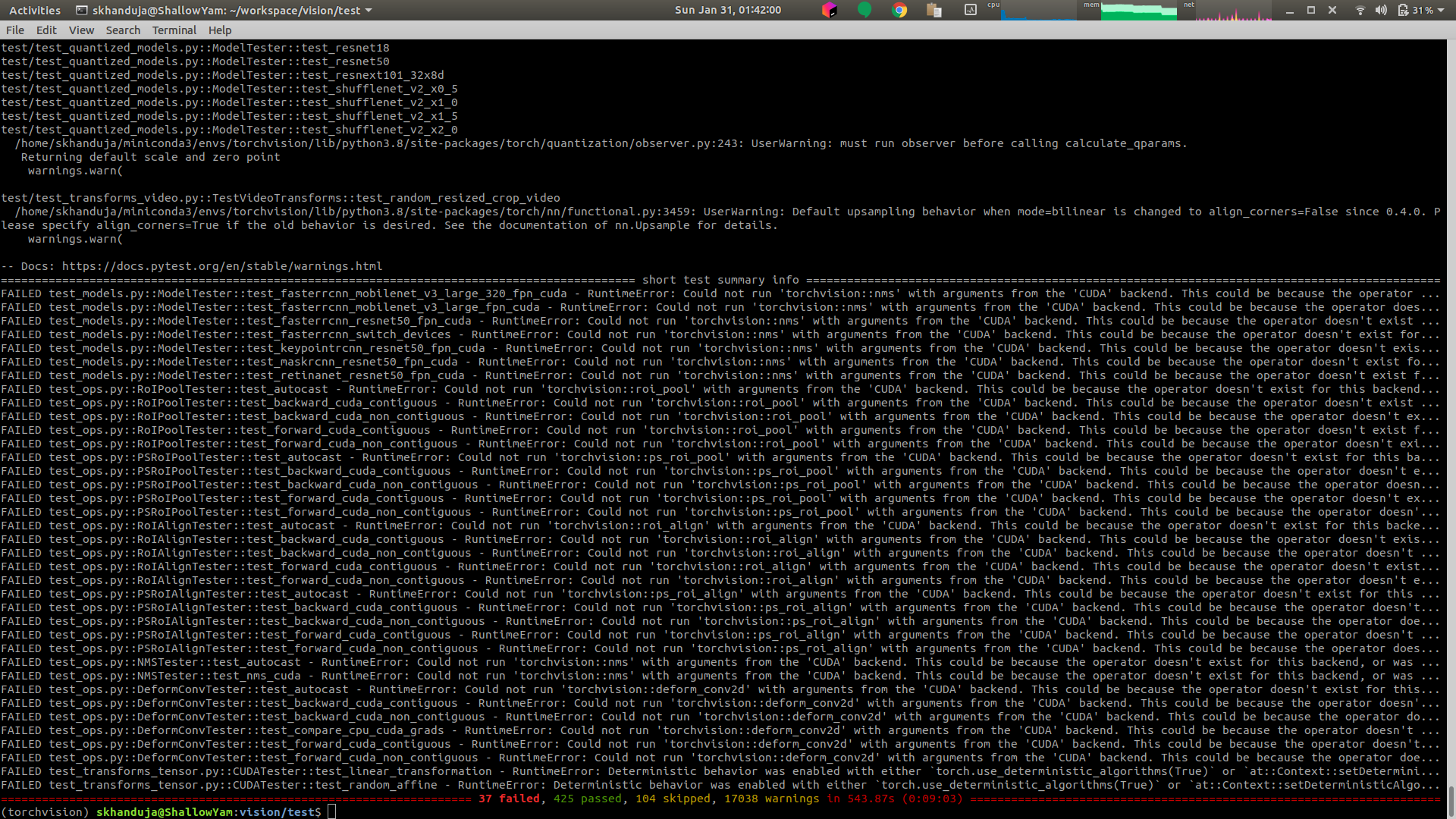

I have finished the changes here: master...saurabheights:bugfix/center-cropsize-greater-imgsize. I have a conda env with pytorch-nightly installed, and I built torchvision from source with my fix. AFAIK, none of the failing tests are related to my changes. Shall I open the PR or fix these issues first?


@saurabheights The errors you get on the screenshot seem to be related to your development environment, not to your changes. You can try fixing them so that contributing is easier in the future, but if you don't have time to fix this locally, just confirm that the unit tests relevant to your changes pass and send a PR. Our CI will run all the tests anyway, so we will be able to see if anything breaks.

Thanks.

🐛 Bug

The CenterCrop transform crops the center of an image. However, when the crop size is larger than the image size (in any dimension), the results differ between PIL.Image input and tensor input.

For PIL.Image, the output is padded, while for tensors, the results are completely wrong due to an incorrect crop-location computation (negative position values are computed).
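The tensor result can be explained by Python slicing semantics. Assuming the offset is computed as `int((h - crop_h + 1) * 0.5)` (an assumption, chosen because it reproduces the 24x24 output reported in this thread), a 150-pixel crop of a 100-pixel side yields a negative start index, which slicing reads as "count from the end" rather than as an error. A pure-Python sketch of one side; `center_crop_slice` is a hypothetical helper:

```python
def center_crop_slice(length, crop):
    """Indices kept when slicing a side of `length` pixels at the assumed center offset."""
    # int() truncates toward zero: int(-24.5) == -24.
    start = int((length - crop + 1) * 0.5)
    # A negative start counts from the end, and the stop is clamped to `length`.
    return list(range(length))[start:start + crop]


kept = center_crop_slice(100, 150)
print(kept[0], kept[-1], len(kept))  # 76 99 24
print(len(center_crop_slice(100, 50)))  # 50
```

Because slicing never raises here, the bug silently returns a small bottom-right patch instead of a padded 150x150 crop.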

To Reproduce

Steps to reproduce the behavior:

Expected behavior

Mainly, the API should be consistent for both inputs, or there should be two different methods for PIL and tensor input (which is, IMO, less appealing).
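One way to make both inputs behave like the PIL output is to zero-pad the image up to the crop size first, so the center offsets can never be negative, and then crop as usual. A minimal pure-Python sketch on a 2-D list; `center_crop_padded` is a hypothetical name, and the placement of an odd extra row or column (bottom/right here) is an assumption that may differ from PIL:

```python
def center_crop_padded(img, crop_h, crop_w, fill=0):
    """Center-crop a 2-D list of rows, zero-padding first if the crop is larger."""
    h, w = len(img), len(img[0])
    # Split missing rows/columns between the two sides; an odd extra goes bottom/right.
    pad_h, pad_w = max(crop_h - h, 0), max(crop_w - w, 0)
    top_pad, left_pad = pad_h // 2, pad_w // 2
    bottom_pad, right_pad = pad_h - top_pad, pad_w - left_pad
    new_w = w + pad_w
    padded = (
        [[fill] * new_w for _ in range(top_pad)]
        + [[fill] * left_pad + list(row) + [fill] * right_pad for row in img]
        + [[fill] * new_w for _ in range(bottom_pad)]
    )
    # After padding the image is at least as large as the crop, so offsets are >= 0.
    top = (len(padded) - crop_h) // 2
    left = (new_w - crop_w) // 2
    return [row[left:left + crop_w] for row in padded[top:top + crop_h]]


ones = [[1] * 4 for _ in range(4)]
out = center_crop_padded(ones, 6, 6)
print(len(out), len(out[0]))  # 6 6
print(out[0], out[1])  # [0, 0, 0, 0, 0, 0] [0, 1, 1, 1, 1, 0]
```

The same function handles crops that are smaller in one dimension and larger in the other, since padding is computed per dimension.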

Side note: the docs should be updated to describe what happens when crop_size is greater than the image size. I believe this case is also missing from the documentation of the other crop methods.

Environment

cc @vfdev-5
