diff --git a/.github/styles/Vocab/Timescale/accept.txt b/.github/styles/Vocab/Timescale/accept.txt

index 0f12e77ca5..cd7dfb7f28 100644

--- a/.github/styles/Vocab/Timescale/accept.txt

+++ b/.github/styles/Vocab/Timescale/accept.txt

@@ -2,6 +2,7 @@

Aiven

Alertmanager

API

+api

Anthropic

async

[Aa]utoscal(?:e|ing)

@@ -16,6 +17,7 @@ https?

BSD

[Cc]allouts?

COPY

+[Cc]opy

[Cc]lickstreams?

Cloudformation

Cloudwatch

diff --git a/.github/styles/config/vocabularies/Timescale/accept.txt b/.github/styles/config/vocabularies/Timescale/accept.txt

new file mode 100644

index 0000000000..cd7dfb7f28

--- /dev/null

+++ b/.github/styles/config/vocabularies/Timescale/accept.txt

@@ -0,0 +1,159 @@

+[Aa]ccessor

+Aiven

+Alertmanager

+API

+api

+Anthropic

+async

+[Aa]utoscal(?:e|ing)

+[Bb]ackfill(?:ing)?

+cron

+csv

+https?

+(?i)bigint

+[Bb]itemporal

+[Bb]lockchains?

+[Bb]oolean

+BSD

+[Cc]allouts?

+COPY

+[Cc]opy

+[Cc]lickstreams?

+Cloudformation

+Cloudwatch

+config

+[Cc]onstif(?:y|ied)

+[Cc]rypto

+[Cc]ryptocurrenc(?:y|ies)

+Datadog

+[Dd]efragment(:?s|ed)?

+Django

+distincts

+Docker

+[Dd]ownsampl(?:e|ing)

+erroring

+Ethereum

+[Ff]ailover

+[Ff]inalizers?

+[Ff]orex

+[Gg]apfill(?:ing)?

+[Gg]eospatial

+GitHub

+GNU

+Grafana

+GUC

+gsed

+gzip(?:ped)?

+Hasura

+HipChat

+[Hh]ostname

+href

+[Hh]yperloglog

+[Hh]yperfunctions?

+[Hh]ypertables?

+[Hh]ypershift

+img

+Indri

+[Ii]nserter

+[Ii]ntraday

+[Iin]validation

+ivfflat

+jpg

+JDBC

+JDK

+JSON

+Kafka

+Kaggle

+Kinesis

+[Ll]ambda

+LangChain

+LlamaIndex

+LLMs

+[Ll]ookups?

+loopback

+[Mm]atchers?

+[Mm]aterializer

+(?i)MST

+matplotlib

+[Mm]utators?

+Nagios

+[Nn]amespaces?

+[Nn]ullable

+Outflux

+[Pp]ageviews?

+[Pp]aralleliza(?:ble|tion)

+[Pp]athname

+Patroni

+Paulo

+[Pp]erformant

+pg_dump

+pg_restore

+[Pp]gvector

+[Pp]laintext

+Plotly

+pre

+POSIX

+PostgreSQL

+[Pp]ooler?

+Prometheus

+PromLens

+PromQL

+Promscale

+Protobuf

+psql

+[Qq]uantiles?

+qStudio

+RDS

+[Rr]edistribut(?:e|able)

+[Rr]eindex(?:ed)?

+reltuples

+[Rr]eusability

+[Rr]unbooks?

+[Ss]crollable

+Sequelize

+[Ss]ignups?

+[Ss]iloed

+(?i)smallint

+sed

+src

+[Ss]ubquer(?:y|ies)

+[Ss]ubsets?

+[Ss]upersets?

+[Tt]ablespaces?

+Telegraf

+Thanos

+Threema

+[Tt]iering

+[Tt]imevectors?

+Timescale(?:DB)?

+tobs

+[Tt]ransactionally

+tsdbadmin

+Uber

+[Uu]nary

+[Uu]ncomment

+[Uu]nencrypted

+Unix

+[Uu]nmaterialized

+[Uu]nregister

+[Uu]nthrottled?

+[Uu]ntier

+[Uu]pserts?

+[Rr]ebalanc(?:e|ing)

+[Rr]epos?

+[Rr]ollups?

+[Ss]erverless

+[Ss]hard(?:s|ing)?

+SkipScan

+(?i)timestamptz

+URLs?

+URIs?

+UUID

+versionContent

+[Vv]irtualenv

+WAL

+[Ww]ebsockets?

+Worldmap

+www

+Zabbix

+Zipkin

diff --git a/_partials/_add-data-blockchain.md b/_partials/_add-data-blockchain.md

index 924f31d044..5e359ee738 100644

--- a/_partials/_add-data-blockchain.md

+++ b/_partials/_add-data-blockchain.md

@@ -1,16 +1,14 @@

## Load financial data

-This tutorial uses Bitcoin transactions from the past five days.

-

-## Ingest the dataset

+The dataset contains around 1.5 million Bitcoin transactions, the trades for five days. It includes

+information about each transaction, along with the value in [satoshi][satoshi-def]. It also states if a

+trade is a [coinbase][coinbase-def] transaction, and the reward a coin miner receives for mining the coin.

To ingest data into the tables that you created, you need to download the

dataset and copy the data to your database.

-### Ingesting the dataset

-

1. Download the `bitcoin_sample.zip` file. The file contains a `.csv`

file that contains Bitcoin transactions for the past five days. Download:

@@ -37,3 +35,6 @@ dataset and copy the data to your database.

resources.

+

+[satoshi-def]: https://www.pcmag.com/encyclopedia/term/satoshi

+[coinbase-def]: https://www.pcmag.com/encyclopedia/term/coinbase-transaction

diff --git a/_partials/_add-data-energy.md b/_partials/_add-data-energy.md

index a10dc6b3e6..c42420ae13 100644

--- a/_partials/_add-data-energy.md

+++ b/_partials/_add-data-energy.md

@@ -5,8 +5,6 @@ into the `metrics` hypertable.

-### Loading energy consumption data

-

This is a large dataset, so it might take a long time, depending on your network

connection.

diff --git a/_partials/_add-data-nyctaxis.md b/_partials/_add-data-nyctaxis.md

index 60b9f5231a..8f5d1313e6 100644

--- a/_partials/_add-data-nyctaxis.md

+++ b/_partials/_add-data-nyctaxis.md

@@ -5,8 +5,6 @@ When you have your database set up, you can load the taxi trip data into the

-### Loading trip data

-

This is a large dataset, so it might take a long time, depending on your network

connection.

diff --git a/_partials/_add-data-twelvedata-crypto.md b/_partials/_add-data-twelvedata-crypto.md

index 5e35ecb48a..3021b352d4 100644

--- a/_partials/_add-data-twelvedata-crypto.md

+++ b/_partials/_add-data-twelvedata-crypto.md

@@ -1,29 +1,29 @@

## Load financial data

This tutorial uses real-time cryptocurrency data, also known as tick data, from

-[Twelve Data][twelve-data]. A direct download link is provided below.

+[Twelve Data][twelve-data]. To ingest data into the tables that you created, you need to

+download the dataset, then upload the data to your $SERVICE_LONG.

-### Ingest the dataset

+

-To ingest data into the tables that you created, you need to download the

-dataset and copy the data to your database.

-

+1. Unzip [crypto_sample.zip](https://assets.timescale.com/docs/downloads/candlestick/crypto_sample.zip) to a ``.

-#### Ingesting the dataset

+ This test dataset contains second-by-second trade data for the most-traded crypto-assets

+ and a regular table of asset symbols and company names.

-1. Download the `crypto_sample.zip` file. The file contains two `.csv`

- files; one with company information, and one with real-time stock trades for

- the past month. Download:

- [crypto_sample.zip](https://assets.timescale.com/docs/downloads/candlestick/crypto_sample.zip)

-

+ To import up to 100GB of data directly from your current PostgreSQL based database,

+ [migrate with downtime][migrate-with-downtime] using native PostgreSQL tooling. To seamlessly import 100GB-10TB+

+ of data, use the [live migration][migrate-live] tooling supplied by $COMPANY. To add data from non-PostgreSQL

+ data sources, see [Import and ingest data][data-ingest].

-1. In a new terminal window, run this command to unzip the `.csv` files:

- ```bash

- unzip crypto_sample.zip

- ```

+

+1. In Terminal, navigate to `` and connect to your $SERVICE_SHORT.

+ ```bash

+ psql -d "postgres://:@:/"

+ ```

+ The connection information for a $SERVICE_SHORT is available in the file you downloaded when you created it.

1. At the `psql` prompt, use the `COPY` command to transfer data into your

Timescale instance. If the `.csv` files aren't in your current directory,

@@ -44,3 +44,6 @@ dataset and copy the data to your database.

[twelve-data]: https://twelvedata.com/

+[migrate-with-downtime]: /migrate/:currentVersion:/pg-dump-and-restore/

+[migrate-live]: /migrate/:currentVersion:/live-migration/

+[data-ingest]: /use-timescale/:currentVersion:/ingest-data/

diff --git a/_partials/_add-data-twelvedata-stocks.md b/_partials/_add-data-twelvedata-stocks.md

index 7be487e717..fb3a13b13d 100644

--- a/_partials/_add-data-twelvedata-stocks.md

+++ b/_partials/_add-data-twelvedata-stocks.md

@@ -3,15 +3,11 @@

This tutorial uses real-time stock trade data, also known as tick data, from

[Twelve Data][twelve-data]. A direct download link is provided below.

-## Ingest the dataset

-

To ingest data into the tables that you created, you need to download the

dataset and copy the data to your database.

-#### Ingesting the dataset

-

1. Download the `real_time_stock_data.zip` file. The file contains two `.csv`

files; one with company information, and one with real-time stock trades for

the past month. Download:

diff --git a/_partials/_caggs-intro.md b/_partials/_caggs-intro.md

index 220c6053fc..1215a8f69f 100644

--- a/_partials/_caggs-intro.md

+++ b/_partials/_caggs-intro.md

@@ -1,12 +1,12 @@

-Time-series data usually grows very quickly. And that means that aggregating the

-data into useful summaries can become very slow. Continuous aggregates makes

-aggregating data lightning fast.

+In modern applications, data usually grows very quickly. This means that aggregating

+it into useful summaries can become very slow. $CLOUD_LONG continuous aggregates make

+aggregating data lightning fast, accurate, and easy.

If you are collecting data very frequently, you might want to aggregate your

-data into minutes or hours instead. For example, if you have a table of

-temperature readings taken every second, you can find the average temperature

+data into minutes or hours instead. For example, if an IoT device takes

+temperature readings every second, you might want to find the average temperature

for each hour. Every time you run this query, the database needs to scan the

-entire table and recalculate the average every time.

+entire table and recalculate the average.

Continuous aggregates are a kind of hypertable that is refreshed automatically

in the background as new data is added, or old data is modified. Changes to your

@@ -21,10 +21,10 @@ means that you can get on with working your data instead of maintaining your

database.

Because continuous aggregates are based on hypertables, you can query them in

-exactly the same way as your other tables, and enable [Hypercore][hypercore]

-or [tiered storage][data-tiering] on your continuous aggregates. You can even

+exactly the same way as your other tables, and enable [compression][compression]

+or [tiered storage][data-tiering] on them. You can even

create

-[continuous aggregates on top of your continuous aggregates][hierarchical-caggs].

+[continuous aggregates on top of your continuous aggregates][hierarchical-caggs] - for an even more fine-tuned aggregation.

By default, querying continuous aggregates provides you with real-time data.

Pre-aggregated data from the materialized view is combined with recent data that

diff --git a/_partials/_cloud-create-connect-tutorials.md b/_partials/_cloud-create-connect-tutorials.md

index 55bae061e2..a96cbf3892 100644

--- a/_partials/_cloud-create-connect-tutorials.md

+++ b/_partials/_cloud-create-connect-tutorials.md

@@ -6,9 +6,7 @@ command-line utility. If you've used PostgreSQL before, you might already have

-### Create a Timescale service and connect to the service

-

-1. In the [Timescale portal][timescale-portal], click `Create service`.

+1. In the [$CONSOLE][timescale-portal], click `Create service`.

1. Click `Download the cheatsheet` to download an SQL file that contains the

login details for your new service. You can also copy the details directly

from this page. When you have copied your password,

diff --git a/_partials/_create-hypertable-blockchain.md b/_partials/_create-hypertable-blockchain.md

index 84761f1e02..9d277e6130 100644

--- a/_partials/_create-hypertable-blockchain.md

+++ b/_partials/_create-hypertable-blockchain.md

@@ -1,5 +1,5 @@

-## Create a hypertable

+## Optimize time-series data in hypertables

Hypertables are the core of Timescale. Hypertables enable Timescale to work

efficiently with time-series data. Because Timescale is PostgreSQL, all the

@@ -9,8 +9,6 @@ with Timescale tables similar to standard PostgreSQL.

-### Creating a hypertable

-

1. Create a standard PostgreSQL table to store the Bitcoin blockchain data

using `CREATE TABLE`:

diff --git a/_partials/_create-hypertable-energy.md b/_partials/_create-hypertable-energy.md

index 235a90d788..ff75bf552e 100644

--- a/_partials/_create-hypertable-energy.md

+++ b/_partials/_create-hypertable-energy.md

@@ -1,4 +1,4 @@

-## Create a hypertable

+## Optimize time-series data in hypertables

Hypertables are the core of Timescale. Hypertables enable Timescale to work

efficiently with time-series data. Because Timescale is PostgreSQL, all the

@@ -8,8 +8,6 @@ with Timescale tables similar to standard PostgreSQL.

-### Creating a hypertable

-

1. Create a standard PostgreSQL table to store the energy consumption data

using `CREATE TABLE`:

diff --git a/_partials/_create-hypertable-nyctaxis.md b/_partials/_create-hypertable-nyctaxis.md

index b41a579de4..3400e849b6 100644

--- a/_partials/_create-hypertable-nyctaxis.md

+++ b/_partials/_create-hypertable-nyctaxis.md

@@ -1,17 +1,16 @@

-## Create a hypertable

+## Optimize time-series data in hypertables

-Hypertables are the core of Timescale. Hypertables enable Timescale to work

-efficiently with time-series data. Because Timescale is PostgreSQL, all the

-standard PostgreSQL tables, indexes, stored procedures and other objects can be

-created alongside your Timescale hypertables. This makes creating and working

-with Timescale tables similar to standard PostgreSQL.

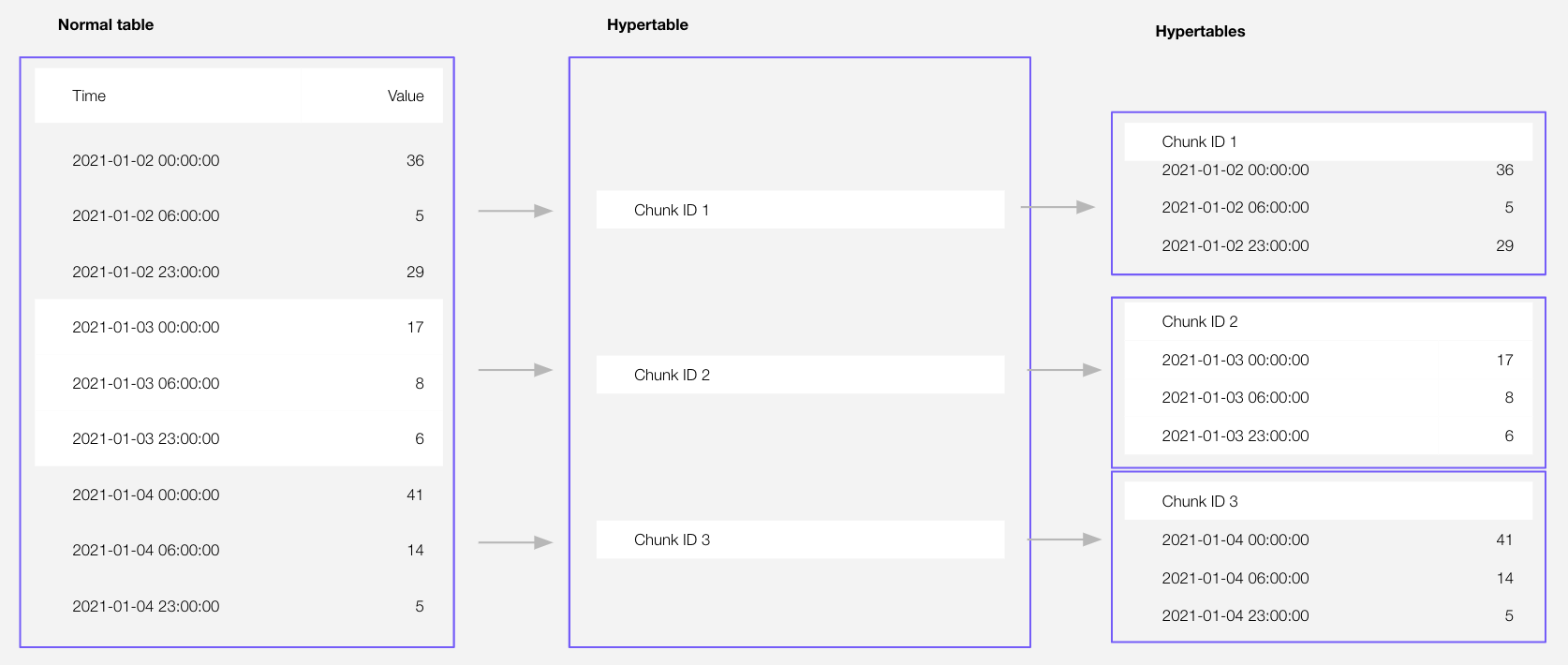

+Time-series data represents how a system, process, or behavior changes over time. [Hypertables][hypertables-section]

+are PostgreSQL tables that help you improve insert and query performance by automatically partitioning your data by

+time. Each hypertable is made up of child tables called chunks. Each chunk is assigned a range of time, and only

+contains data from that range.

-

+Hypertables exist alongside regular PostgreSQL tables. You use regular PostgreSQL tables for relational data, and

+interact with hypertables and regular PostgreSQL tables in the same way.

-### Creating a hypertable

+

-1. Create a standard PostgreSQL table to store the taxi trip data

- using `CREATE TABLE`:

+1. **Create a standard PostgreSQL table to store the taxi trip data**

```sql

CREATE TABLE "rides"(

@@ -36,7 +35,8 @@ with Timescale tables similar to standard PostgreSQL.

);

```

-1. Convert the standard table into a hypertable partitioned on the `time`

+1. **Convert the standard table into a hypertable**

+ Partitioned on the `time`

column using the `create_hypertable()` function provided by Timescale. You

must provide the name of the table and the column in that table that holds

the timestamp data to use for partitioning:

@@ -69,8 +69,6 @@ there are two other tables of data, called `payment_types` and `rates`.

-### Creating standard PostgreSQL tables

-

1. Add a table to store the payment types data:

```sql

@@ -117,3 +115,6 @@ the `psql` command line. You should see this:

public | rides | table | tsdbadmin

(3 rows)

```

+

+

+[hypertables-section]: /use-timescale/:currentVersion:/hypertables/

diff --git a/_partials/_create-hypertable-twelvedata-crypto.md b/_partials/_create-hypertable-twelvedata-crypto.md

index 4412295ea7..05739038d2 100644

--- a/_partials/_create-hypertable-twelvedata-crypto.md

+++ b/_partials/_create-hypertable-twelvedata-crypto.md

@@ -1,18 +1,22 @@

-## Create a hypertable

+## Optimize time-series data in a hypertable

-Hypertables are the core of Timescale. Hypertables enable Timescale to work

-efficiently with time-series data. Because Timescale is PostgreSQL, all the

-standard PostgreSQL tables, indexes, stored procedures, and other objects can be

-created alongside your Timescale hypertables. This makes creating and working

-with Timescale tables similar to standard PostgreSQL.

+Hypertables are the core of $TIMESCALE_DB, they enable $CLOUD_LONG to work

+efficiently with time-series data. Hypertables are PostgreSQL tables that automatically

+partition your time-series data by time. When you run a query, $CLOUD_LONG identifies the

+correct partition and runs the query on it, instead of going through the entire table.

+

+Because $TIMESCALE_DB is 100% PostgreSQL, you can create standard PostgreSQL tables, indexes, stored

+procedures, and other objects alongside your Timescale hypertables. This makes creating and working

+with hypertables similar to standard PostgreSQL.

-### Creating a hypertable

+1. Connect to your $SERVICE_LONG.

+

+ In [$CONSOLE][services-portal] open an [SQL editor][in-console-editors]. You can also connect to your service using [psql][connect-using-psql].

-1. Create a standard PostgreSQL table to store the real-time cryptocurrency data

- using `CREATE TABLE`:

+1. Create a standard PostgreSQL table to store the real-time cryptocurrency data:

```sql

CREATE TABLE crypto_ticks (

@@ -32,23 +36,16 @@ with Timescale tables similar to standard PostgreSQL.

SELECT create_hypertable('crypto_ticks', by_range('time'));

```

-

- The `by_range` dimension builder is an addition to TimescaleDB 2.13.

-

-

-## Create standard PostgreSQL tables for relational data

+## Create a standard PostgreSQL table for relational data

-When you have other relational data that enhances your time-series data, you can

-create standard PostgreSQL tables just as you would normally. For this dataset,

-there is one other table of data called `crypto_assets`.

+When you have relational data that enhances your time-series data, store that data in

+standard PostgreSQL relational tables.

-### Creating standard PostgreSQL tables

-

-1. Add a table to store the company name and symbol for the stock trade data:

+1. Add a table to store the asset symbol and name in a relational table:

```sql

CREATE TABLE crypto_assets (

@@ -57,7 +54,11 @@ there is one other table of data called `crypto_assets`.

);

```

-1. You now have two tables within your Timescale database. One hypertable

- named `crypto_ticks`, and one normal PostgreSQL table named `crypto_assets`.

-

+

+You now have two tables within your $SERVICE_LONG. A hypertable named `crypto_ticks`, and a normal

+PostgreSQL table named `crypto_assets`.

+

+[in-console-editors]: /getting-started/:currentVersion:/run-queries-from-console/

+[services-portal]: https://console.cloud.timescale.com/dashboard/services

+[connect-using-psql]: /use-timescale/:currentVersion:/integrations/query-admin/psql#connect-to-your-service

diff --git a/_partials/_create-hypertable-twelvedata-stocks.md b/_partials/_create-hypertable-twelvedata-stocks.md

index 85727a9fdc..6e06273e9b 100644

--- a/_partials/_create-hypertable-twelvedata-stocks.md

+++ b/_partials/_create-hypertable-twelvedata-stocks.md

@@ -1,5 +1,5 @@

-## Create a hypertable

+## Optimize time-series data in hypertables

Hypertables are the core of Timescale. Hypertables enable Timescale to work

efficiently with time-series data. Because Timescale is PostgreSQL, all the

@@ -9,8 +9,6 @@ with Timescale tables similar to standard PostgreSQL.

-### Creating a hypertable

-

1. Create a standard PostgreSQL table to store the real-time stock trade data

using `CREATE TABLE`:

diff --git a/_partials/_financial-industry-data-analysis.md b/_partials/_financial-industry-data-analysis.md

new file mode 100644

index 0000000000..bfd6293928

--- /dev/null

+++ b/_partials/_financial-industry-data-analysis.md

@@ -0,0 +1 @@

+The financial industry is extremely data-heavy and relies on real-time and historical data for decision-making, risk assessment, fraud detection, and market analysis. Timescale simplifies management of these large volumes of data, while also providing you with meaningful analytical insights and optimizing storage costs.

\ No newline at end of file

diff --git a/_partials/_grafana-connect.md b/_partials/_grafana-connect.md

index fdc3619001..0bce6cd58b 100644

--- a/_partials/_grafana-connect.md

+++ b/_partials/_grafana-connect.md

@@ -1,14 +1,6 @@

-## Prerequisites

+## Connect Grafana to $CLOUD_LONG

-import IntegrationPrereqs from "versionContent/_partials/_integration-prereqs.mdx";

-

-

-

-* Install [self-managed Grafana][grafana-self-managed] or sign up for [Grafana Cloud][grafana-cloud].

-

-## Add your $SERVICE_SHORT as a data source

-

-To connect the data in your $SERVICE_SHORT to Grafana:

+To visualize the results of your queries, enable Grafana to read the data in your $SERVICE_SHORT:

@@ -21,15 +13,16 @@ To connect the data in your $SERVICE_SHORT to Grafana:

1. Open `Connections` > `Data sources`, then click `Add new data source`.

1. Select `PostgreSQL` from the list.

1. Configure the connection:

- - `Host URL`, `Username`, `Password`, and `Database`: configure using your [connection details][connection-info].

- - `Database name`: provide the name for your dataset.

- - `TLS/SSL Mode`: select `require`.

- - `PostgreSQL options`: enable `TimescaleDB`.

- - Leave the default setting for all other fields.

-

- 1. **Click `Save & test`**

+ - `Host URL`, `Database name`, `Username`, and `Password`

+

+ Configure using your [connection details][connection-info]. `Host URL` is in the format `:`.

+ - `TLS/SSL Mode`: select `require`.

+ - `PostgreSQL options`: enable `TimescaleDB`.

+ - Leave the default setting for all other fields.

+

+ 1. Click `Save & test`.

- Grafana checks that your details are set correctly.

+ Grafana checks that your details are set correctly.

diff --git a/_partials/_graphing-ohlcv-data.md b/_partials/_graphing-ohlcv-data.md

index 2e7a51d53b..272ac3d156 100644

--- a/_partials/_graphing-ohlcv-data.md

+++ b/_partials/_graphing-ohlcv-data.md

@@ -9,12 +9,12 @@ up to connect to your TimescaleDB database.

### Graphing OHLCV data

1. Ensure you have Grafana installed, and you are using the TimescaleDB

- database that contains the Twelve Data stocks dataset set up as a

+ database that contains the Twelve Data dataset set up as a

data source.

1. In Grafana, from the `Dashboards` menu, click `New Dashboard`. In the

`New Dashboard` page, click `Add a new panel`.

1. In the `Visualizations` menu in the top right corner, select `Candlestick`

- from the list. Ensure you have set the Twelve Data stocks dataset as

+ from the list. Ensure you have set the Twelve Data dataset as

your data source.

1. Click `Edit SQL` and paste in the query you used to get the OHLCV values.

1. In the `Format as` section, select `Table`.

diff --git a/_partials/_high-availability-setup.md b/_partials/_high-availability-setup.md

new file mode 100644

index 0000000000..5eb9eb15d1

--- /dev/null

+++ b/_partials/_high-availability-setup.md

@@ -0,0 +1,13 @@

+

+

+1. In [Timescale Console][cloud-login], select the service to enable replication for.

+1. Click `Operations`, then select `High availability`.

+1. Choose your replication strategy, then click `Change configuration`.

+

+

+

+1. In `Change high availability configuration`, click `Change config`.

+

+

+

+[cloud-login]: https://console.cloud.timescale.com

diff --git a/_partials/_hypercore_policy_workflow.md b/_partials/_hypercore_policy_workflow.md

index c980d6907d..1d762ff278 100644

--- a/_partials/_hypercore_policy_workflow.md

+++ b/_partials/_hypercore_policy_workflow.md

@@ -1,5 +1,7 @@

import EarlyAccess from "versionContent/_partials/_early_access.mdx";

+

+

1. **Connect to your $SERVICE_LONG**

In [$CONSOLE][services-portal] open an [SQL editor][in-console-editors]. You can also connect to your service using [psql][connect-using-psql].

@@ -79,6 +81,7 @@ import EarlyAccess from "versionContent/_partials/_early_access.mdx";

```

See [alter_table_hypercore][alter_table_hypercore].

+

[job]: /api/:currentVersion:/actions/add_job/

[alter_table_hypercore]: /api/:currentVersion:/hypercore/alter_table/

diff --git a/_partials/_hypertables-intro.md b/_partials/_hypertables-intro.md

index 5b3d450076..fb2d811fc3 100644

--- a/_partials/_hypertables-intro.md

+++ b/_partials/_hypertables-intro.md

@@ -1,15 +1,12 @@

-Hypertables are PostgreSQL tables that automatically partition your data by

-time. You interact with hypertables in the same way as regular PostgreSQL

-tables, but with extra features that makes managing your time-series data much

-easier.

-

-In Timescale, hypertables exist alongside regular PostgreSQL tables. Use

-hypertables to store time-series data. This gives you improved insert and query

-performance, and access to useful time-series features. Use regular PostgreSQL

-tables for other relational data.

-

-With hypertables, Timescale makes it easy to improve insert and query

-performance by partitioning time-series data on its time parameter. Behind the

-scenes, the database performs the work of setting up and maintaining the

-hypertable's partitions. Meanwhile, you insert and query your data as if it all

-lives in a single, regular PostgreSQL table.

+$CLOUD_LONG supercharges your real-time analytics by letting you run complex queries continuously, with near-zero latency. Under the hood, this is achieved by using hypertables - PostgreSQL tables that automatically partition your time-series data by time and optionally by space. When you run a query, $CLOUD_LONG identifies the correct partition and runs the query on it, instead of going through the entire table.

+

+Hypertables offer a range of other features, such as [skipping partitions][chunk-skipping] or running [hyperfunctions][hyperfunctions], that boost the performance of your analytical queries even more.

+

+To top it all, there is no added complexity - you interact with hypertables in the same way as you would with regular PostgreSQL tables. All the optimization magic happens behind the scenes.

+

+[chunk-skipping]: /use-timescale/:currentVersion:/hypertables/improve-query-performance/

+[hyperfunctions]: /use-timescale/:currentVersion:/hyperfunctions/

+

+

+

+

diff --git a/_partials/_import-data-iot.md b/_partials/_import-data-iot.md

new file mode 100644

index 0000000000..61a977f004

--- /dev/null

+++ b/_partials/_import-data-iot.md

@@ -0,0 +1,86 @@

+Time-series data represents how a system, process, or behavior changes over time. [Hypertables][hypertables-section]

+are PostgreSQL tables that help you improve insert and query performance by automatically partition your data by

+time, speeding up queries for real-time analytics and other challenging workloads. Each hypertable is made up of child

+tables called chunks. Each chunk is assigned a range of time, and only contains data from that range.

+

+

+

+1. **Import time-series data into a hypertable**

+

+ 1. Unzip [metrics.csv.gz](https://assets.timescale.com/docs/downloads/metrics.csv.gz) to a ``.

+

+ This test dataset contains energy consumption data.

+

+ To import up to 100GB of data directly from your current PostgreSQL based database,

+ [migrate with downtime][migrate-with-downtime] using native PostgreSQL tooling. To seamlessly import 100GB-10TB+

+ of data, use the [live migration][migrate-live] tooling supplied by $COMPANY. To add data from non-PostgreSQL

+ data sources, see [Import and ingest data][data-ingest].

+

+ 1. In Terminal, navigate to `` and update the following string with [your connection details][connection-info]

+ to connect to your $SERVICE_SHORT.

+

+ ```bash

+ psql -d "postgres://:@:/?sslmode=require"

+ ```

+

+ 1. Create tables to import time-series data:

+

+ 1. In your sql client, create a normal PostgreSQL table:

+

+ ```sql

+ CREATE TABLE "metrics"(

+ created timestamp with time zone default now() not null,

+ type_id integer not null,

+ value double precision not null

+ );

+ ```

+

+ 1. Convert `metrics` to a hypertable and partitioned on time:

+ ```sql

+ SELECT create_hypertable('metrics', by_range('created'));

+ ```

+ To more fully understand how hypertables work, and how to optimize them for performance by

+ tuning chunk intervals and enabling chunk skipping, see [the hypertables documentation][hypertables-section].

+

+ 1. Upload the dataset to your $SERVICE_SHORT

+ ```sql

+ \COPY metrics FROM metrics.csv CSV;

+ ```

+ To more fully understand how hypertables work, and how to optimize them for performance by

+ tuning chunk intervals and enabling chunk skipping, see [the hypertables documentation][hypertables-section].

+

+1. **Have a quick look at your data**

+

+ You query hypertables in exactly the same way as you would a relational PostgreSQL table.

+ Use one of the following SQL editors to run a query and see the data you uploaded:

+ - **Data mode**: write queries, visualize data, and share your results in [$CONSOLE][portal-data-mode] for all your $SERVICE_LONGs.

+ - **SQL editor**: write, fix, and organize SQL faster and more accurately in [$CONSOLE][portal-ops-mode] for a $SERVICE_LONG.

+ - **psql**: easily run queries on your $SERVICE_LONGs or self-hosted TimescaleDB deployment from Terminal.

+

+ ```sql

+ SELECT time_bucket('1 day', created, 'Europe/Berlin') AS "time",

+ round((last(value, created) - first(value, created)) * 100.) / 100. AS value

+ FROM metrics

+ WHERE type_id = 5

+ GROUP BY 1;

+ ```

+

+ On this amount of data, this query on data in the rowstore takes about 3.6 seconds. You see something like:

+

+ | Time | value |

+ |------------------------------|-------|

+ | 2023-05-29 22:00:00+00 | 23.1 |

+ | 2023-05-28 22:00:00+00 | 19.5 |

+ | 2023-05-30 22:00:00+00 | 25 |

+ | 2023-05-31 22:00:00+00 | 8.1 |

+

+

+

+[hypertables-section]: /use-timescale/:currentVersion:/hypertables/

+[portal-ops-mode]: https://console.cloud.timescale.com/dashboard/services

+[portal-data-mode]: https://console.cloud.timescale.com/dashboard/services?popsql

+[connection-info]: /use-timescale/:currentVersion:/integrations/find-connection-details/

+[migrate-with-downtime]: /migrate/:currentVersion:/pg-dump-and-restore/

+[migrate-live]: /migrate/:currentVersion:/live-migration/

+[data-ingest]: /use-timescale/:currentVersion:/ingest-data/

+

diff --git a/_partials/_import-data-nyc-taxis.md b/_partials/_import-data-nyc-taxis.md

new file mode 100644

index 0000000000..dca210b2cf

--- /dev/null

+++ b/_partials/_import-data-nyc-taxis.md

@@ -0,0 +1,168 @@

+Time-series data represents how a system, process, or behavior changes over time. [Hypertables][hypertables-section]

+are PostgreSQL tables that help you improve insert and query performance by automatically partition your data by

+time, speeding up queries for real-time analytics and other challenging workloads. Each hypertable is made up of child

+tables called chunks. Each chunk is assigned a range of time, and only contains data from that range.

+

+

+

+1. **Import time-series data into a hypertable**

+

+ 1. Unzip [nyc_data.tar.gz](https://assets.timescale.com/docs/downloads/nyc_data.tar.gz) to a ``.

+

+ This test dataset contains historical data from New York's yellow taxi network.

+

+ To import up to 100GB of data directly from your current PostgreSQL based database,

+ [migrate with downtime][migrate-with-downtime] using native PostgreSQL tooling. To seamlessly import 100GB-10TB+

+ of data, use the [live migration][migrate-live] tooling supplied by $COMPANY. To add data from non-PostgreSQL

+ data sources, see [Import and ingest data][data-ingest].

+

+ 1. In Terminal, navigate to `` and update the following string with [your connection details][connection-info]

+ to connect to your $SERVICE_SHORT.

+

+ ```bash

+ psql -d "postgres://:@:/?sslmode=require"

+ ```

+

+ 1. Create tables to import time-series data:

+

+ 1. In your sql client, create a normal PostgreSQL table:

+

+ ```sql

+ CREATE TABLE "rides"(

+ vendor_id TEXT,

+ pickup_datetime TIMESTAMP WITHOUT TIME ZONE NOT NULL,

+ dropoff_datetime TIMESTAMP WITHOUT TIME ZONE NOT NULL,

+ passenger_count NUMERIC,

+ trip_distance NUMERIC,

+ pickup_longitude NUMERIC,

+ pickup_latitude NUMERIC,

+ rate_code INTEGER,

+ dropoff_longitude NUMERIC,

+ dropoff_latitude NUMERIC,

+ payment_type INTEGER,

+ fare_amount NUMERIC,

+ extra NUMERIC,

+ mta_tax NUMERIC,

+ tip_amount NUMERIC,

+ tolls_amount NUMERIC,

+ improvement_surcharge NUMERIC,

+ total_amount NUMERIC

+ );

+ ```

+

+ 1. Convert `rides` to a hypertable and partitioned on time:

+ ```sql

+ SELECT create_hypertable('rides', by_range('pickup_datetime'), create_default_indexes=>FALSE);

+ SELECT add_dimension('rides', by_hash('payment_type', 2));

+ ```

+ To more fully understand how hypertables work, and how to optimize them for performance by

+ tuning chunk intervals and enabling chunk skipping, see [the hypertables documentation][hypertables-section].

+

+ 1. Create an index to support efficient queries by vendor, rate code, and passenger count:

+ ```sql

+ CREATE INDEX ON rides (vendor_id, pickup_datetime DESC);

+ CREATE INDEX ON rides (rate_code, pickup_datetime DESC);

+ CREATE INDEX ON rides (passenger_count, pickup_datetime DESC);

+ ```

+

+ 1. Create tables for relational data:

+

+ 1. Add a table to store the payment types data:

+

+ ```sql

+ CREATE TABLE IF NOT EXISTS "payment_types"(

+ payment_type INTEGER,

+ description TEXT

+ );

+ INSERT INTO payment_types(payment_type, description) VALUES

+ (1, 'credit card'),

+ (2, 'cash'),

+ (3, 'no charge'),

+ (4, 'dispute'),

+ (5, 'unknown'),

+ (6, 'voided trip');

+ ```

+

+ 1. Add a table to store the rates data:

+

+ ```sql

+ CREATE TABLE IF NOT EXISTS "rates"(

+ rate_code INTEGER,

+ description TEXT

+ );

+ INSERT INTO rates(rate_code, description) VALUES

+ (1, 'standard rate'),

+ (2, 'JFK'),

+ (3, 'Newark'),

+ (4, 'Nassau or Westchester'),

+ (5, 'negotiated fare'),

+ (6, 'group ride');

+ ```

+

+ 1. Upload the dataset to your $SERVICE_SHORT

+ ```sql

+ \COPY rides FROM nyc_data_rides.csv CSV;

+ ```

+ To more fully understand how hypertables work, and how to optimize them for performance by

+ tuning chunk intervals and enabling chunk skipping, see [the hypertables documentation][hypertables-section].

+

+1. **Have a quick look at your data**

+

+ You query hypertables in exactly the same way as you would a relational PostgreSQL table.

+ Use one of the following SQL editors to run a query and see the data you uploaded:

+ - **Data mode**: write queries, visualize data, and share your results in [$CONSOLE][portal-data-mode] for all your $SERVICE_LONGs.

+ - **SQL editor**: write, fix, and organize SQL faster and more accurately in [$CONSOLE][portal-ops-mode] for a $SERVICE_LONG.

+ - **psql**: easily run queries on your $SERVICE_LONGs or self-hosted TimescaleDB deployment from Terminal.

+

+ For example:

+ - Display the number of rides for each fare type:

+ ```sql

+ SELECT rate_code, COUNT(vendor_id) AS num_trips

+ FROM rides

+ WHERE pickup_datetime < '2016-01-08'

+ GROUP BY rate_code

+ ORDER BY rate_code;

+ ```

+ This simple query runs in 3 seconds. You see something like:

+

+ | rate_code | num_trips |

+ |-----------------|-----------|

+ |1 | 2266401|

+ |2 | 54832|

+ |3 | 4126|

+ |4 | 967|

+ |5 | 7193|

+ |6 | 17|

+ |99 | 42|

+

+ - To select all rides taken in the first week of January 2016, and return the total number of trips taken for each rate code:

+ ```sql

+ SELECT rates.description, COUNT(vendor_id) AS num_trips

+ FROM rides

+ JOIN rates ON rides.rate_code = rates.rate_code

+ WHERE pickup_datetime < '2016-01-08'

+ GROUP BY rates.description

+ ORDER BY LOWER(rates.description);

+ ```

+ On this large amount of data, this analytical query on data in the rowstore takes about 59 seconds. You see something like:

+

+ | description | num_trips |

+ |-----------------|-----------|

+ | group ride | 17 |

+ | JFK | 54832 |

+ | Nassau or Westchester | 967 |

+ | negotiated fare | 7193 |

+ | Newark | 4126 |

+ | standard rate | 2266401 |

+

+

+

+

+[hypertables-section]: /use-timescale/:currentVersion:/hypertables/

+[portal-ops-mode]: https://console.cloud.timescale.com/dashboard/services

+[portal-data-mode]: https://console.cloud.timescale.com/dashboard/services?popsql

+[connection-info]: /use-timescale/:currentVersion:/integrations/find-connection-details/

+[migrate-with-downtime]: /migrate/:currentVersion:/pg-dump-and-restore/

+[migrate-live]: /migrate/:currentVersion:/live-migration/

+[data-ingest]: /use-timescale/:currentVersion:/ingest-data/

+

diff --git a/_partials/_integration-prereqs-cloud-only.md b/_partials/_integration-prereqs-cloud-only.md

index b46e842697..0b21e814f9 100644

--- a/_partials/_integration-prereqs-cloud-only.md

+++ b/_partials/_integration-prereqs-cloud-only.md

@@ -1,5 +1,10 @@

-To follow the procedure on this page, you need to:

-* Create a target [$SERVICE_LONG][create-service].

+To follow the steps on this page:

+

+* Create a target [$SERVICE_LONG][create-service] with time-series and analytics enabled.

+

+ You need your [connection details][connection-info].

+

[create-service]: /getting-started/:currentVersion:/services/

+[connection-info]: /use-timescale/:currentVersion:/integrations/find-connection-details/

diff --git a/_partials/_integration-prereqs.md b/_partials/_integration-prereqs.md

index 26a70caf25..26d94388b6 100644

--- a/_partials/_integration-prereqs.md

+++ b/_partials/_integration-prereqs.md

@@ -1,10 +1,11 @@

-To follow the procedure on this page, you need to:

+To follow the steps on this page:

-* Create a target [$SERVICE_LONG][create-service]

+* Create a target [$SERVICE_LONG][create-service] with time-series and analytics enabled.

- You need your [connection details][connection-info] to follow the steps in this page. This procedure also

+ You need [your connection details][connection-info]. This procedure also

works for [self-hosted $TIMESCALE_DB][enable-timescaledb].

+

[create-service]: /getting-started/:currentVersion:/services/

[enable-timescaledb]: /self-hosted/:currentVersion:/install/

[connection-info]: /use-timescale/:currentVersion:/integrations/find-connection-details/

diff --git a/_partials/_usage-based-storage-intro.md b/_partials/_usage-based-storage-intro.md

index 0054c6fcc0..3ef590afc9 100644

--- a/_partials/_usage-based-storage-intro.md

+++ b/_partials/_usage-based-storage-intro.md

@@ -1,7 +1,7 @@

$CLOUD_LONG charges are based on the amount of storage you use. You don't pay for

fixed storage size, and you don't need to worry about scaling disk size as your

-data grows; We handle it all for you. To reduce your data costs further,

-use [Hypercore][hypercore], a [data retention policy][data-retention], and

+data grows - we handle it all for you. To reduce your data costs further,

+combine [hypercore][hypercore], a [data retention policy][data-retention], and

[tiered storage][data-tiering].

[hypercore]: /api/:currentVersion:/hypercore/

diff --git a/_partials/_use-case-iot-create-cagg.md b/_partials/_use-case-iot-create-cagg.md

new file mode 100644

index 0000000000..7a8f13940f

--- /dev/null

+++ b/_partials/_use-case-iot-create-cagg.md

@@ -0,0 +1,87 @@

+1. **Monitor energy consumption on a day-to-day basis**

+

+ 1. Create a continuous aggregate `kwh_day_by_day` for energy consumption:

+

+ ```sql

+ CREATE MATERIALIZED VIEW kwh_day_by_day(time, value)

+ with (timescaledb.continuous) as

+ SELECT time_bucket('1 day', created, 'Europe/Berlin') AS "time",

+ round((last(value, created) - first(value, created)) * 100.) / 100. AS value

+ FROM metrics

+ WHERE type_id = 5

+ GROUP BY 1;

+ ```

+

+ 1. Add a refresh policy to keep `kwh_day_by_day` up-to-date:

+

+ ```sql

+ SELECT add_continuous_aggregate_policy('kwh_day_by_day',

+ start_offset => NULL,

+ end_offset => INTERVAL '1 hour',

+ schedule_interval => INTERVAL '1 hour');

+ ```

+

+1. **Monitor energy consumption on an hourly basis**

+

+ 1. Create a continuous aggregate `kwh_hour_by_hour` for energy consumption:

+

+ ```sql

+ CREATE MATERIALIZED VIEW kwh_hour_by_hour(time, value)

+ with (timescaledb.continuous) as

+ SELECT time_bucket('01:00:00', metrics.created, 'Europe/Berlin') AS "time",

+ round((last(value, created) - first(value, created)) * 100.) / 100. AS value

+ FROM metrics

+ WHERE type_id = 5

+ GROUP BY 1;

+ ```

+

+ 1. Add a refresh policy to keep the continuous aggregate up-to-date:

+

+ ```sql

+ SELECT add_continuous_aggregate_policy('kwh_hour_by_hour',

+ start_offset => NULL,

+ end_offset => INTERVAL '1 hour',

+ schedule_interval => INTERVAL '1 hour');

+ ```

+

+1. **Analyze your data**

+

+ Now you have made continuous aggregates, it could be a good idea to use them to perform analytics on your data.

+ For example, to see how average energy consumption changes during weekdays over the last year, run the following query:

+ ```sql

+ WITH per_day AS (

+ SELECT

+ time,

+ value

+ FROM kwh_day_by_day

+ WHERE "time" at time zone 'Europe/Berlin' > date_trunc('month', time) - interval '1 year'

+ ORDER BY 1

+ ), daily AS (

+ SELECT

+ to_char(time, 'Dy') as day,

+ value

+ FROM per_day

+ ), percentile AS (

+ SELECT

+ day,

+ approx_percentile(0.50, percentile_agg(value)) as value

+ FROM daily

+ GROUP BY 1

+ ORDER BY 1

+ )

+ SELECT

+ d.day,

+ d.ordinal,

+ pd.value

+ FROM unnest(array['Sun', 'Mon', 'Tue', 'Wed', 'Thu', 'Fri', 'Sat']) WITH ORDINALITY AS d(day, ordinal)

+ LEFT JOIN percentile pd ON lower(pd.day) = lower(d.day);

+ ```

+

+ You see something like:

+

+ | day | ordinal | value |

+ | --- | ------- | ----- |

+ | Mon | 2 | 23.08078714975423 |

+ | Sun | 1 | 19.511430831944395 |

+ | Tue | 3 | 25.003118897837307 |

+ | Wed | 4 | 8.09300571759772 |

diff --git a/_partials/_use-case-setup-blockchain-dataset.md b/_partials/_use-case-setup-blockchain-dataset.md

new file mode 100644

index 0000000000..c1ddd2669f

--- /dev/null

+++ b/_partials/_use-case-setup-blockchain-dataset.md

@@ -0,0 +1,18 @@

+

+

+import CreateHypertableBlockchain from "versionContent/_partials/_create-hypertable-blockchain.mdx";

+import AddDataBlockchain from "versionContent/_partials/_add-data-blockchain.mdx";

+import IntegrationPrereqs from "versionContent/_partials/_integration-prereqs.mdx";

+

+# Ingest data into a $SERVICE_LONG

+

+This tutorial uses a dataset that contains Bitcoin blockchain data for

+the past five days, in a hypertable named `transactions`.

+

+## Prerequisites

+

+

+

+

+

+

diff --git a/_partials/_use-case-transport-geolocation.md b/_partials/_use-case-transport-geolocation.md

new file mode 100644

index 0000000000..8156143720

--- /dev/null

+++ b/_partials/_use-case-transport-geolocation.md

@@ -0,0 +1,88 @@

+

+### Setup your data for geospatial queries

+

+To add geospatial analysis to your ride count visualization, you need geospatial data to work out which trips

+originated where. As $TIMESCALE_DB is compatible with all PostgreSQL extensions, use [PostGIS][postgis] to slice

+data by time and location.

+

+

+

+1. Connect to your [$SERVICE_LONG][in-console-editors] and add the PostGIS extension:

+

+ ```sql

+ CREATE EXTENSION postgis;

+ ```

+

+1. Add geometry columns for pick up and drop off locations:

+

+ ```sql

+ ALTER TABLE rides ADD COLUMN pickup_geom geometry(POINT,2163);

+ ALTER TABLE rides ADD COLUMN dropoff_geom geometry(POINT,2163);

+ ```

+

+1. Convert the latitude and longitude points into geometry coordinates that work with PostGIS.

+

+ ```sql

+ UPDATE rides SET pickup_geom = ST_Transform(ST_SetSRID(ST_MakePoint(pickup_longitude,pickup_latitude),4326),2163),

+ dropoff_geom = ST_Transform(ST_SetSRID(ST_MakePoint(dropoff_longitude,dropoff_latitude),4326),2163);

+ ```

+ This updates 10,906,860 rows of data on both columns, it takes a while. Coffee is your friend.

+

+

+

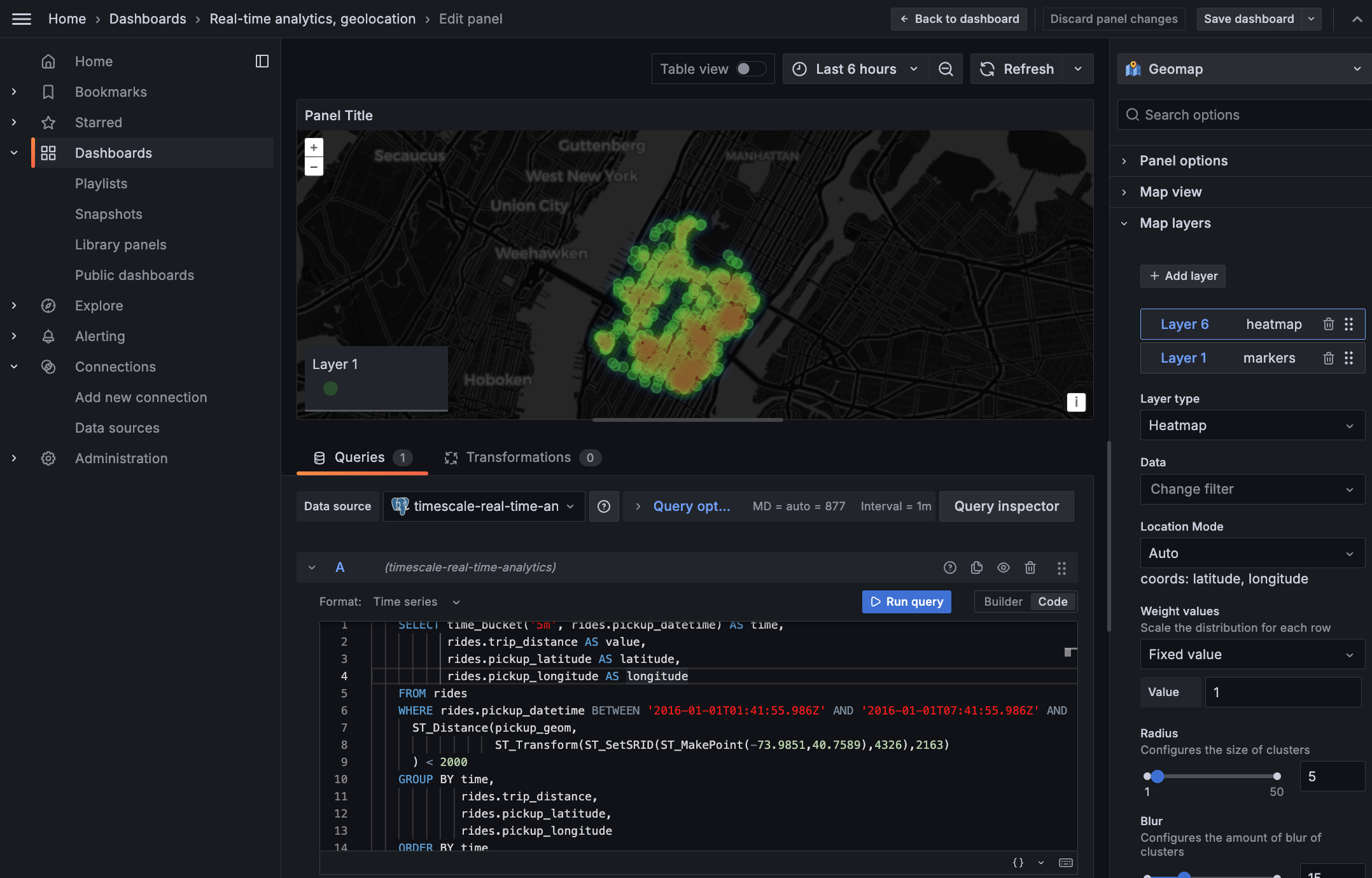

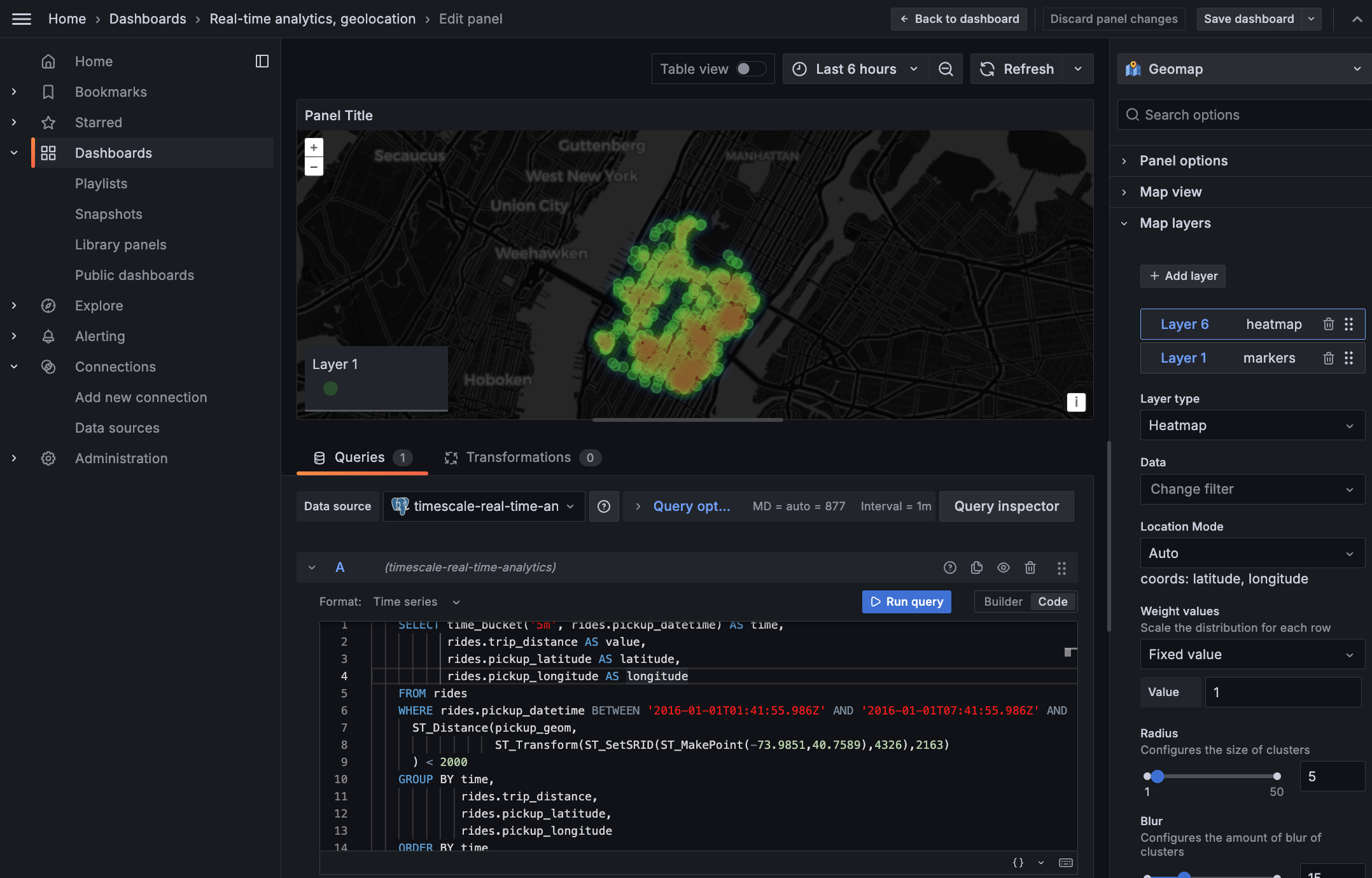

+### Visualize the area where you can make the most money

+

+In this section you visualize a query that returns rides longer than 5 miles for

+trips taken within 2 km of Times Square. The data includes the distance travelled and

+is `GROUP BY` `trip_distance` and location so that Grafana can plot the data properly.

+

+This enables you to see where a taxi driver is most likely to pick up a passenger who wants a longer ride,

+and make more money.

+

+

+

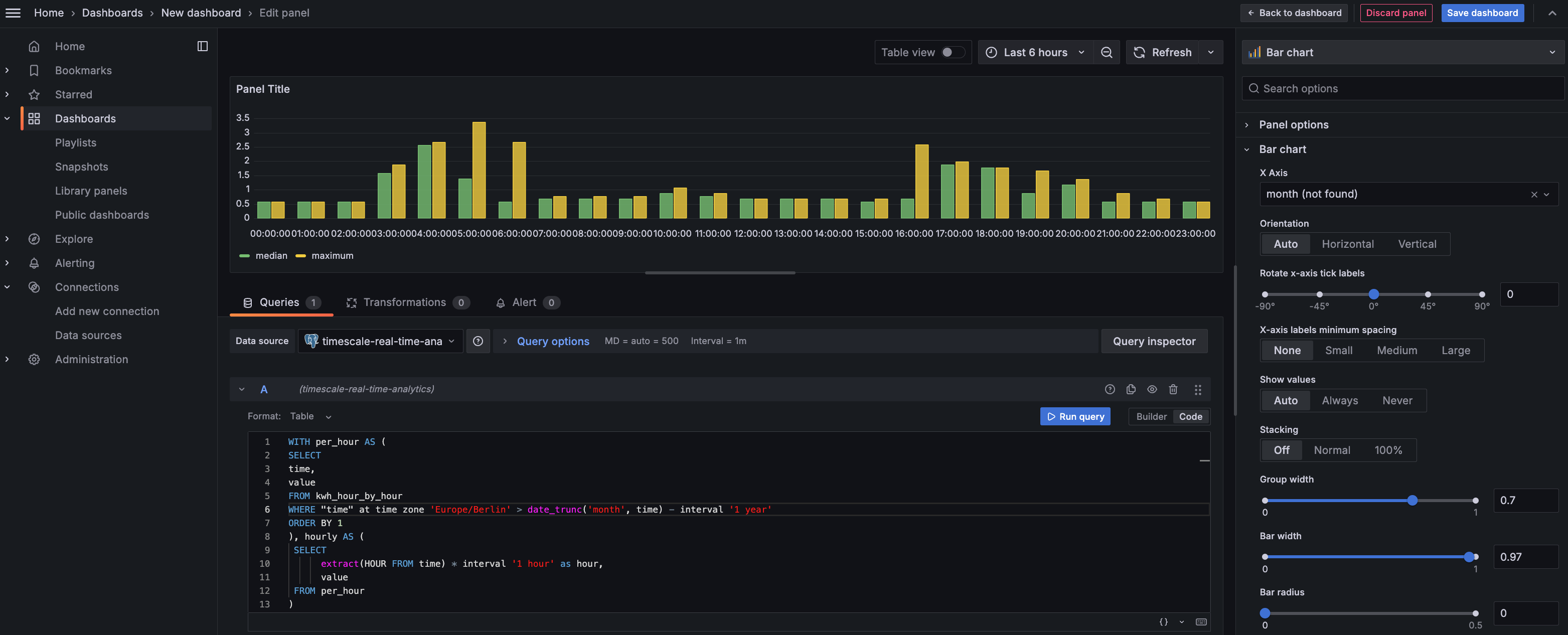

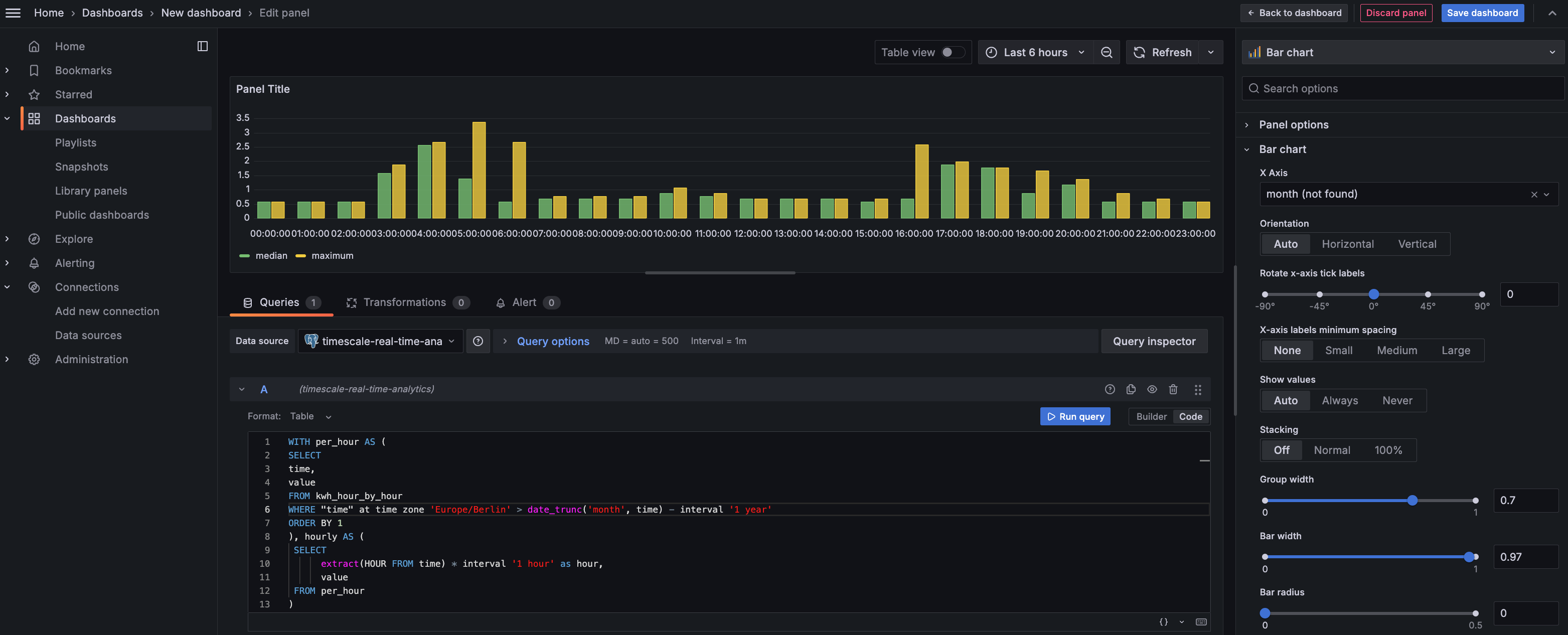

+1. **Create a geolocalization dashboard**

+

+ 1. In Grafana, create a new dashboard that is connected to your $SERVICE_LONG data source with a Geomap

+ visualization.

+

+ 1. In the `Queries` section, select `Code`, then select the Time series `Format`.

+

+

+

+ 1. To find rides longer than 5 miles in Manhattan, paste the following query:

+

+ ```sql

+ SELECT time_bucket('5m', rides.pickup_datetime) AS time,

+ rides.trip_distance AS value,

+ rides.pickup_latitude AS latitude,

+ rides.pickup_longitude AS longitude

+ FROM rides

+ WHERE rides.pickup_datetime BETWEEN '2016-01-01T01:41:55.986Z' AND '2016-01-01T07:41:55.986Z' AND

+ ST_Distance(pickup_geom,

+ ST_Transform(ST_SetSRID(ST_MakePoint(-73.9851,40.7589),4326),2163)

+ ) < 2000

+ GROUP BY time,

+ rides.trip_distance,

+ rides.pickup_latitude,

+ rides.pickup_longitude

+ ORDER BY time

+ LIMIT 500;

+ ```

+ You see a world map with a dot on New York.

+ 1. Zoom into your map to see the visualization clearly.

+

+1. **Customize the visualization**

+

+ 1. In the Geomap options, under `Map Layers`, click `+ Add layer` and select `Heatmap`.

+ You now see the areas where a taxi driver is most likely to pick up a passenger who wants a

+ longer ride, and make more money.

+

+

+

+

+

+

+

+[in-console-editors]: /getting-started/:currentVersion:/run-queries-from-console/

+[postgis]: http://postgis.net/

diff --git a/_partials/_where-to-next.md b/_partials/_where-to-next.md

index 5d1124a297..62a9c7ff4c 100644

--- a/_partials/_where-to-next.md

+++ b/_partials/_where-to-next.md

@@ -1,7 +1,11 @@

-Now you have TimescaleDB running, have a look at the:

+What next? [Try the main features offered by Timescale][try-timescale-features], see the [use case tutorials][tutorials],

+interact with the data in your $SERVICE_LONG using [your favorite programming language][connect-with-code], integrate

+your $SERVICE_LONG with a range of [third-party tools][integrations], plain old [Use Timescale][use-timescale], or dive

+into [the API][use-the-api].

-* [Tutorials][tutorials]: walk through a variety of business scenarios using example datasets.

-* [Use Timescale][tsdb-docs]: browse the features available with TimescaleDB.

-

-[tsdb-docs]: /use-timescale/:currentVersion:/

[tutorials]: /tutorials/:currentVersion:/

+[connect-with-code]: /quick-start/:currentVersion:/

+[integrations]: /use-timescale/:currentVersion:/integrations/

+[use-the-api]: /api/:currentVersion:/

+[use-timescale]: /use-timescale/:currentVersion:/

+[try-timescale-features]: /getting-started/:currentVersion:/try-key-features-timescale-products/

diff --git a/api/compression/add_compression_policy.md b/api/compression/add_compression_policy.md

index 440585e6de..7a154443c3 100644

--- a/api/compression/add_compression_policy.md

+++ b/api/compression/add_compression_policy.md

@@ -11,10 +11,6 @@ api:

import Deprecated2180 from "versionContent/_partials/_deprecated_2_18_0.mdx";

-import Deprecated2180 from "versionContent/_partials/_deprecated_2_18_0.mdx";

-

-import Deprecated2180 from "versionContent/_partials/_deprecated_2_18_0.mdx";

-

# add_compression_policy()

Replaced by add_columnstore_policy().

diff --git a/api/compression/chunk_compression_stats.md b/api/compression/chunk_compression_stats.md

index 25e9e80229..6b7014bf3b 100644

--- a/api/compression/chunk_compression_stats.md

+++ b/api/compression/chunk_compression_stats.md

@@ -11,10 +11,6 @@ api:

import Deprecated2180 from "versionContent/_partials/_deprecated_2_18_0.mdx";

-import Deprecated2180 from "versionContent/_partials/_deprecated_2_18_0.mdx";

-

-import Deprecated2180 from "versionContent/_partials/_deprecated_2_18_0.mdx";

-

# chunk_compression_stats() Community

Replaced by chunk_columnstore_stats().

diff --git a/api/compression/decompress_chunk.md b/api/compression/decompress_chunk.md

index cfa3aa8080..4cca76dd76 100644

--- a/api/compression/decompress_chunk.md

+++ b/api/compression/decompress_chunk.md

@@ -10,10 +10,6 @@ api:

import Deprecated2180 from "versionContent/_partials/_deprecated_2_18_0.mdx";

-import Deprecated2180 from "versionContent/_partials/_deprecated_2_18_0.mdx";

-

-import Deprecated2180 from "versionContent/_partials/_deprecated_2_18_0.mdx";

-

# decompress_chunk() Community

Replaced by convert_to_rowstore().

diff --git a/api/compression/hypertable_compression_stats.md b/api/compression/hypertable_compression_stats.md

index 93acde5677..39f141ff76 100644

--- a/api/compression/hypertable_compression_stats.md

+++ b/api/compression/hypertable_compression_stats.md

@@ -11,10 +11,6 @@ api:

import Deprecated2180 from "versionContent/_partials/_deprecated_2_18_0.mdx";

-import Deprecated2180 from "versionContent/_partials/_deprecated_2_18_0.mdx";

-

-import Deprecated2180 from "versionContent/_partials/_deprecated_2_18_0.mdx";

-

# hypertable_compression_stats() Community

Replaced by hypertable_columnstore_stats().

diff --git a/getting-started/index.md b/getting-started/index.md

index a607cbebdc..07d039ec6e 100644

--- a/getting-started/index.md

+++ b/getting-started/index.md

@@ -16,21 +16,24 @@ import WhereNext from "versionContent/_partials/_where-to-next.mdx";

This section shows you how to:

-1. [Create and connect to a Timescale service][services-create]

-1. [Run queries from Timescale Console][run-queries-from-console]

-1. [Ingest some real financial data into your database][ingest-data]

-1. [Construct some interesting queries][queries] Try out some live queries

-1. [Create and query a continuous aggregate][caggs]

+1. [Create and connect to a $SERVICE_LONG][services-create]: choose the capabilities that match your business and

+ engineering needs on $COMPANY's cloud-based PostgreSQL platform.

+1. [Run queries from $CONSOLE][run-queries-from-console]: securely interact your data in the $CONSOLE UI.

+1. [Try the main features in Timescale products][test-drive]: rapidly implement the features in $CLOUD_LONG that

+ enable you to ingest and query data faster while keeping prices low.

-Already know the basics? See the

-[more advanced tutorials][tutorials], or see how to

-[Use Timescale][use-timescale].

+What next? See the [use case tutorials][tutorials], interact with the data in your $SERVICE_LONG using

+[your favorite programming language][connect-with-code], integrate your $SERVICE_LONG with a range of

+[third-party tools][integrations], plain old [Use Timescale][use-timescale], or dive into [the API][use-the-api].

[tutorials]: /tutorials/:currentVersion:/

+[connect-with-code]: /quick-start/:currentVersion:/

+[integrations]: /use-timescale/:currentVersion:/integrations/

+[use-the-api]: /api/:currentVersion:/

[use-timescale]: /use-timescale/:currentVersion:/

[services-create]: /getting-started/:currentVersion:/services#create-your-timescale-account

[services-connect]: /getting-started/:currentVersion:/services/#connect-to-your-service

+[test-drive]: /getting-started/:currentVersion:/try-key-features-timescale-products/

[run-queries-from-console]: /getting-started/:currentVersion:/run-queries-from-console/

-[ingest-data]: /getting-started/:currentVersion:/time-series-data/

-[queries]: /getting-started/:currentVersion:/queries/

-[caggs]: /getting-started/:currentVersion:/aggregation/

+[ingest-data]: /getting-started/:currentVersion:/try-key-features-timescale-products/#optimize-time-series-data-in-hypertables

+

diff --git a/getting-started/page-index/page-index.js b/getting-started/page-index/page-index.js

index ef51f973f8..6ec2fac83e 100644

--- a/getting-started/page-index/page-index.js

+++ b/getting-started/page-index/page-index.js

@@ -15,29 +15,9 @@ module.exports = [

excerpt: "Run your queries securely from inside Timescale Console",

},

{

- title: "Tables and hypertables",

- href: "tables-hypertables",

- excerpt: "Create tables and hypertables for your data",

- },

- {

- title: "Time-series data",

- href: "time-series-data",

- excerpt: "Add time-series data to your database",

- },

- {

- title: "Queries",

- href: "queries",

- excerpt: "Query your data using full SQL",

- },

- {

- title: "Aggregation",

- href: "aggregation",

- excerpt: "Query aggregated data, and create a continuous aggregate",

- },

- {

- title: "Next steps",

- href: "next-steps",

- excerpt: "Get even more from your Timescale database",

+ title: "Try out key features of Timescale products",

+ href: "try-key-features-timescale-products",

+ excerpt: "Improve database performance with Hypertables, time bucketing, continuous aggregates, compression, data tiering, and high availability",

},

],

},

diff --git a/getting-started/run-queries-from-console.md b/getting-started/run-queries-from-console.md

index 51ea1b6b80..955fab154b 100644

--- a/getting-started/run-queries-from-console.md

+++ b/getting-started/run-queries-from-console.md

@@ -6,6 +6,8 @@ layout_components: [next_prev_large]

content_group: Getting started

---

+import WhereNext from "versionContent/_partials/_where-to-next.mdx";

+

# Run your queries from Timescale Console

As Timescale Cloud is based on PostgreSQL, you can use lots of [different tools][integrations] to

@@ -226,6 +228,7 @@ To use SQL editor with Timescale:

details.

+

[readreplica]: /use-timescale/:currentVersion:/ha-replicas/read-scaling/

[run-popsql]: /getting-started/:currentVersion:/run-queries-from-console/#data-mode

diff --git a/getting-started/services.md b/getting-started/services.md

index 1dfb3efa87..9ca39ab1a1 100644

--- a/getting-started/services.md

+++ b/getting-started/services.md

@@ -11,6 +11,7 @@ import Connect from "versionContent/_partials/_cloud-connect.mdx";

import CreateAHypertable from "versionContent/_partials/_create-hypertable.mdx";

import ServiceOverview from "versionContent/_partials/_service-overview.mdx";

import CloudIntro from "versionContent/_partials/_cloud-intro.mdx";

+import WhereNext from "versionContent/_partials/_where-to-next.mdx";

# Create your first $SERVICE_LONG

@@ -59,11 +60,10 @@ A $SERVICE_LONG comes with access control to its data. To be able to run queries

-## Create a hypertable

+And that is it, you are up and running. Enjoy developing with $COMPANY.

-

+

-And that is it, you are up and running. Enjoy developing with $CLOUD_LONG.

[tsc-portal]: https://console.cloud.timescale.com/

[services-how-to]: /use-timescale/:currentVersion:/services/

diff --git a/getting-started/tables-hypertables.md b/getting-started/tables-hypertables.md

index 43589aaf13..f336016e83 100644

--- a/getting-started/tables-hypertables.md

+++ b/getting-started/tables-hypertables.md

@@ -1,6 +1,6 @@

---

title: Tables and hypertables

-excerpt: Hypertables are PostgreSQL tables designed to boost the performance of your real-time analytical queries. Create a table and then convert it into a hypertable in Timescale Console

+excerpt: Create tables and hypertables in your Timescale account

products: [cloud]

keywords: [hypertables, create]

layout_components: [next_prev_large]

@@ -13,17 +13,6 @@ import HypertableIntro from "versionContent/_partials/_hypertables-intro.mdx";

-Databases are made up of tables that contain your data. In PostgreSQL, these

-tables are relational, so the data in one table relates to the data in another

-table. In Timescale, you use regular PostgreSQL relational tables, in addition

-to special time-series hypertables.

-

-Hypertables are designed specifically for time-series data, so they have a few

-special qualities that makes them different to a regular PostgreSQL table. A

-hypertable is always partitioned on time, but can also be partitioned on

-additional columns as well. The other special thing about hypertables is that

-they are broken down into smaller tables called chunks.

-

In this section, you create a hypertable for time-series data, and regular

PostgreSQL tables for relational data. You also create an index on your

hypertable, which isn't required, but can help your queries run more efficiently.

diff --git a/getting-started/try-key-features-timescale-products.md b/getting-started/try-key-features-timescale-products.md

new file mode 100644

index 0000000000..842cf1afb6

--- /dev/null

+++ b/getting-started/try-key-features-timescale-products.md

@@ -0,0 +1,392 @@

+---

+title: Try out key features of Timescale products

+excerpt: Improve database performance with hypertables, time bucketing, compression and continuous aggregates.

+products: [cloud]

+content_group: Getting started

+---

+

+import HASetup from 'versionContent/_partials/_high-availability-setup.mdx';

+import IntegrationPrereqs from "versionContent/_partials/_integration-prereqs.mdx";

+

+# Try out key features of Timescale products

+

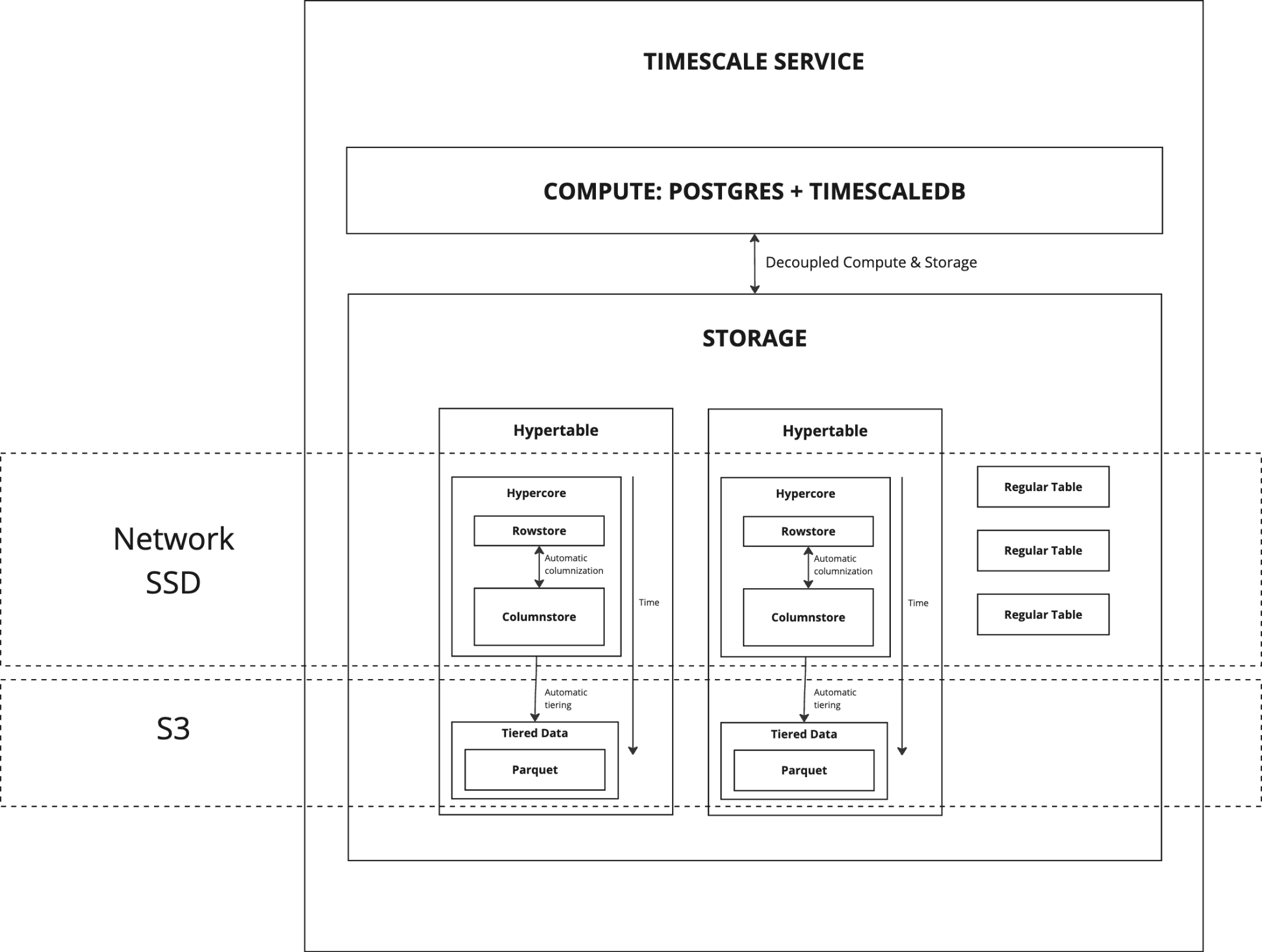

+$CLOUD_LONG scales PostgreSQL to ingest and query vast amounts of live data. $CLOUD_LONG

+provides a range of features and optimizations that supercharge your queries while keeping the

+costs down. For example:

+* The hypercore row-columnar engine makes queries up to 350x faster, ingests 44% faster, and reduces storage by 90%.

+* Tiered storage seamlessly moves your data from high performance storage for frequently access data to low cost bottomless storage for rarely accessed data.

+

+The following figure shows the main features and tiered data in $CLOUD_LONG:

+

+

+

+This page gives shows you how to rapidly implement the features in $CLOUD_LONG that enable you to

+ingest and query data faster while keeping prices low.

+

+## Prerequisites

+

+

+

+## Optimize time-series data in hypertables

+

+Time-series data represents how a system, process, or behavior changes over time. Hypertables are PostgreSQL tables

+that help you improve insert and query performance by automatically partition your data by time. Each hypertable

+is made up of child tables called chunks. Each chunk is assigned a range of time, and only

+contains data from that range. You can also tune hypertables to increase performance

+even more.

+

+

+

+Hypertables exist alongside regular PostgreSQL tables.

+You use regular PostgreSQL tables for relational data, and interact with hypertables

+and regular PostgreSQL tables in the same way.

+

+This section shows you how to create regular tables and hypertables, and import

+relational and time-series data from external files.

+

+

+

+1. **Import some time-series data into your hypertable**

+

+ 1. Unzip [real_time_stock_data.zip](https://assets.timescale.com/docs/downloads/get-started/real_time_stock_data.zip) to a ``.

+

+ This test dataset contains second-by-second stock-trade data for the top 100 most-traded symbols

+ and a regular table of company symbols and company names.

+

+ To import up to 100GB of data directly from your current PostgreSQL based database,

+ [migrate with downtime][migrate-with-downtime] using native PostgreSQL tooling. To seamlessly import 100GB-10TB+

+ of data, use the [live migration][migrate-live] tooling supplied by $COMPANY. To add data from non-PostgreSQL

+ data sources, see [Import and ingest data][data-ingest].

+

+ 1. Upload data from the CSVs to your $SERVICE_SHORT:

+

+

+

+

+

+ The $CONSOLE data upload creates the tables for you from the data you are uploading:

+ 1. In [$CONSOLE][portal-ops-mode], select the service to add data to, then click **Actions** > **Upload CSV**.

+ 1. Drag `/tutorial_sample_tick.csv` to `Upload .CSV` and change `New table name`, to `stocks_real_time`.

+ 1. Enable `hypertable partition` for the `time` column and click `Upload CSV`.

+ The upload wizard creates a hypertable containing the data from the CSV file.

+ 1. When the data is uploaded, close `Upload .CSV`.

+ If you want to have a quick look at your data, press `Run` .

+ 1. Repeat the process with `/tutorial_sample_company.csv` and rename to `company`.

+ There is no time-series data in this table, so you don't see the `hypertable partition` option.

+

+

+

+

+

+ 1. In Terminal, navigate to `` and connect to your $SERVICE_SHORT.

+ ```bash

+ psql -d "postgres://:@:/"

+ ```

+ The connection information for a $SERVICE_SHORT is available in the file you downloaded when you created it.

+

+ 2. Create tables for the data to import

+

+ - For the time-series data:

+ 1. In your sql client, create a normal PostgreSQL table:

+

+ ```sql

+ CREATE TABLE stocks_real_time (

+ time TIMESTAMPTZ NOT NULL,

+ symbol TEXT NOT NULL,

+ price DOUBLE PRECISION NULL,

+ day_volume INT NULL

+ );

+ ```

+ 1. Convert `stocks_real_time` to a hypertable:

+ ```sql

+ SELECT create_hypertable('stocks_real_time', by_range('time'));

+ ```

+ To more fully understand how hypertables work, and how to optimize them for performance by

+ tuning chunk intervals and enabling chunk skipping, see [the hypertables documentation][hypertables-section].

+

+ - For the relational data:

+

+ In your sql client, create a normal PostgreSQL table:

+ ```sql

+ CREATE TABLE company (

+ symbol TEXT NOT NULL,

+ name TEXT NOT NULL

+ );

+ ```

+

+ 3. Upload the dataset to your $SERVICE_SHORT

+ ```sql

+ \COPY stocks_real_time from './tutorial_sample_tick.csv' DELIMITER ',' CSV HEADER;

+ \COPY company from './tutorial_sample_company.csv' DELIMITER ',' CSV HEADER;

+ ```

+

+

+

+

+

+ To more fully understand how hypertables work, and how to optimize them for performance by

+ tuning chunk intervals and enabling chunk skipping, see [the hypertables documentation][hypertables-section].

+

+1. **Have a quick look at your data**

+

+ You query hypertables in exactly the same way as you would a relational PostgreSQL table.

+ Use one of the following SQL editors to run a query and see the data you uploaded:

+ - **Data mode**: write queries, visualize data, and share your results in [$CONSOLE][portal-data-mode] for all your $SERVICE_LONGs.

+ - **SQL editor**: write, fix, and organize SQL faster and more accurately in [$CONSOLE][portal-ops-mode] for a $SERVICE_LONG.

+ - **psql**: easily run queries on your $SERVICE_LONGs or self-hosted TimescaleDB deployment from Terminal.

+

+

+

+

+

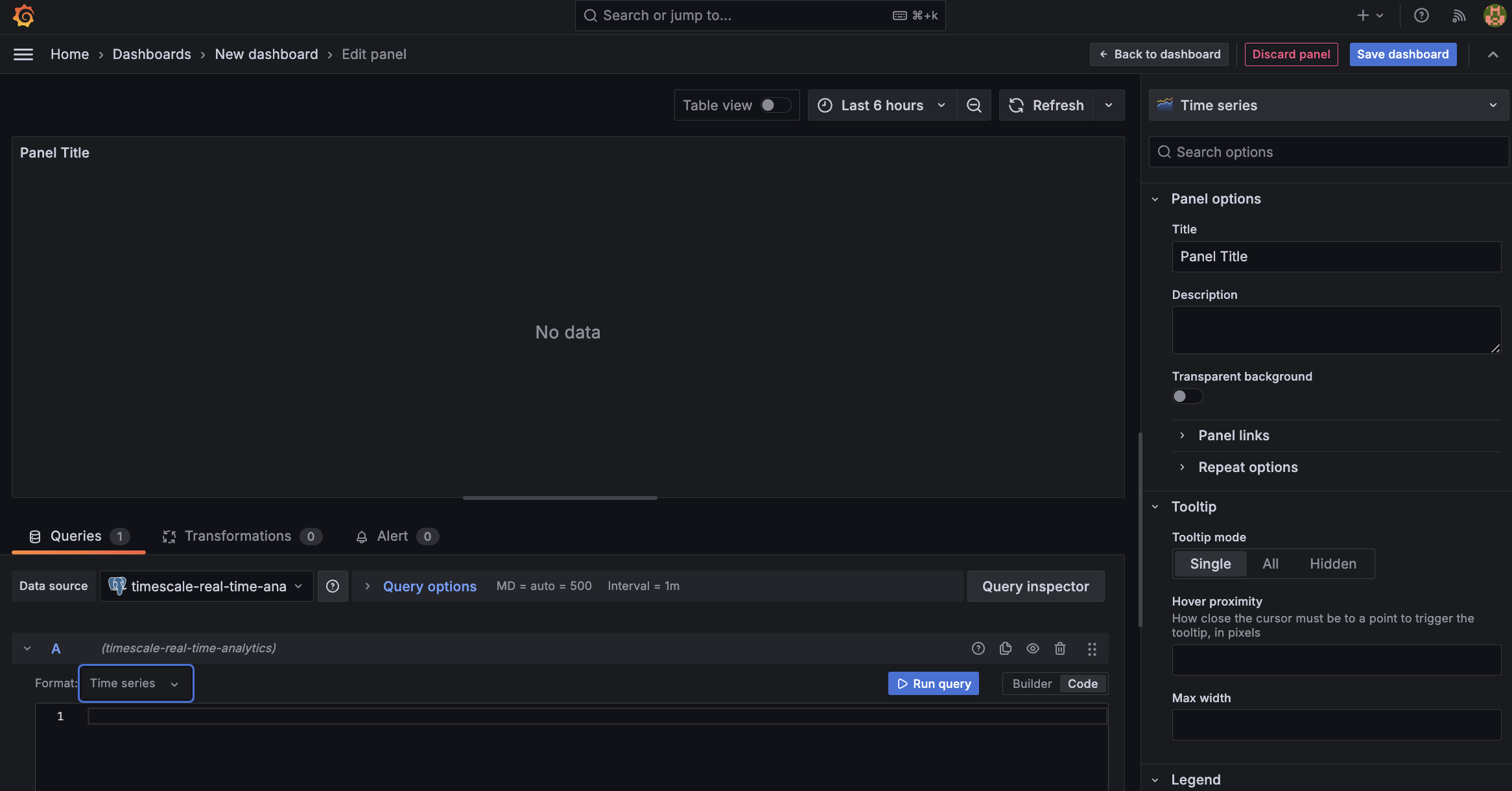

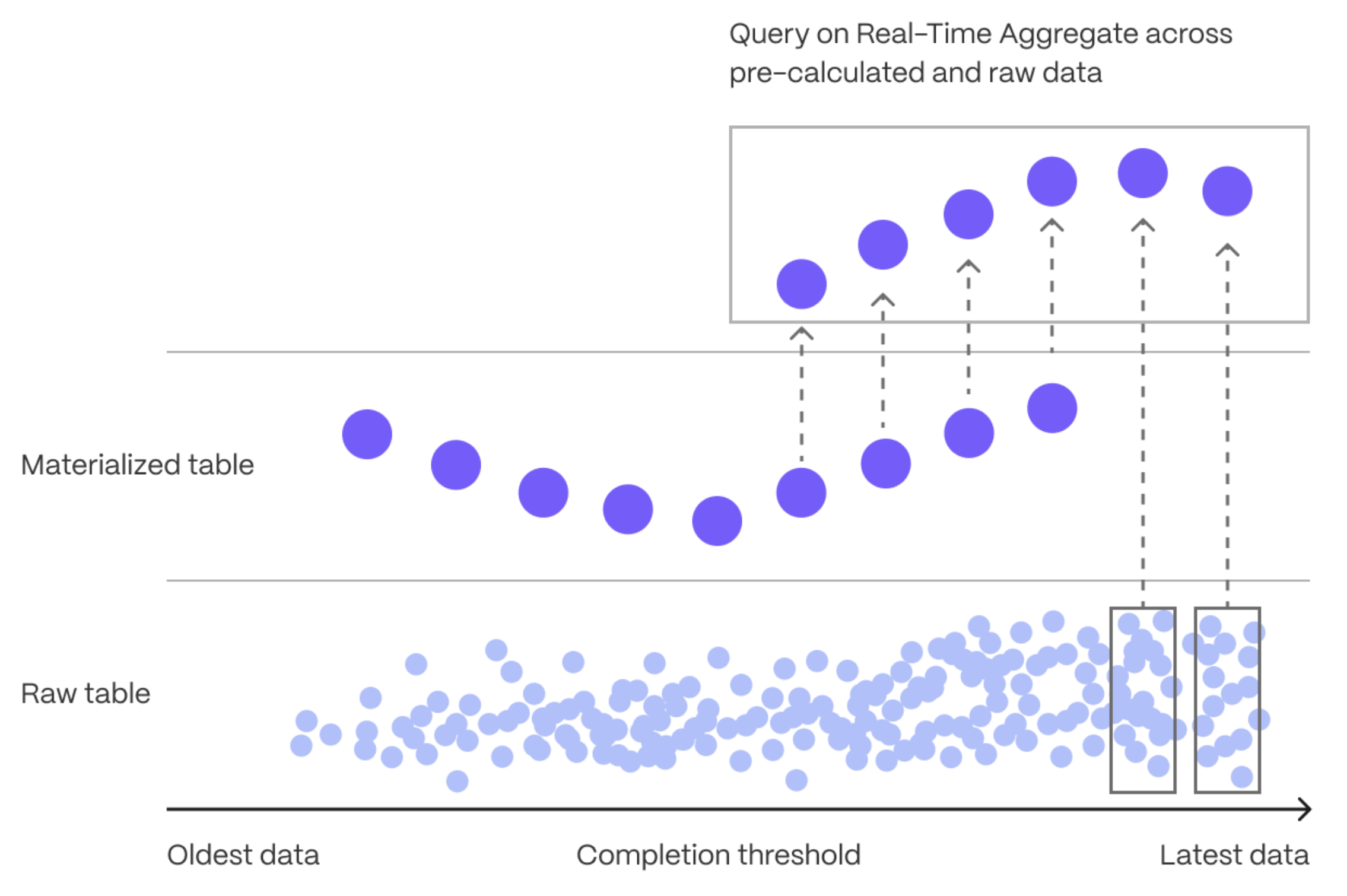

+## Write fast analytical queries on frequently access data using time buckets and continuous aggregates

+

+Aggregation is a way of combing data to get insights from it. Average, sum, and count are all

+example of simple aggregates. However, with large amounts of data aggregation slows things down, quickly.

+Continuous aggregates are a kind of hypertable that is refreshed automatically in

+the background as new data is added, or old data is modified. Changes to your dataset are tracked,

+and the hypertable behind the continuous aggregate is automatically updated in the background.

+

+

+

+You create continuous aggregates on uncompressed data in high-performance storage. They continue to work

+on [data in the columnstore][test-drive-enable-compression]

+and [rarely accessed data in tiered storage][test-drive-tiered-storage]. You can even

+create [continuous aggregates on top of your continuous aggregates][hierarchical-caggs].

+

+You use time buckets to create a continuous aggregate. Time buckets aggregate data in hypertables by time

+interval. For example, a 5-minute, 1-hour, or 3-day bucket. The data grouped in a time bucket use a single

+timestamp. Continuous aggregates minimize the number of records that you need to look up to perform your

+query.

+

+This section show you how to run fast analytical queries using time buckets and continuous aggregates in

+$CONSOLE. You can also do this using psql.

+

+

+

+

+

+

+

+1. **In [$CONSOLE][portal-ops-mode], select the service you uploaded data to, then click `SQL Editor`**.

+

+1. **Create a continuous aggregate**

+

+ For a continuous aggregate, data grouped using a time bucket is stored in a

+ PostgreSQL `MATERIALIZED VIEW` in a hypertable. `timescaledb.continuous` ensures that this data

+ is always up to date.

+ In your SQL editor, use the following code to create a continuous aggregate on the real time data in

+ the `stocks_real_time` table:

+

+ ```sql

+ CREATE MATERIALIZED VIEW stock_candlestick_daily

+ WITH (timescaledb.continuous) AS

+ SELECT

+ time_bucket('1 day', "time") AS day,

+ symbol,

+ max(price) AS high,

+ first(price, time) AS open,

+ last(price, time) AS close,

+ min(price) AS low

+ FROM stocks_real_time srt

+ GROUP BY day, symbol;

+ ```

+

+ This continuous aggregate creates the [candlestick chart][charts] data you use to visualize

+ the price change of an asset.

+

+1. **Create a policy to refresh the view every hour**

+

+ ```sql

+ SELECT add_continuous_aggregate_policy('stock_candlestick_daily',

+ start_offset => INTERVAL '3 weeks',

+ end_offset => INTERVAL '24 hours',

+ schedule_interval => INTERVAL '3 hours');

+ ```

+

+1. **Have a quick look at your data**

+

+ You query continuous aggregates exactly the same way as your other tables. To query the `stock_candlestick_daily`

+ continuous aggregate for all stocks:

+

+

+

+

+

+

+

+

+

+

+

+

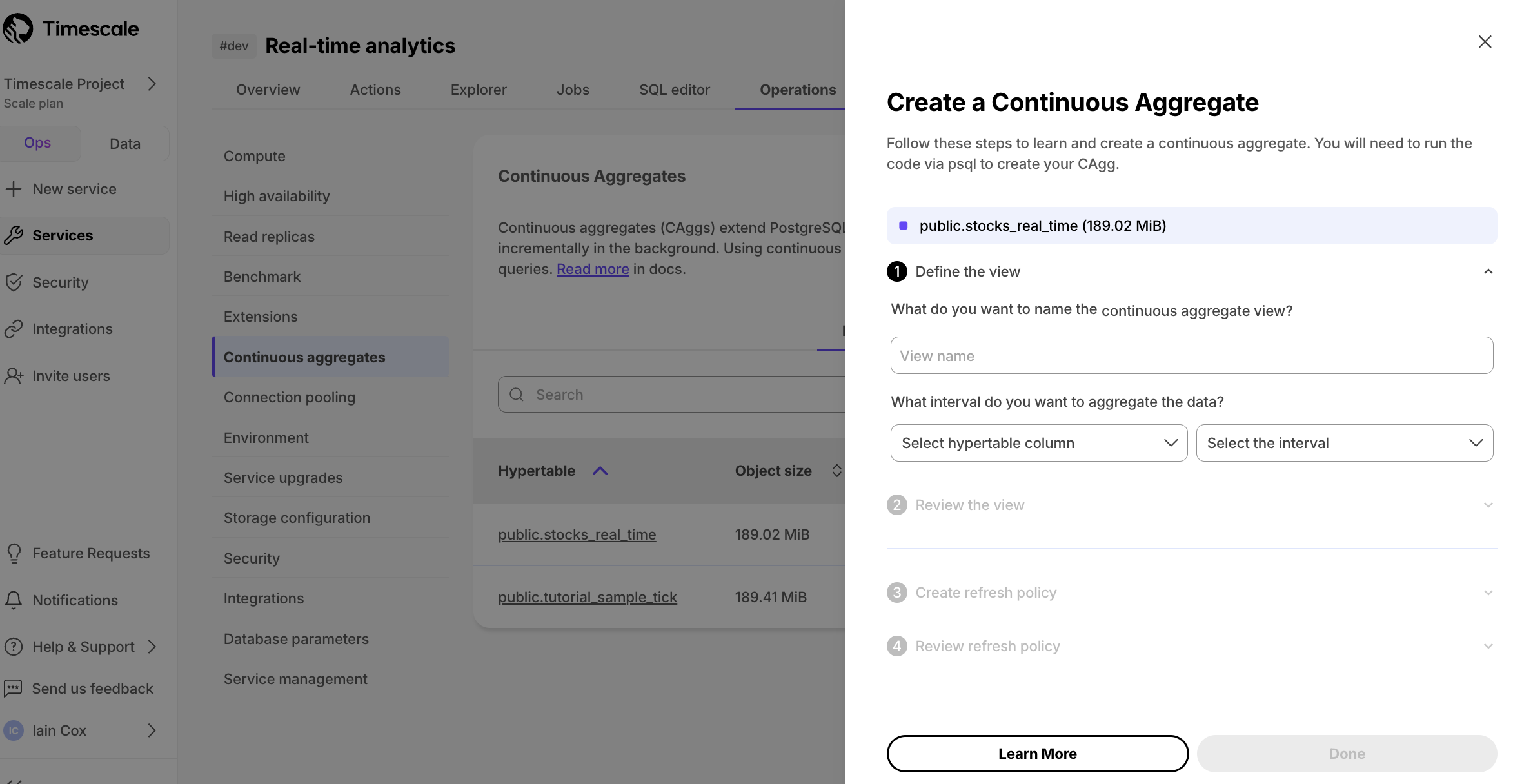

+1. **In [$CONSOLE][portal-ops-mode], select the service you uploaded data to**.

+1. **Click `Operations` > `Continuous aggregates`, select `stocks_real_time`, then click `Create continuous aggregate`**.

+

+1. **Create a view called `stock_candlestick_daily` on the `time` column with an interval of `1 day`, then click `Next step`**.

+1. **Update the view SQL with the following functions, then click `Run`**

+ ```sql

+ CREATE MATERIALIZED VIEW stock_candlestick_daily

+ WITH (timescaledb.continuous) AS

+ SELECT

+ time_bucket('1 day', "time") AS bucket,

+ symbol,

+ max(price) AS high,

+ first(price, time) AS open,

+ last(price, time) AS close,

+ min(price) AS low

+ FROM "public"."stocks_real_time" srt

+ GROUP BY bucket, symbol;

+ ```

+1. **When the view is created, click `Next step`**

+1. **Define a refresh policy with the following values, then click `Next step`**

+ - `Set the start offset`: `3 weeks`

+ - `Set the end offset`: `24 hours`

+ - `Set the schedule interval`: `3 hours`

+1. **Click `Create continuous aggregate`, then click `Run`**

+

+$CLOUD_LONG creates the continuous aggregate and displays the aggregate ID in $CONSOLE. Click `DONE` to close the wizard.

+

+

+

+

+

+

+

+To see the change in terms of query time and data returned between a regular query and

+a continuous aggregate, run the query part of the continuous aggregate

+( `SELECT ...GROUP BY day, symbol;` ) and compare the results.

+

+## Prepare your data for real-time analytics with hypercore

+

+Hypercore is the Timescale hybrid row-columnar storage engine, designed specifically for real-time analytics and

+powered by time-series data. The advantage of Hypercore is its ability to seamlessly switch between row-oriented and

+column-oriented storage. This flexibility enables Timescale Cloud to deliver the best of both worlds, solving the key

+challenges in real-time analytics.

+

+When you convert chunks from the rowstore to the columnstore, multiple records are grouped into a single row.

+The columns of this row hold an array-like structure that stores all the data. Because a single row takes up less disk

+space, you can reduce your chunk size by more than 90%, and can also speed up your queries. This saves on storage costs,

+and keeps your queries operating at lightning speed.

+

+Best practice is to compress data that is no longer needed for highest performance queries, but is still accessed regularly. For example, last week's stock

+market data.

+

+

+

+1. **Enable hypercore on a hypertable**

+

+ Create a [job][job] that automatically moves chunks in a hypertable to the columnstore at a specific time interval.

+

+ ```sql

+ ALTER TABLE stocks_real_time SET (

+ timescaledb.enable_columnstore = true,

+ timescaledb.segmentby = 'symbol');

+ ```

+

+1. **Add a policy to convert chunks to the columnstore at a specific time interval**

+

+ For example, 60 days after the data was added to the table:

+ ``` sql

+ CALL add_columnstore_policy('stocks_real_time', after => INTERVAL '60d');

+ ```

+ See [add_columnstore_policy][add_columnstore_policy].

+

+

+

+

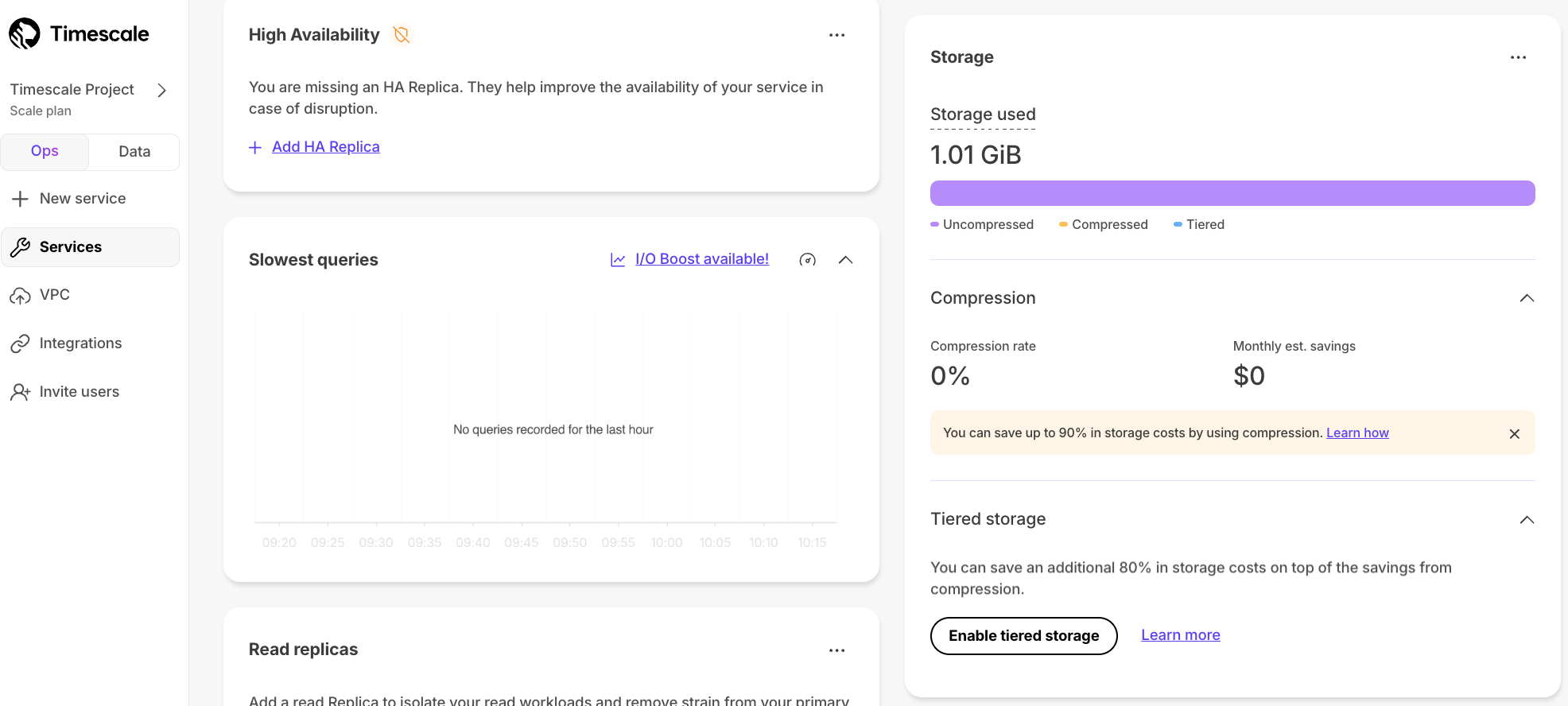

+## Reduce storage charges for rarely accessed data using tiered storage

+

+In the previous sections, you used continuous aggregates to make fast analytical queries, and

+compression to reduce storage costs on frequently accessed data. To reduce storage costs even more,

+you create tiering policies to move rarely accessed data to the object store. The object store is

+low-cost bottomless data storage built on Amazon S3. However, no matter the tier, you can

+[query your data when you need][querying-tiered-data]. $CLOUD_LONG seamlessly accesses the correct storage

+tier and generates the response.

+

+Data tiering is available in the [scale and enterprise][pricing-plans] pricing plans for $CLOUD_LONG.

+

+To setup data tiering:

+

+

+

+1. **Enable data tiering**

+

+ 1. In [$CONSOLE][portal-ops-mode], select the service to modify.

+

+ You see the `Overview` section.

+

+ 1. Scroll down, then click `Enable tiered storage`.

+

+

+

+ When tiered storage is enabled, you see the amount of data in the tiered object storage.

+

+1. **Set the time interval when data is tiered**

+

+ In $CONSOLE, click `SQL Editor`, then enable data tiering on a hypertable with the following query:

+ ```sql

+ SELECT add_tiering_policy('stock_candlestick_daily', INTERVAL '3 weeks');

+ ```

+

+1. **Qeury tiered data**

+

+ You enable reads from tiered data for each query, for a session or for all future

+ sessions. To run a single query on tiered data:

+

+ ```sql

+ set timescaledb.enable_tiered_reads = true; SELECT * FROM stocks_real_time srt LIMIT 10; set timescaledb.enable_tiered_reads = false;

+ ```

+ For more information, see [Querying tiered data][querying-tiered-data].

+

+

+

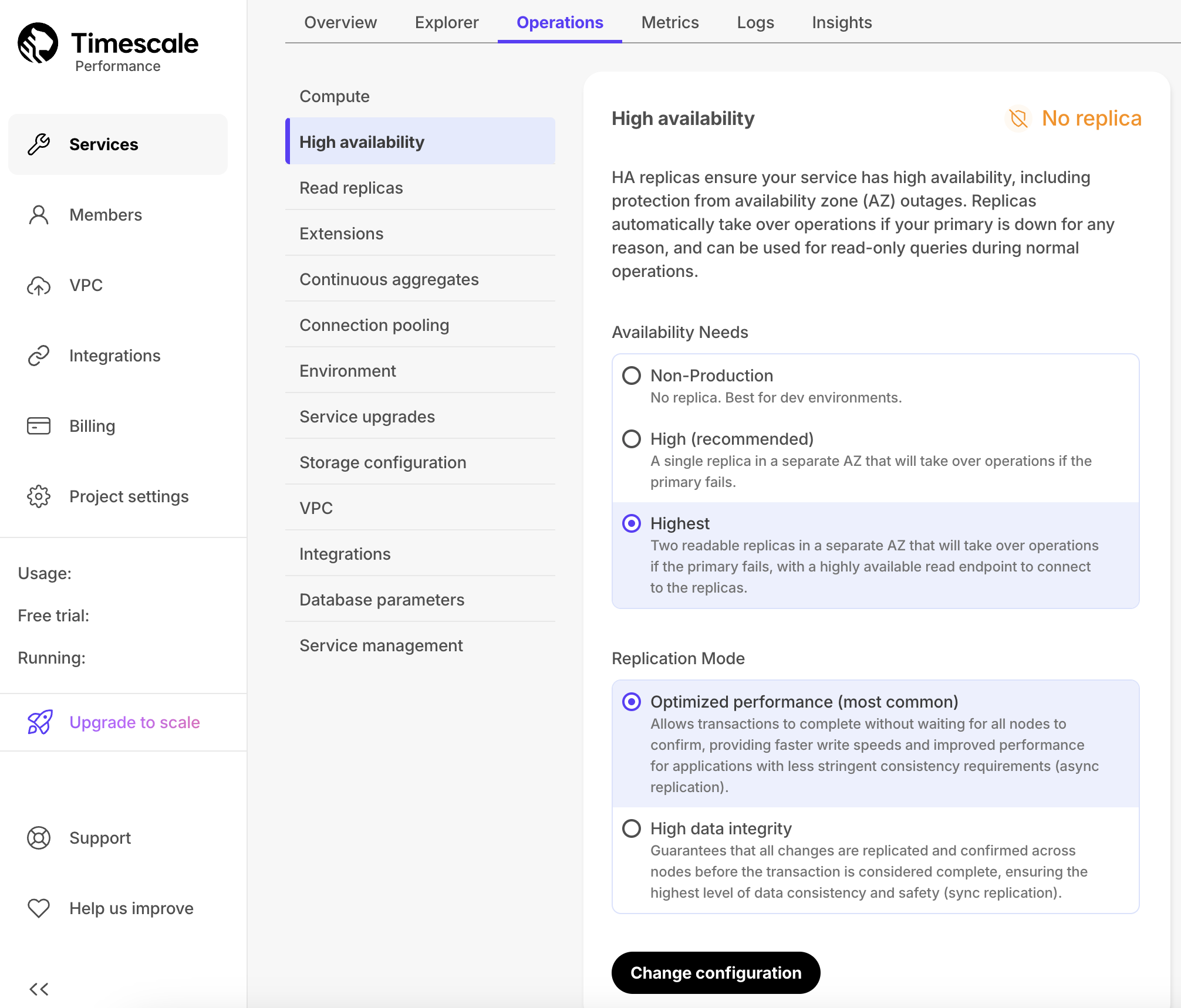

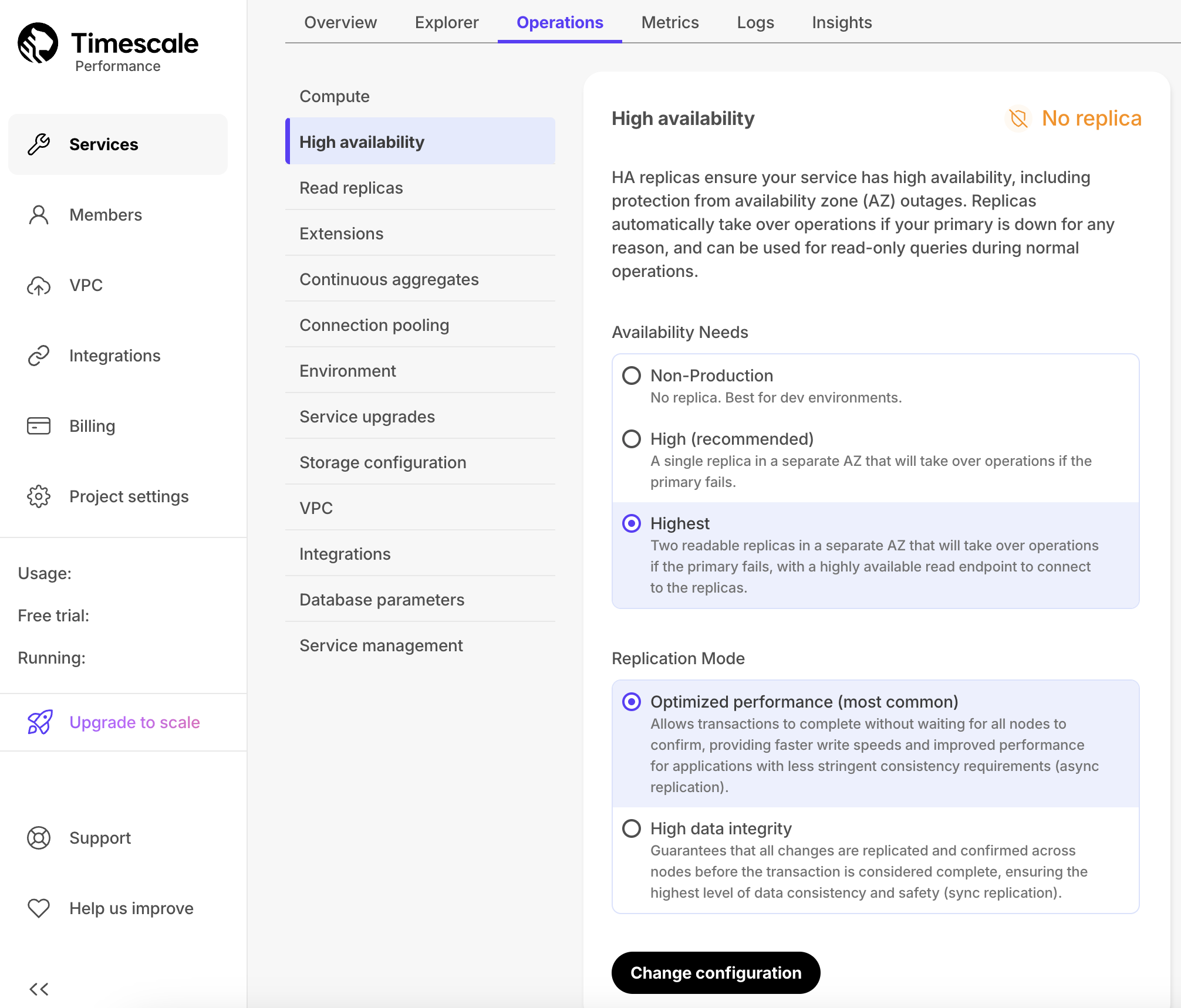

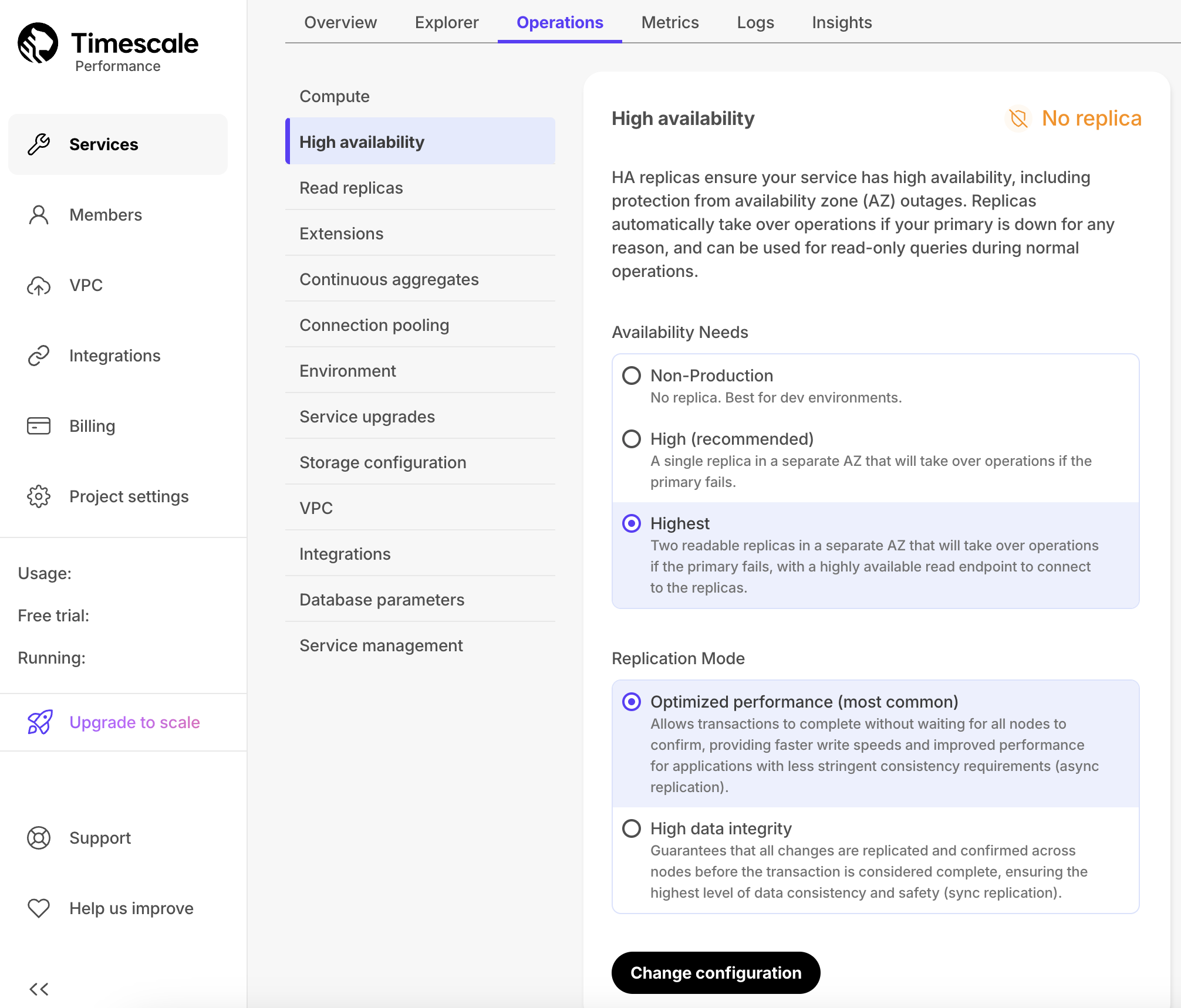

+## Reduce the risk of downtime and data loss with high availability

+

+By default, all $SERVICE_LONGs have rapid recovery enabled. However, if your app has very low tolerance

+for downtime, $CLOUD_LONG offers High Availability (HA) replicas. HA replicas are exact, up-to-date copies

+of your database hosted in multiple AWS availability zones (AZ) within the same region as your primary node.

+HA replicas automatically take over operations if the original primary data node becomes unavailable.

+The primary node streams its write-ahead log (WAL) to the replicas to minimize the chances of

+data loss during failover.

+

+High availability is available in the [scale and enterprise][pricing-plans] pricing plans for $CLOUD_LONG.

+

+

+

+For more information, see [High availability][high-availability].

+

+What next? See the [use case tutorials][tutorials], interact with the data in your $SERVICE_LONG using

+[your favorite programming language][connect-with-code], integrate your $SERVICE_LONG with a range of

+[third-party tools][integrations], plain old [Use Timescale][use-timescale], or dive into [the API][use-the-api].

+

+[tutorials]: /tutorials/:currentVersion:/

+[connect-with-code]: /quick-start/:currentVersion:/

+[integrations]: /use-timescale/:currentVersion:/integrations/