-

Notifications

You must be signed in to change notification settings - Fork 9.1k

HADOOP-17115. Replace Guava Sets usage by Hadoop's own Sets in hadoop-common and hadoop-tools #2985

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

c6b6e5b to

0db00ee

Compare

|

@aajisaka If you would like to take a look while QA is in progress (QA here is full build, hence will take 17+ hr for sure). |

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Looking good so far. A few minor issues noted below.

I'd be more comfortable with this patch if we got solid runs out of QA, especially since there's a banned import rule added. I think to do that we'll need this broken up into multiple PRS. It'd be best to do import bans for the parts of the project that have been converted as we go.

- first the utility definition and changes to hadoop-common and hadoop-tools

- dependent on the first PR changes to yarn

- dependent on the first PR changes to HDFS

- dependent on the first PR changes to mapreduce

- after the rest, add the top-level banned import rule.

This also minimizes the amount of churn if nightly should fail and we need to back out a particular PR from branch(es).

hadoop-common-project/hadoop-common/src/main/java/org/apache/hadoop/util/nonguava/Sets.java

Outdated

Show resolved

Hide resolved

...dfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/namenode/JournalSet.java

Outdated

Show resolved

Hide resolved

...che/hadoop/yarn/server/timelineservice/reader/TestTimelineReaderWebServicesHBaseStorage.java

Outdated

Show resolved

Hide resolved

...che/hadoop/yarn/server/timelineservice/reader/TestTimelineReaderWebServicesHBaseStorage.java

Outdated

Show resolved

Hide resolved

...che/hadoop/yarn/server/timelineservice/reader/TestTimelineReaderWebServicesHBaseStorage.java

Outdated

Show resolved

Hide resolved

...che/hadoop/yarn/server/timelineservice/reader/TestTimelineReaderWebServicesHBaseStorage.java

Outdated

Show resolved

Hide resolved

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Can we put this in org.apache.hadoop.util instead of referencing a thirdparty in the package? Doing so also avoids us needing a package-info.java for the new package.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Sounds good.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I prefer to keep nonguava package so that we have a clear view of what has been implemented to replace guava. Otherwise, it will be not straightforward to see which classes were coming from hadoop.util vs which were added recently.

Later, when moving out of guava is complete, we can move those classes back into util if necessary.

avoids us needing a package-info.java for the new package.

package-info is not a big deal to add.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There are already classes in o.a.hadoop.util with an origin in guava. (i e. LimitInputStream) why the distinction?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Sure let me restrict this PR to only hadoop-common and hadoop-tools only and once merged, will create dependent PRs

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Sounds good.

hadoop-common-project/hadoop-common/src/main/java/org/apache/hadoop/util/nonguava/Sets.java

Outdated

Show resolved

Hide resolved

...dfs-project/hadoop-hdfs/src/main/java/org/apache/hadoop/hdfs/server/namenode/JournalSet.java

Outdated

Show resolved

Hide resolved

...che/hadoop/yarn/server/timelineservice/reader/TestTimelineReaderWebServicesHBaseStorage.java

Outdated

Show resolved

Hide resolved

@virajjasani thanks for the changes. I agree with @busbey that the PR should be split into modules. I am a little bit sure I understand why |

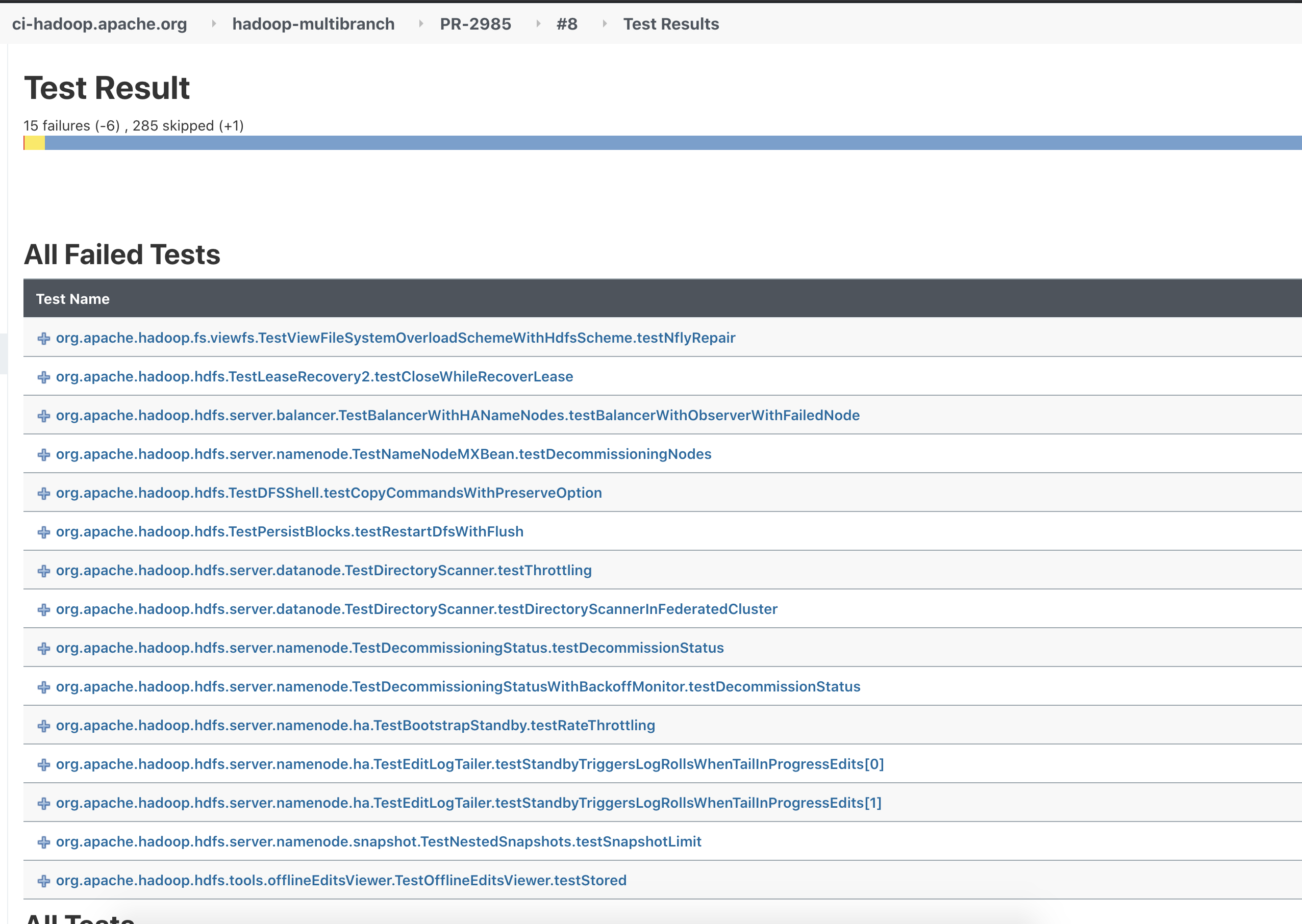

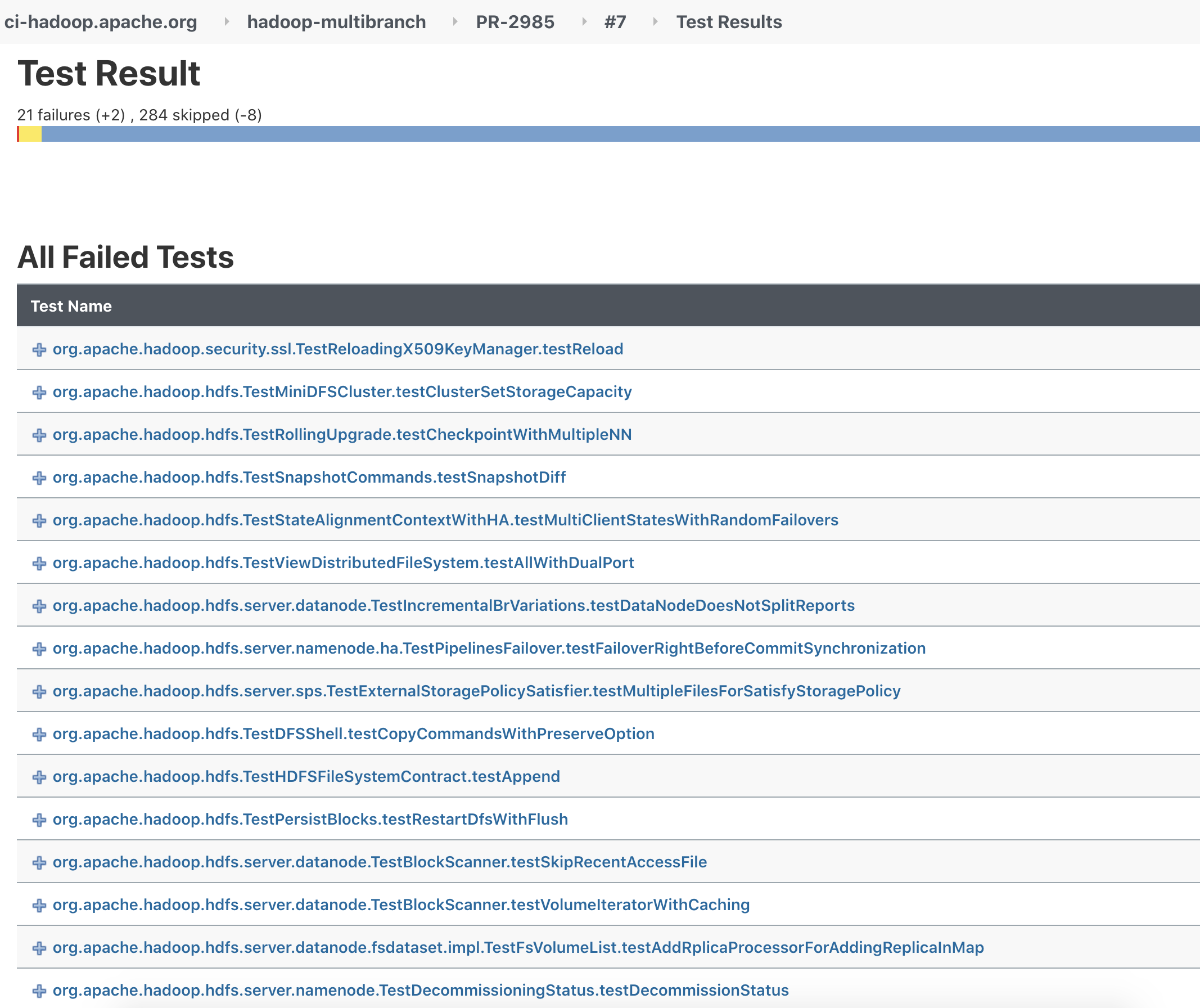

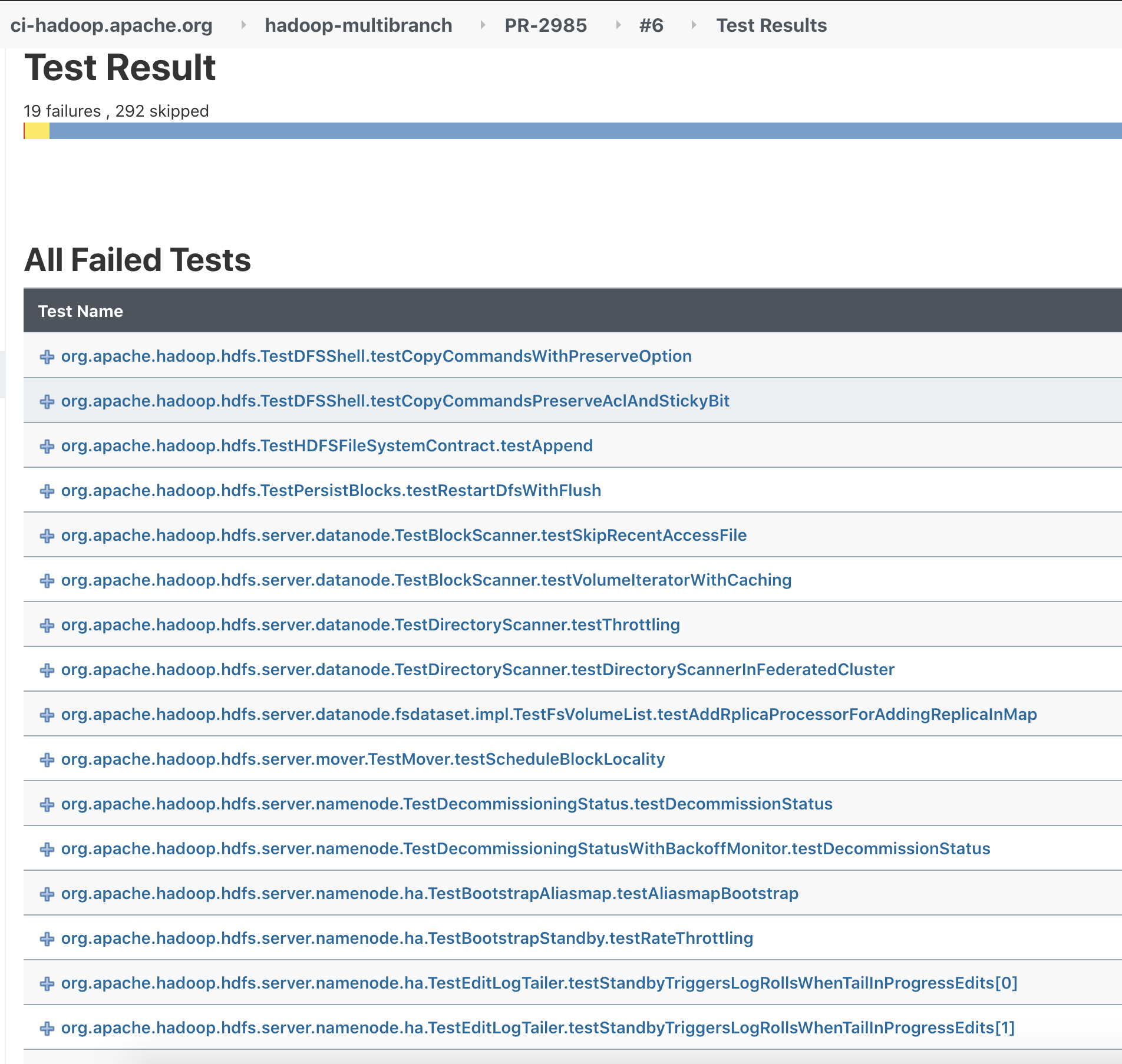

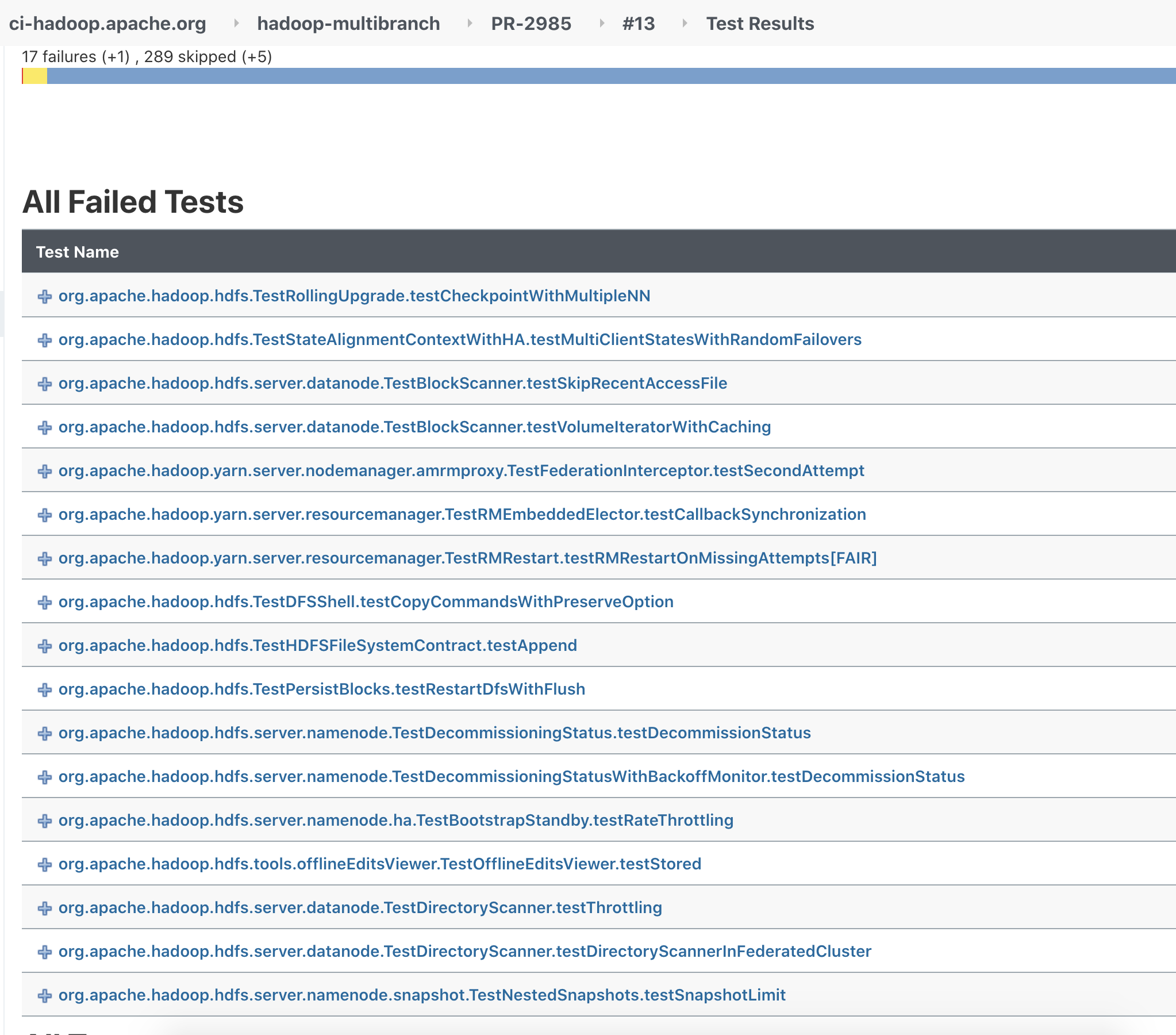

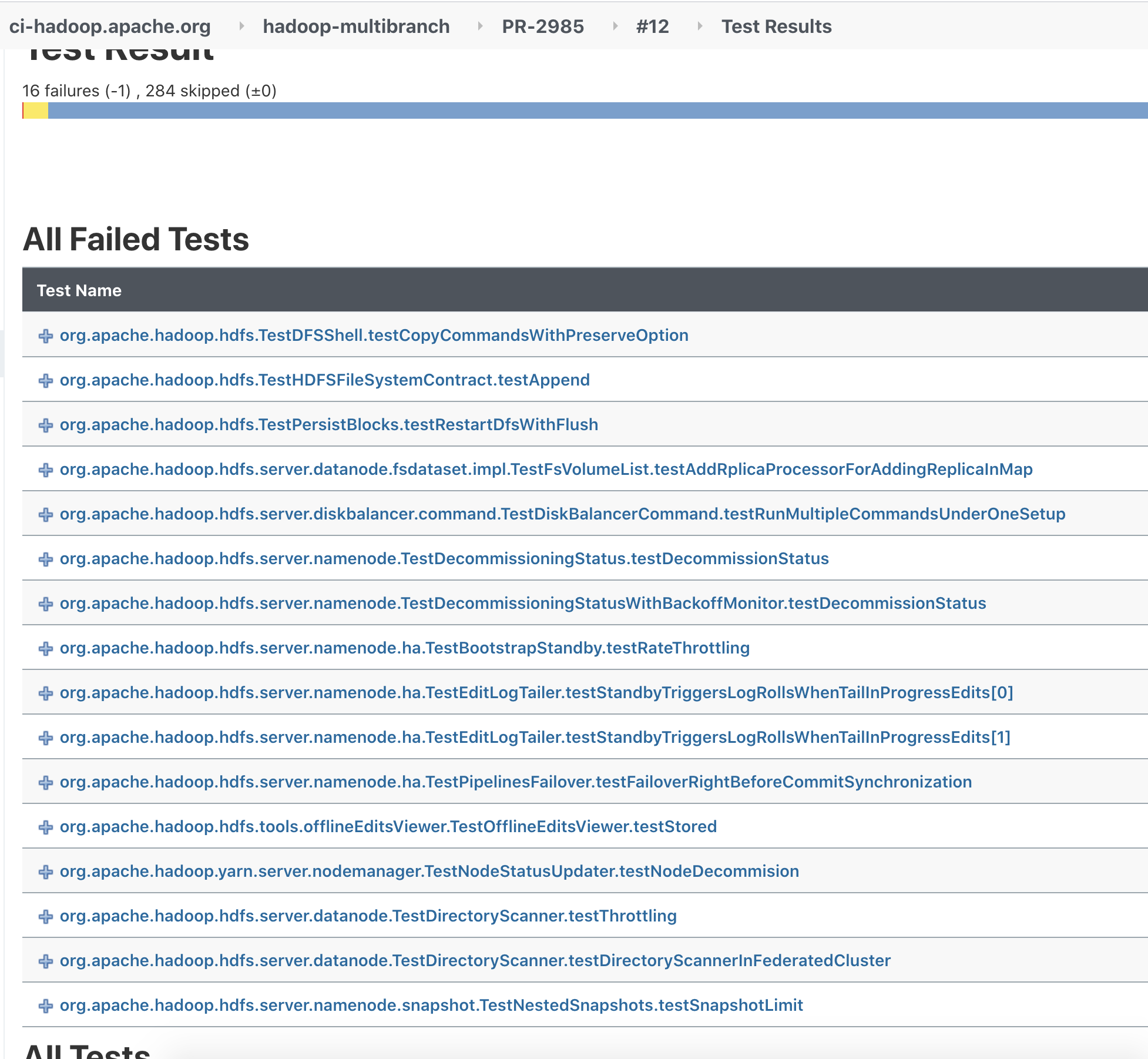

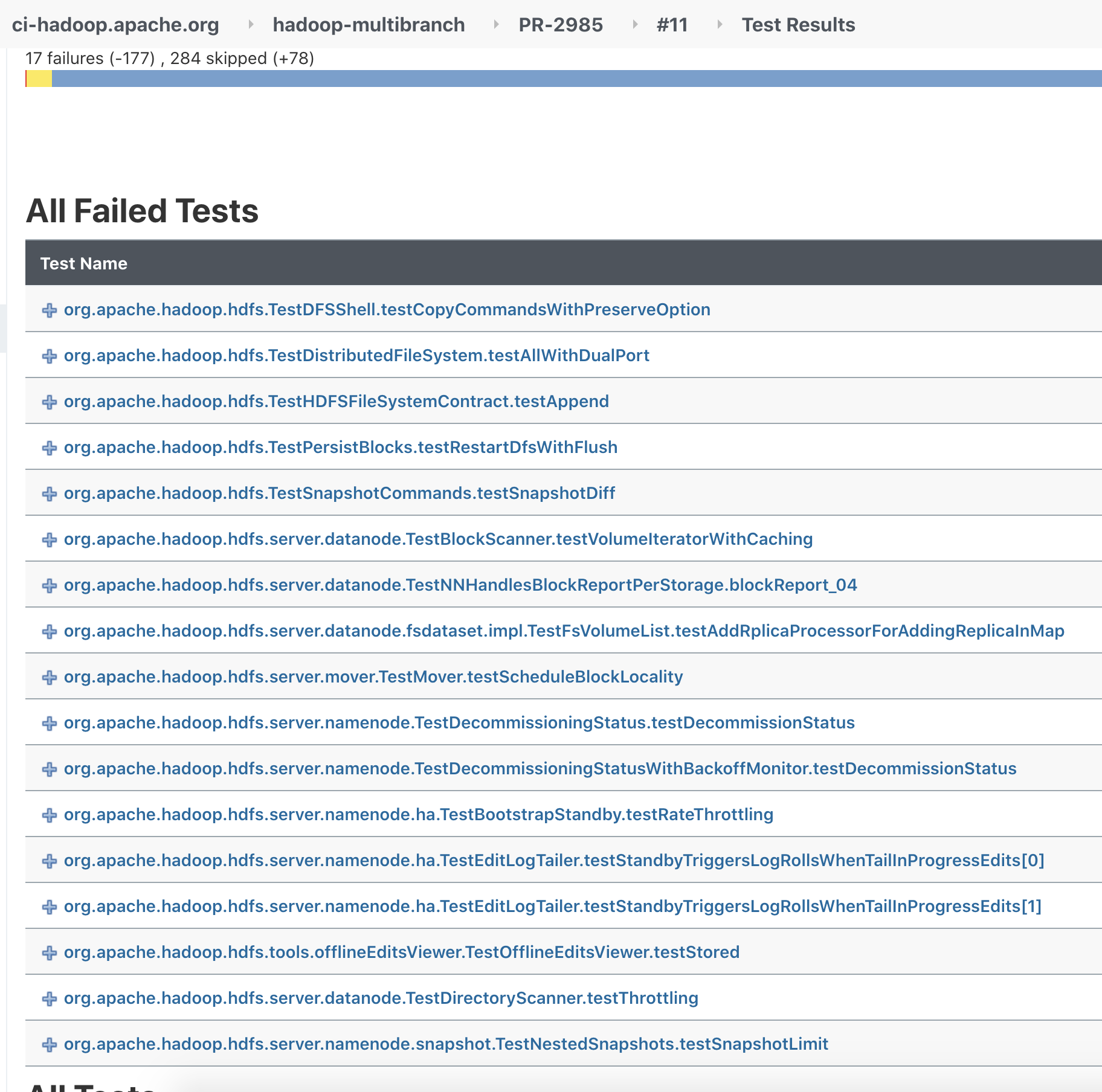

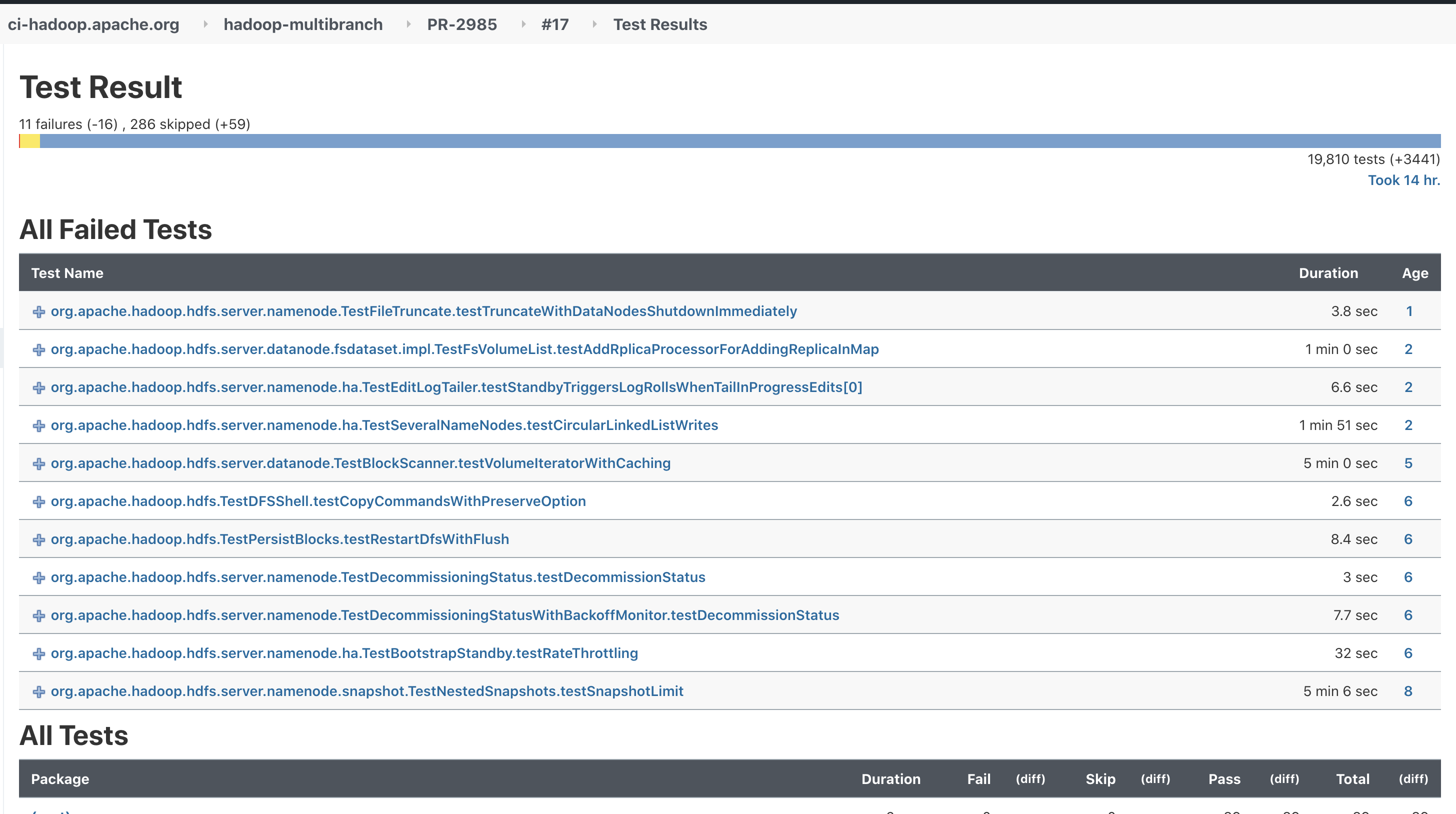

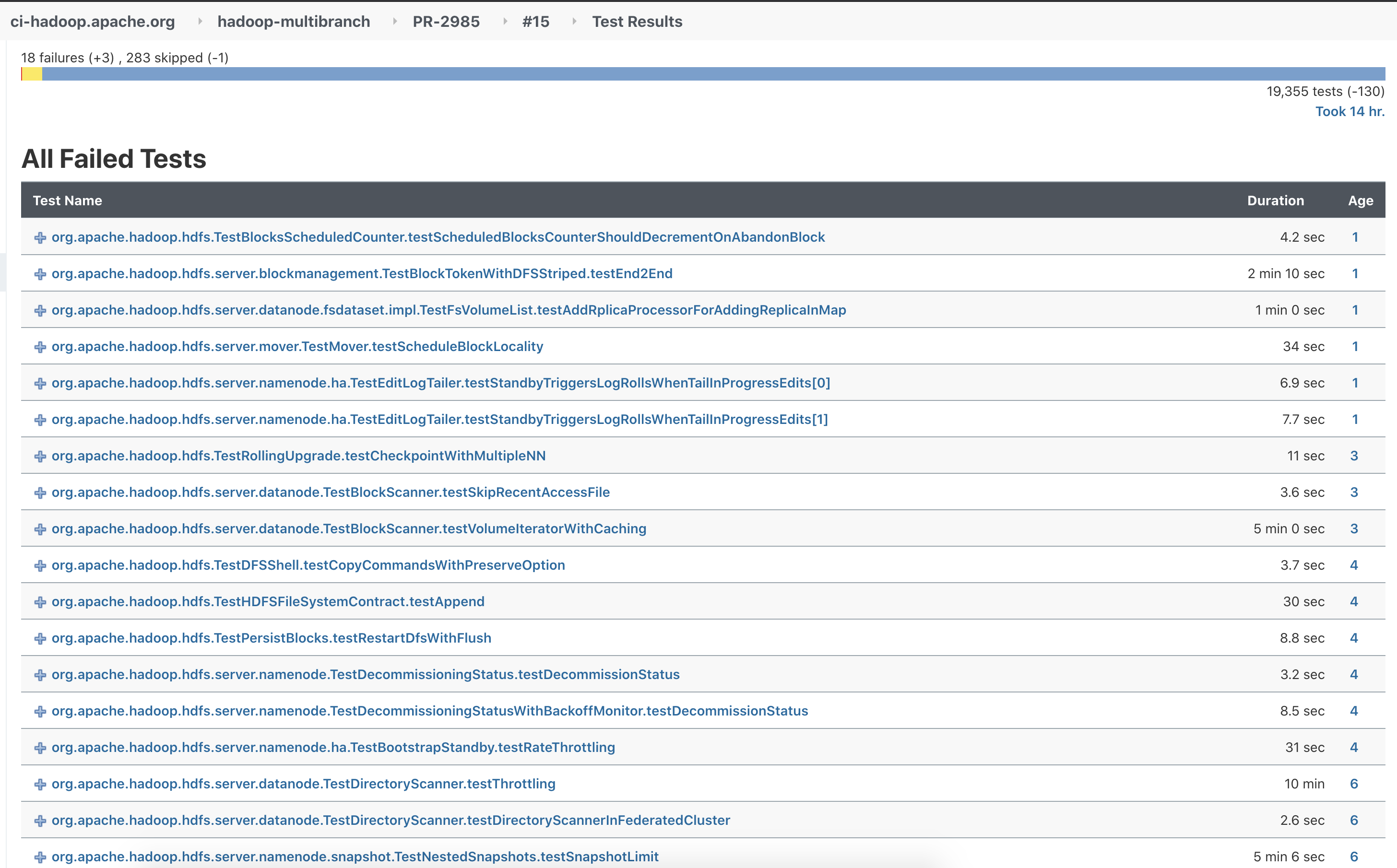

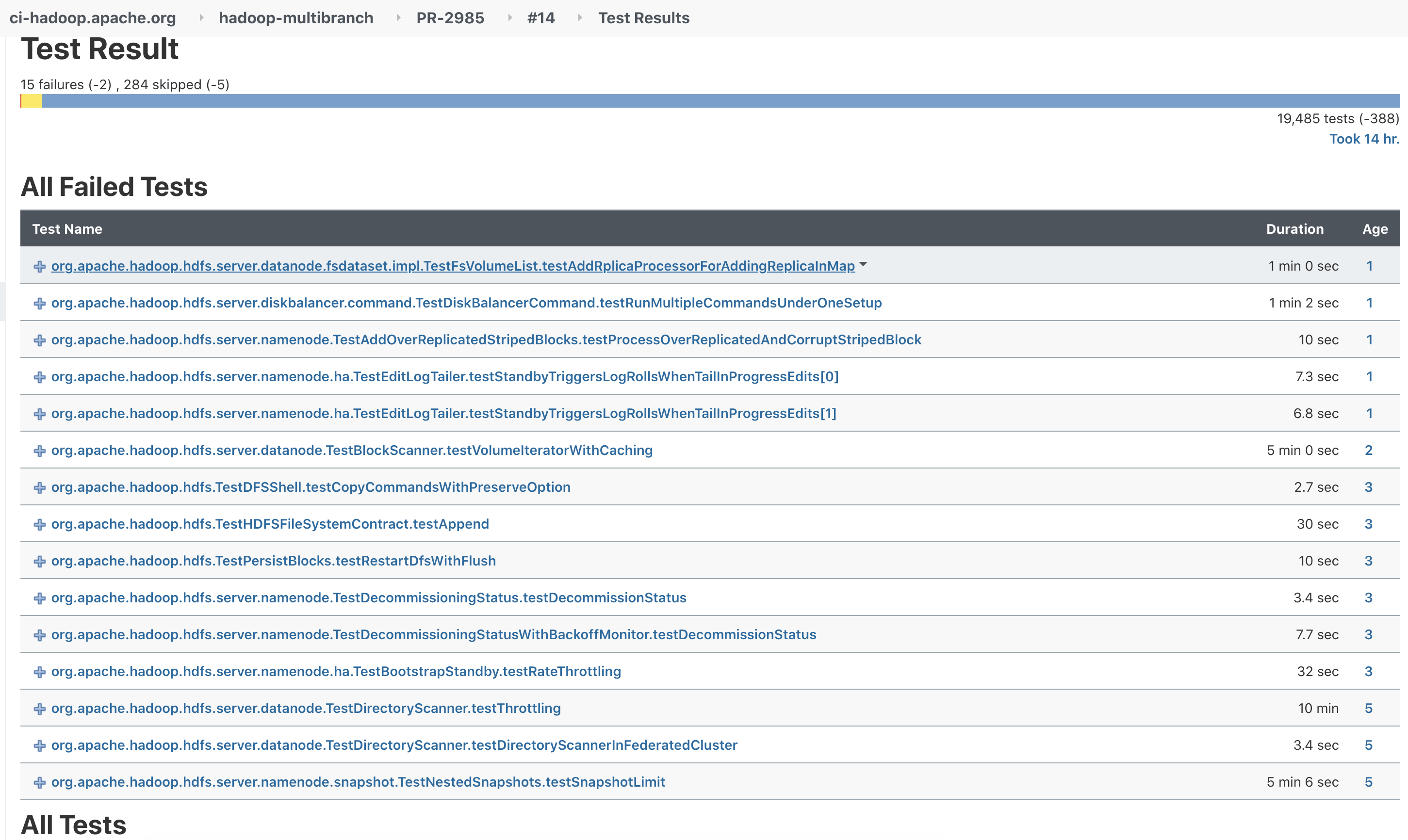

@virajjasani Thanks for making sure there is record of all the unit tests results. I can imaging how much time and effort it took to take a snapshot and upload it. |

|

@busbey There is one problem here though. Adding |

0db00ee to

5cfb6e8

Compare

I just saw this comment when page refreshed. I think this idea also looks nice and clean, but I believe if we cover hadoop-common and hadoop-tools with initial change, that one also looks clean and we have few usage in place for first commit. WDYT? |

5cfb6e8 to

ce13623

Compare

Describe the failure? Is it not complaining when a banned import is present? |

That's correct. It doesn't complain. We can proceed with either of these options:

|

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

|

@busbey I guess we can't do much about this Javac warning: |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm going to see if I can reproduce the enforcer failure and see what's up. Fix for the Javac warnings below.

hadoop-common-project/hadoop-common/src/main/java/org/apache/hadoop/util/Sets.java

Outdated

Show resolved

Hide resolved

ce13623 to

a314771

Compare

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

This comment has been minimized.

a314771 to

92a7595

Compare

This comment has been minimized.

This comment has been minimized.

92a7595 to

0005b92

Compare

This comment has been minimized.

This comment has been minimized.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Describe the failure? Is it not complaining when a banned import is present?

That's correct. It doesn't complain. We can proceed with either of these options:

- Keep the plugin present in affected sub-modules (as is the current state of this PR), or

- Do not use plugin for now and use it in the last PR with the only change to update main pom.

I had things work fine for me by adding the plugin to the build section for the hadoop-project pom. That's where we should eventually put the definition. Until all modules are ready for it though we either need to do specific modules like you've got here or put it into a profile that modules can opt-in or opt-out of. requiring opt-out for modules means you could mark the module we know will be done as follow-ups to this PR and avoid folks introducing a new use in some module that currently doesn't have any. I'm fine with either approach; I think the opt-out provides better coverage but the current definition per module is easier for folks to reason about if they're not already as familiar with the behavior of maven profiles.

I think this is close to landing. I have one correctness comment below, a minor license tracking concern, and some javadoc cleanup.

@amahussein please follow up here if there's anything you feel strongly about in reviewing that isn't covered in the current patch + feedback.

hadoop-common-project/hadoop-common/src/main/java/org/apache/hadoop/util/Sets.java

Outdated

Show resolved

Hide resolved

hadoop-common-project/hadoop-common/src/main/java/org/apache/hadoop/util/Sets.java

Outdated

Show resolved

Hide resolved

hadoop-common-project/hadoop-common/src/main/java/org/apache/hadoop/util/Sets.java

Outdated

Show resolved

Hide resolved

hadoop-common-project/hadoop-common/src/main/java/org/apache/hadoop/util/Sets.java

Outdated

Show resolved

Hide resolved

hadoop-common-project/hadoop-common/src/main/java/org/apache/hadoop/util/Sets.java

Outdated

Show resolved

Hide resolved

hadoop-common-project/hadoop-common/src/main/java/org/apache/hadoop/util/Sets.java

Outdated

Show resolved

Hide resolved

hadoop-common-project/hadoop-common/src/main/java/org/apache/hadoop/util/Sets.java

Outdated

Show resolved

Hide resolved

hadoop-common-project/hadoop-common/src/main/java/org/apache/hadoop/util/Sets.java

Outdated

Show resolved

Hide resolved

hadoop-common-project/hadoop-common/src/main/java/org/apache/hadoop/util/Sets.java

Outdated

Show resolved

Hide resolved

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Thanks for the review. Addressed all concerns and haven't squashed commit to identify clear difference.

I think the opt-out provides better coverage but the current definition per module is easier for folks to reason about if they're not already as familiar with the behavior of maven profiles.

True, I think we can continue with current behaviour as is and as part of last PR, remove from all sub-modules and just keep on high level pom.

hadoop-common-project/hadoop-common/src/main/java/org/apache/hadoop/util/Sets.java

Outdated

Show resolved

Hide resolved

This comment has been minimized.

This comment has been minimized.

7602bb6 to

7c63f9a

Compare

This comment has been minimized.

This comment has been minimized.

hadoop-common-project/hadoop-common/src/main/java/org/apache/hadoop/util/Sets.java

Outdated

Show resolved

Hide resolved

hadoop-common-project/hadoop-common/src/main/java/org/apache/hadoop/util/Sets.java

Outdated

Show resolved

Hide resolved

hadoop-common-project/hadoop-common/src/main/java/org/apache/hadoop/util/Sets.java

Outdated

Show resolved

Hide resolved

This comment has been minimized.

This comment has been minimized.

|

Thanks @busbey, let me squash all commits into single one. |

…-common and hadoop-tools

67685bd to

c5ccb5c

Compare

|

Next time I'd recommend just having the committer take care of squashing. That'll save waiting to get QA results on the new commit ref. |

|

🎊 +1 overall

This message was automatically generated. |

…-common and hadoop-tools (apache#2985) Signed-off-by: Sean Busbey <[email protected]>

… own Sets in hadoop-common and hadoop-tools (apache#2985) Signed-off-by: Sean Busbey <[email protected]> (cherry picked from commit e4062ad) Change-Id: Id7c3b050d4179fea9b6d5cd904f9b680d55eab3e

… own Sets in hadoop-common and hadoop-tools (apache#2985) Signed-off-by: Sean Busbey <[email protected]> (cherry picked from commit e4062ad) Change-Id: Id7c3b050d4179fea9b6d5cd904f9b680d55eab3e

This list captures the current state of non-upstream changes in our branch that are not in the public repo. ---Changes cherry-picked to branch-3.3.6-dremio from branch-3.3.2-dremio--- The below changes were on branch-3.3.2-dremio and needed to be brought to branch-3.3.6-dremio to prevent regressing scenarios these changes addressed. HADOOP-18928: S3AFileSystem URL encodes twice where Path has trailing / (proposed) DX-69726: Bumping okie from 1.6.0 to 3.4.0 (CVE-2023-3635) DX-69726: Bumping okie from 1.6.0 to 3.4.0 (CVE-2023-3635) DX-66470: Allow for custom shared key signer for ABFS DX-66673: Backport HADOOP-18602. Remove netty3 dependency DX-66673: Backport MAPREDUCE-7434. Fix ShuffleHandler tests. Contributed by Tamas Domok DX-66673: Backport MAPREDUCE-7431. ShuffleHandler refactor and fix after Netty4 upgrade. (apache#5311) DX-66673: Backport HADOOP-15327. Upgrade MR ShuffleHandler to use Netty4 apache#3259. Contributed by Szilard Nemeth. DX-66673: Backport HADOOP-17115. Replace Guava Sets usage by Hadoop's own Sets in hadoop-common and hadoop-tools (apache#2985) HADOOP-18676. jettison dependency override in hadoop-common lib DX-52816: Downgrade azure-data-lake-store-sdk to 2.3.3 to support dremio version. DX-52701: Remove node based module by Naveen Kumar DX-32012: Adding BatchList Iterator for ListFiles by “ajmeera.nagaraju” DX-18552: Make file status check optional in S3AFileSystem create() Add flag to skip native tests by Laurent Goujon DX-21904: Support S3 requester-pays headers by Brandon Huang DX-21471: Fix checking of use of OAuth credentials with AzureNativeFileSystem DX-19314: make new kms format configurable DX-17058 Add FileSystem to META-INF/services DX-17317 Fix incorrect parameter passed into AzureADAuthenticator-getTokenUsingClientCreds by TiffanyLam DX-17276 Azure AD support for StorageV1 by James Duong DX-17276 Add Azure AD support in Dremio's hadoop-azure library for Storage V1 support unwraps BindException in HttpServer2 ---Changes picked up by moving to 3.3.6--- The below changes were changes on branch-3.3.2-dremio that did not need to come to branch-3.3.6-dremio as the public 3.3.6 branch contained the fixes already. DX-67500: Backport HADOOP-18136. Verify FileUtils.unTar() handling of missing .tar files. DX-66673: Backport HADOOP-18079. Upgrade Netty to 4.1.77. (apache#3977) DX-66673: Backport HADOOP-11245. Update NFS gateway to use Netty4 (apache#2832) (apache#4997) DX-64051: Bump jettison from 1.1 to 1.5.4 in hadoop/branch-3.3.2-dremio DX-64051: Bump jettison from 1.1 to 1.5.4 in hadoop/branch-3.3.2-dremio DX-63800 Bump commons-net from 3.6 to 3.9.0 to address CVE-2021-37533 DX-27168: removing org.codehaus.jackson Change-Id: I6cdb968e33826105caff96e1c3d2c6313a550689

No description provided.