Compress saved llama state #1247

Closed

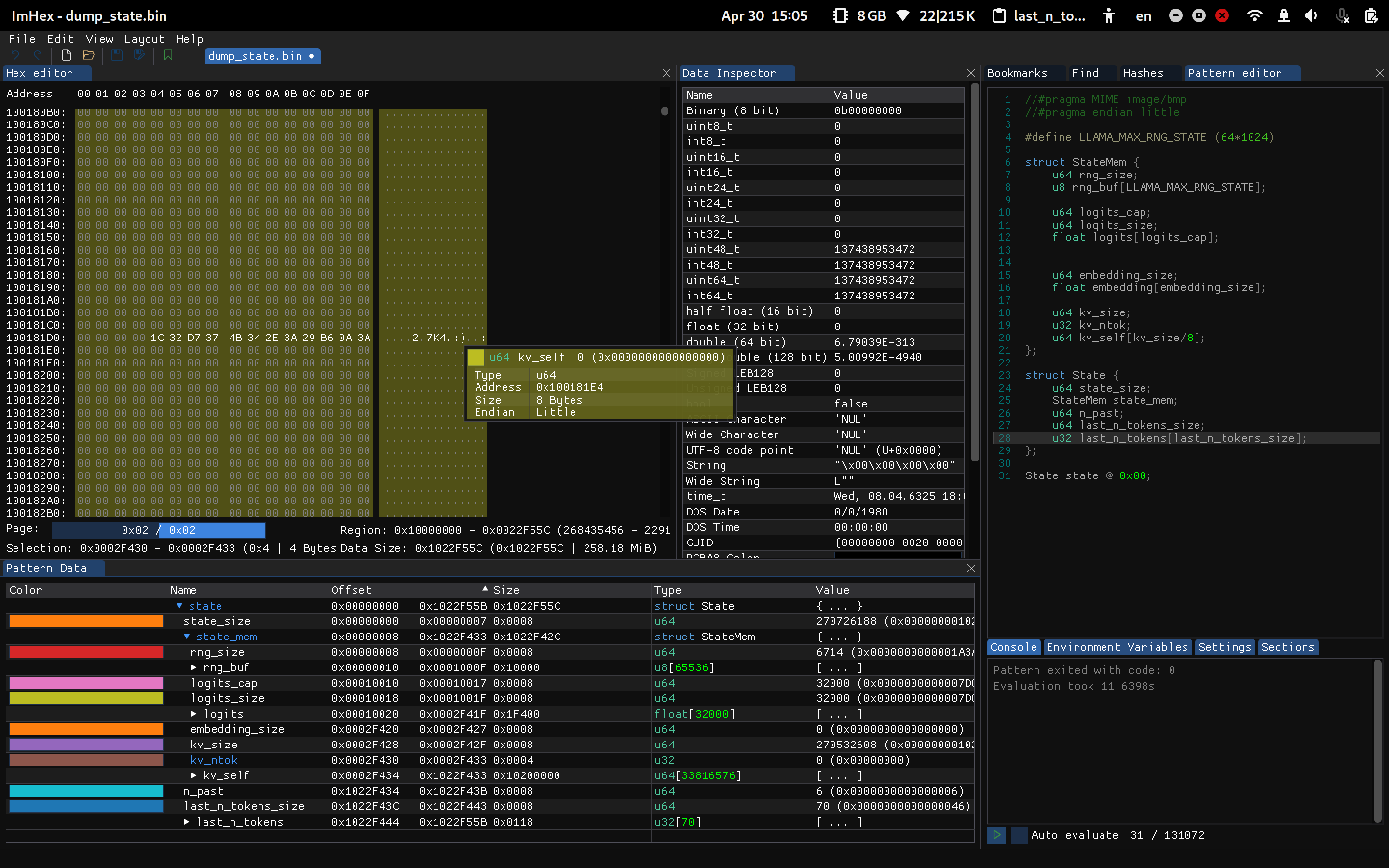

Compress the saved llama state using run-length encoding (RLE). I also tried lz4 and lzma compression, but apart from the zeroes the file seems to contain a lot of entropy, so RLE is the best choice for small to mid-size prompts. The uncompressed size is 259M, while the RLE-compressed size is 4.4M.
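To illustrate why RLE pays off when a buffer is dominated by runs of zeroes, here is a minimal sketch of a zero-run encoder and decoder. It is not the format used in this PR: the function names `rle_zero_encode` and `rle_zero_decode` and the `{0x00, run_length}` pair layout are assumptions made for the example only.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <vector>

// Hypothetical zero-run RLE (illustration only, not this PR's format):
// a non-zero byte is copied as-is; a run of 1..255 zero bytes is stored
// as the pair {0x00, run_length}.
std::vector<uint8_t> rle_zero_encode(const std::vector<uint8_t> & in) {
    std::vector<uint8_t> out;
    size_t i = 0;
    while (i < in.size()) {
        if (in[i] != 0) {
            out.push_back(in[i++]);   // literal byte, copied unchanged
            continue;
        }
        size_t run = 0;
        while (i < in.size() && in[i] == 0 && run < 255) {
            ++run;
            ++i;
        }
        assert(run >= 1 && run <= 255);
        out.push_back(0);                           // zero-run marker
        out.push_back(static_cast<uint8_t>(run));   // run length
    }
    return out;
}

std::vector<uint8_t> rle_zero_decode(const std::vector<uint8_t> & in) {
    std::vector<uint8_t> out;
    for (size_t i = 0; i < in.size(); ++i) {
        if (in[i] != 0) {
            out.push_back(in[i]);
            continue;
        }
        // A marker must be followed by a non-zero run length.
        if (i + 1 >= in.size() || in[i + 1] == 0) {
            throw std::runtime_error("corrupt RLE stream");
        }
        ++i;
        out.insert(out.end(), static_cast<size_t>(in[i]), uint8_t{0});
    }
    return out;
}
```

With this layout a run of up to 255 zeroes costs two bytes, while an isolated zero also costs two bytes, so the scheme only wins when zeroes come in long runs, as they appear to in a mostly unused state buffer.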

Some parts of the code are generated and need to be refactored.

If someone is working on implementing a prompt cache for `examples/main`, please let me know.

For our largest prompt, reason-act, the compression results are as follows:

Runtime results:
