
Event Queues. Why? How? When?

The boring CMS documentation states:

> Event Queues are needed to ensure data and cache coherence and support communication between Sitecore instances.

Hmm... Let's put it in more human language:

Whenever you change data on one Sitecore instance (e.g. save an item), the other Sitecore instances must react to that (e.g. remove related cache records, or update their local copy of the search indexes).

There must be a mechanism for sharing data changes between instances: the Event Queue.

- Performance

  - ~650 SQL requests are needed to start CMS 7.2 and show the Content Editor with caching enabled (every item is read from the database only once).

    - Further attempts to show the Content Editor would not trigger a single SQL query.

  - ~120 000 SQL requests (**~185 times more**) are needed to do the same without caching.

    - Further attempts to show the Content Editor would trigger tons of SQL queries.

- Maintaining search indexes

  - When the out-of-the-box (OOB) Lucene-based indexes are used, each instance has its own indexes folder, which must be updated according to data changes.

Reading data directly from the database on every call is too costly, so we cannot do without caching.

We also need to keep track of modifications to update search indexes, so some sort of storage is needed anyway.

Why not push changes through a dedicated notification service instead?

- Indexes would easily get outdated by missing even a single notification from the service (due to either network issues or Sitecore downtime).

- A service would require connections between Sitecore instances, and would break the existing isolation between them (3.2 Isolating the CM and CD Environments).

Every Sitecore instance polls the EventQueue table in every content database (e.g. master, core, web) every 2 seconds for fresh rows: those raised by other instances and having a stamp higher than the last processed one.
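The polling step boils down to a single query. This is a minimal, hypothetical sketch: it uses Python's sqlite3 in place of MSSQL, a trimmed-down EventQueue with only Stamp, EventType and InstanceName, and made-up helpers (create_queue, fetch_new_events); it is not Sitecore's actual SQL. A caller would run the query against each content database on a 2-second timer.

```python
import sqlite3
from typing import List, Tuple


def create_queue(conn: sqlite3.Connection) -> None:
    """Create a trimmed-down, hypothetical EventQueue with only the columns the poll uses."""
    conn.execute(
        "CREATE TABLE EventQueue ("
        " Stamp INTEGER PRIMARY KEY AUTOINCREMENT,"  # stands in for the increasing stamp
        " EventType TEXT NOT NULL,"
        " InstanceName TEXT NOT NULL)"
    )


def fetch_new_events(
    conn: sqlite3.Connection, instance_name: str, last_stamp: int
) -> List[Tuple[int, str, str]]:
    """Return rows raised by OTHER instances with a stamp above last_stamp, oldest first."""
    return conn.execute(
        "SELECT Stamp, EventType, InstanceName FROM EventQueue"
        " WHERE Stamp > ? AND InstanceName <> ?"
        " ORDER BY Stamp",
        (last_stamp, instance_name),
    ).fetchall()


# Usage: instance CD1 only picks up what CM1 raised.
conn = sqlite3.connect(":memory:")
create_queue(conn)
conn.executemany(
    "INSERT INTO EventQueue (EventType, InstanceName) VALUES (?, ?)",
    [("item:saved", "CM1"), ("publish:end", "CD1"), ("item:saved", "CD1")],
)
print(fetch_new_events(conn, "CD1", last_stamp=0))  # [(1, 'item:saved', 'CM1')]
```

Ordering by stamp matters: events are replayed in the order they were raised, and the highest stamp seen becomes the new "last processed" value.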

The last processed stamp is saved once every 10 seconds as a plain number in the Properties table (a key/value storage), under a key following the EQStamp_InstanceName pattern.
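A sketch of the stamp bookkeeping, assuming a plain dict stands in for the Properties table. StampTracker, stamp_key and the throttling logic are illustrative names, not Sitecore's classes; the clock is injectable so the 10-second throttle can be tested without waiting.

```python
import time
from typing import Callable, Dict

SAVE_INTERVAL_SECONDS = 10.0  # persist the stamp at most once per 10 seconds


def stamp_key(instance_name: str) -> str:
    """Build a key following the EQStamp_InstanceName pattern."""
    return f"EQStamp_{instance_name}"


class StampTracker:
    """Hypothetical helper: tracks the last processed stamp in memory and flushes
    it to a key/value store (a dict standing in for the Properties table)."""

    def __init__(self, instance_name: str, store: Dict[str, str],
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.key = stamp_key(instance_name)
        self.store = store
        self.clock = clock
        self.last_stamp = 0
        self.last_saved_at = clock()

    def mark_processed(self, stamp: int) -> None:
        """Record a processed stamp; write it out only if 10 seconds have passed."""
        self.last_stamp = stamp
        now = self.clock()
        if now - self.last_saved_at >= SAVE_INTERVAL_SECONDS:
            self.store[self.key] = str(self.last_stamp)  # saved as a plain number
            self.last_saved_at = now
```

In this sketch, a crash between two flushes means the next start resumes from the last saved stamp, so up to roughly 10 seconds of already-processed events may be replayed again; that is cheap for idempotent work such as cache clearing.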

If the stamp is NOT found, only events from the last 10 minutes are processed.
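The fallback rule can be expressed as a small filter. Event is a hypothetical stand-in for a queue row carrying only its stamp and creation time, and events_to_process is an illustrative helper; in Sitecore the selection happens inside its own data access code.

```python
from datetime import datetime, timedelta
from typing import List, NamedTuple, Optional

FALLBACK_WINDOW = timedelta(minutes=10)  # the 10-minute window described above


class Event(NamedTuple):
    """Hypothetical stand-in for a queue row: just its stamp and creation time."""
    stamp: int
    created: datetime


def events_to_process(
    events: List[Event], saved_stamp: Optional[int], now: datetime
) -> List[Event]:
    """Pick events after the saved stamp; with no saved stamp, fall back to
    events created within the last 10 minutes."""
    if saved_stamp is not None:
        return [e for e in events if e.stamp > saved_stamp]
    cutoff = now - FALLBACK_WINDOW
    return [e for e in events if e.created >= cutoff]
```

Anything older than the window is skipped without being replayed, which is one way a local index can quietly drift out of date.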

Every row/event read is deserialized and replayed locally as a remote one:

- Saving an item locally results in an "item:saved" event on the local instance, and an "item:saved:remote" event being replayed on the other instances

- Whenever a publish operation ends, a "publish:end" event is raised on the publishing instance, and "publish:end:remote" is replayed on the other instances

  - This event is used to clear the HTML caches on Sitecore instances
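Replaying a row as a remote event can be sketched with a tiny in-process event bus. LocalEventBus and replay_remote are hypothetical; the sketch also assumes each row already yields an event name such as "publish:end", whereas the real table stores .NET type names and Sitecore maps them to events internally.

```python
import json
from typing import Callable, Dict, List

Handler = Callable[[dict], None]


class LocalEventBus:
    """Hypothetical in-process event bus: handlers subscribe by event name."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Handler]] = {}

    def subscribe(self, name: str, handler: Handler) -> None:
        self._handlers.setdefault(name, []).append(handler)

    def raise_event(self, name: str, args: dict) -> None:
        for handler in self._handlers.get(name, []):
            handler(args)


def replay_remote(bus: LocalEventBus, event_name: str, instance_data: str) -> None:
    """Deserialize a queued row's JSON payload and raise it locally under the
    ':remote' name, e.g. "publish:end" becomes "publish:end:remote"."""
    args = json.loads(instance_data)
    bus.raise_event(f"{event_name}:remote", args)


# Usage: a handler that would clear HTML caches for the listed sites.
bus = LocalEventBus()
cleared: List[str] = []
bus.subscribe("publish:end:remote", lambda args: cleared.extend(args["sites"]))
replay_remote(bus, "publish:end", '{"sites": ["website"]}')
print(cleared)  # ['website']
```

Keeping the ":remote" suffix separate from the local name lets a handler choose whether it reacts to local changes, remote ones, or both.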

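There are several ways to subscribe to these events:

- Modify the configuration by adding a 'handler' node (pointing to a method with an EventHandler-like signature) under the relevant 'event' node of the 'configuration/sitecore/events' section, like: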

```xml
<event name="publish:end:remote">
  <handler type="Sitecore.Publishing.HtmlCacheClearer, Sitecore.Kernel" method="ClearCache">
    <sites hint="list">
      <site>website</site>
    </sites>
  </handler>
</event>
```

- Use the Sitecore.Eventing.EventManager.Subscribe API for custom events (the event class must be serializable)

- Use the Sitecore.Events.Event.Subscribe API for known events like "item:saved"
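Custom events must be serializable because they travel between instances as a JSON payload in the queue. The sketch below loosely mirrors the idea of type-based subscription behind Sitecore.Eventing.EventManager.Subscribe; TypedEventManager and CacheFlushRequested are hypothetical and do not reproduce Sitecore's signatures.

```python
import json
from dataclasses import asdict, dataclass
from typing import Any, Callable, Dict, List, Type, TypeVar

T = TypeVar("T")


@dataclass
class CacheFlushRequested:
    """Hypothetical custom event; every field must be JSON-serializable."""
    cache_name: str
    reason: str


class TypedEventManager:
    """Hypothetical analogue of type-based subscription: handlers are keyed by
    event class, and events cross instances as JSON text."""

    def __init__(self) -> None:
        self._handlers: Dict[type, List[Callable[[Any], None]]] = {}

    def subscribe(self, event_type: Type[T], handler: Callable[[T], None]) -> None:
        self._handlers.setdefault(event_type, []).append(handler)

    @staticmethod
    def serialize(event: Any) -> str:
        # json.dumps raises TypeError here if a field is not JSON-serializable.
        return json.dumps({"type": type(event).__name__, "data": asdict(event)})

    def deliver(self, payload: str) -> int:
        """Rebuild the event from JSON and call its subscribers; returns how many ran."""
        message = json.loads(payload)
        for event_type, handlers in self._handlers.items():
            if event_type.__name__ == message["type"]:
                event = event_type(**message["data"])
                for handler in handlers:
                    handler(event)
                return len(handlers)
        return 0
```

An event type nobody subscribed to is simply ignored on the receiving side in this sketch, so delivery returns 0 handlers.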

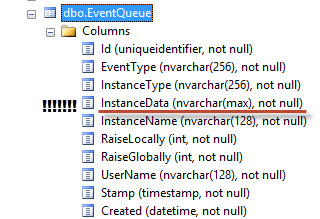

## EventQueue table structure

The EventQueue table has the following columns:

- [Id] - not used, will be removed some day

- [EventType] & [InstanceType] - formed from typeof() and GetType() respectively

- [InstanceData] - Event data in JSON format

- [InstanceName] - which instance raised an event

- [RaiseLocally] & [RaiseGlobally] - bit flags; I've never seen RaiseLocally set to true

- [Stamp] - monotonically increasing number used to find new rows

- [Created] & [UserName] - nothing to add to the self-descriptive column names
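To make the column list concrete, here is a hypothetical Python model of one row. The EventType/InstanceType split is shown as a declared base class versus the runtime class of the event object, Python's rough equivalent of typeof() versus GetType(); EventQueueRow, BaseEvent, ItemSavedEvent and make_row are illustrative names, and the field types are simplified assumptions rather than the real MSSQL column types.

```python
import json
import uuid
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Any


@dataclass
class EventQueueRow:
    """Hypothetical model of one EventQueue row (types simplified)."""
    id: str               # [Id]: not used
    event_type: str       # [EventType]: declared type, like C# typeof(TEvent)
    instance_type: str    # [InstanceType]: runtime type, like C# evt.GetType()
    instance_data: str    # [InstanceData]: JSON payload
    instance_name: str    # [InstanceName]: which instance raised the event
    raise_locally: bool   # [RaiseLocally]
    raise_globally: bool  # [RaiseGlobally]
    stamp: int            # [Stamp]: increasing number used to find new rows
    created: datetime     # [Created]
    user_name: str        # [UserName]


class BaseEvent:
    """Hypothetical declared event type."""


class ItemSavedEvent(BaseEvent):
    """Hypothetical concrete event raised at runtime."""

    def __init__(self, item_id: str) -> None:
        self.item_id = item_id


def make_row(declared: type, event: Any, instance_name: str,
             stamp: int, user_name: str) -> EventQueueRow:
    """Build a row the way the column descriptions above suggest."""
    return EventQueueRow(
        id=str(uuid.uuid4()),
        event_type=declared.__name__,
        instance_type=type(event).__name__,
        instance_data=json.dumps(vars(event)),
        instance_name=instance_name,
        raise_locally=False,  # the author has never seen it set to true
        raise_globally=True,
        stamp=stamp,
        created=datetime.now(timezone.utc),
        user_name=user_name,
    )
```

Storing both names lets a reader deserialize the payload into the concrete class while still dispatching by the declared event type.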

Drawbacks of this approach:

- Removing obsolete data from the table can be tricky, as it could require a table lock

- Keeping a lot of data in each row is painful for MSSQL

- Keeping an excessive number of records in a frequently queried table is a bad idea

- Using a relational database engine for simple operations on non-relational data (which could be handled by free database engines) limits the possibilities for horizontal scaling

- Events can be raised by all threads of all Sitecore instances, whereas processing is done in a single thread. Thus the delay between adding a remote event and processing it can grow during bulk content updates (e.g. massive publishing). As a result, obsolete data could stay in caches for a while until removed.

- An event/row can be removed by the cleanup task before it has been processed by all instances, in which case a Sitecore restart and an index rebuild would be needed.

- Having too many records in the table could cause the cleanup task to fail with a timeout, and it would keep consuming more and more resources.
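One way to mitigate the last two problems is to delete only rows that every instance has already processed, and to delete them in small batches. This is a hedged sketch of that idea, not Sitecore's cleanup agent: safe_cleanup_bound and cleanup_in_batches are hypothetical, the per-instance stamps (as stored under EQStamp_InstanceName keys) are assumed to be available in a dict, and sqlite3 stands in for MSSQL so the example runs.

```python
import sqlite3
from typing import Dict


def safe_cleanup_bound(processed_stamps: Dict[str, int]) -> int:
    """Highest stamp that every known instance has already processed (0 if none known)."""
    return min(processed_stamps.values()) if processed_stamps else 0


def cleanup_in_batches(conn: sqlite3.Connection, max_stamp: int,
                       batch_size: int = 1000) -> int:
    """Delete rows with Stamp <= max_stamp, batch_size rows per statement,
    committing after each batch; returns the total number of rows deleted."""
    total = 0
    while True:
        cur = conn.execute(
            "DELETE FROM EventQueue WHERE Stamp IN ("
            " SELECT Stamp FROM EventQueue WHERE Stamp <= ?"
            " ORDER BY Stamp LIMIT ?)",
            (max_stamp, batch_size),
        )
        conn.commit()  # keep each transaction short
        total += cur.rowcount
        if cur.rowcount < batch_size:  # nothing left below the bound
            return total
```

Small batches keep each DELETE short, which reduces the chance of a long-held lock or a timeout on a large table. The trade-off: an instance that was decommissioned but still has a stamp entry would hold cleanup back forever, so stale entries would need pruning.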