[OpenCL] Optimize BatchedReduceAdd implementation #3190



@SplitInfinity has imported this pull request. If you are a Facebook employee, you can view this diff on Phabricator.

@SplitInfinity

No, I am not going to modify non-OpenCL

I think this PR has the right idea, but I'm not so sure about the approach.

At least for now, I wouldn't be too worried about that -- currently backend-specific Nodes are complete black boxes*. They are never touched except for DCE/CSE. So I think only the backend itself would be able to make valid changes here that impact shapes, as otherwise they would also need to update the backend-specific Nodes which they don't understand. *If we end up adding functionality mentioned in #1830 then we may need to revisit this.


Nice! Overall, it looks very clean. How do you test it? Do we need to add any new tests?

I think a totally different approach could be to run a backend-specific pass (not post-lowering) that performs the rewriting at the very end of the graph processing pipeline, or even as an IR pass after the usual IR generation. In that case nobody can overwrite its results and make them inconsistent.

afe992b to dea8c99

When I implemented this operator for OpenCL, we already had tests for it that cover reducing on axis 0 and axis 1 as well as producing a zero-dimensional result. I added another test in #2958 for axis 2 (which in that specific test case is also the last dimension), so I think we have enough tests.
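As an illustration of the cases listed above, here is a minimal host-side reference for a sum along one axis. It is a sketch only: `reduceAddAlongAxis` is a hypothetical name, not a function from this repository, and it assumes a dense row-major `float` tensor. A reference like this could be used to cross-check a backend kernel on axis 0, axis 1, the last axis, and a one-dimensional input that reduces to a single value.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical reference (not part of the project): sums a dense row-major
// tensor with shape `dims` along `axis` and returns the flattened result.
std::vector<float> reduceAddAlongAxis(const std::vector<float> &in,
                                      const std::vector<std::size_t> &dims,
                                      std::size_t axis) {
  // Split the shape into [outer, dims[axis], inner].
  std::size_t outer = 1;
  std::size_t inner = 1;
  for (std::size_t i = 0; i < axis; ++i) {
    outer *= dims[i];
  }
  for (std::size_t i = axis + 1; i < dims.size(); ++i) {
    inner *= dims[i];
  }
  const std::size_t n = dims[axis];

  std::vector<float> out(outer * inner, 0.0f);
  for (std::size_t o = 0; o < outer; ++o) {
    for (std::size_t k = 0; k < n; ++k) {
      for (std::size_t j = 0; j < inner; ++j) {
        // Accumulate every element that differs only in its `axis` index.
        out[o * inner + j] += in[(o * n + k) * inner + j];
      }
    }
  }
  return out;
}
```

Reducing a one-dimensional input yields a single element here, which stands in for the zero-dimensional result mentioned in the comment.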

dea8c99 to c145cc7

c145cc7 to 84541cf


LGTM!


Description: This commit reduces the time taken to execute a batchedreduceadd instruction in the OpenCL backend by moving the transfer of the input and output slice size data from execution time to addNetwork time. The slice sizes of the input and output can be computed using static shape information at compile time, so they don't have to be computed and transferred once per operation invocation at runtime. This commit introduces a post-lowering OCLBackend transformation that replaces BatchedReduceAddNode with a semantically identical OCLBatchedReduceAddNode that has two additional inputs for the slice sizes of the input and output nodes. The code to compute these slice sizes has been moved from OpenCLFunction::execute() to OCLBackend::transformPostLowering and modified to write into the payload Tensors of Constants that are used as the inputs to the previously mentioned OCLBatchedReduceAddNode. These slice sizes are then copied to the device with the rest of the constants needed by the function. Test Plan: All unit tests pass.

84541cf to 28e048d


@SplitInfinity merged this pull request in f06ef01.

Description

This commit reduces the time taken to execute a `batchedreduceadd` instruction in the OpenCL backend by moving the transfer of the input and output slice size data from execution time to `addNetwork` time.

The slice sizes of the input and output can be computed using static shape information at compile time, so they don't have to be computed and transferred once per operator invocation at runtime. This commit introduces a post-lowering `OCLBackend` transformation that replaces `BatchedReduceAddNode` with a semantically identical `OCLBatchedReduceAddNode` that has two additional inputs for the slice sizes of the input and output nodes. The code to compute these slice sizes has been moved from `OpenCLFunction::execute` to `OCLBackend::transformPostLowering` and modified to write into the payload `Tensors` of `Constants` that are used as the inputs to the previously mentioned `OCLBatchedReduceAddNode`. These slice sizes are then copied to the device with the rest of the constants needed by the function.
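To make the "static shape information" point concrete, here is a minimal sketch of how slice sizes can be derived from dimensions alone. It is not the code from this commit: `sliceSizes` and `reducedDims` are hypothetical helpers, and the sketch assumes that the slice size of a dimension means the number of elements covered by one step along it in a dense row-major layout.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical helper (an assumption, not the backend's API): for a dense
// row-major shape, returns per dimension the number of elements spanned by
// one step along that dimension.
std::vector<std::size_t> sliceSizes(const std::vector<std::size_t> &dims) {
  std::vector<std::size_t> sizes(dims.size(), 1);
  // Walk from the second-to-last dimension down to the first.
  for (std::size_t i = dims.size(); i-- > 1;) {
    sizes[i - 1] = sizes[i] * dims[i];
  }
  return sizes;
}

// Hypothetical helper: shape of the output once `axis` has been reduced away.
std::vector<std::size_t> reducedDims(const std::vector<std::size_t> &dims,
                                     std::size_t axis) {
  std::vector<std::size_t> out;
  for (std::size_t i = 0; i < dims.size(); ++i) {
    if (i != axis) {
      out.push_back(dims[i]);
    }
  }
  return out;
}
```

Both results depend only on the shape and the reduction axis, never on tensor contents, which is why they can be computed once ahead of execution and stored as constant data instead of being recomputed on every invocation.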

Test Plan

All unit tests pass.

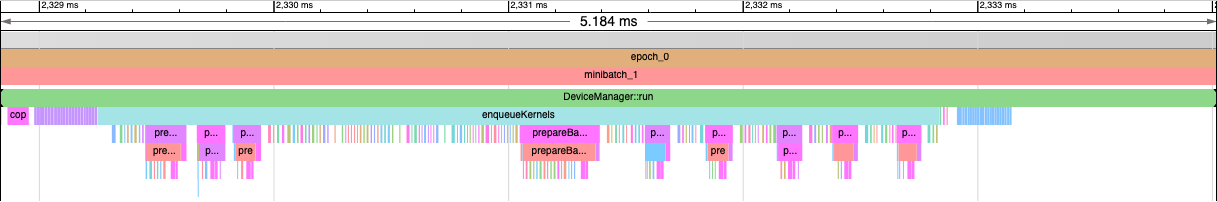

DLRM trace before this optimization:

DLRM trace after this optimization:

Time taken to process one minibatch has decreased by 20%.