
Implements both Tensor and NdArray interfaces from same instance #92


Is it possible to have

@Craigacp: unfortunately no, I thought of that, but

public interface TType extends Tensor

But in this case, if I have

public interface TFloat32 extends TFloat, Tensor<TFloat32> // not allowed here because both `Tensor` and `Tensor<TFloat32>` are extended

A solution would be to have

Maybe … Though that's starting to get messy as well, and I'm not sure it would compile.

Yes, I tried to leave the … Maybe it can now be replaced simply by … It will still be strange to have

Could we not make

Yes, it looks like our solutions are converging on this, and that we should get rid entirely of

Ok, I did some tests. So dropping … The only "negative" impact, I would say, is that you can no longer filter out, at compile time, tensors of the wrong datatype in the signature of a method. For example:

static <U extends TNumber> flatten(..., Shape<U> shape, DataType<U> dtype)

Now, since type families are not extended by the tensor class, you need to specify both boundaries, otherwise classes like …

static <U extends Tensor & TNumber> flatten(..., Shape<U> shape, DataType<U> dtype)

I still personally prefer this new approach, and declaring both boundaries in the signature of such a method can also be seen as more explicit (I want U to be a "Tensor" of "Number"s). If we all agree on this, I'll push the new version to this draft PR. I'll need to refactor the op generator a bit, though.
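To make the two boundaries concrete, here is a minimal sketch assuming stripped-down stand-ins for `Tensor` and `TNumber`; the `NumberTag` class and `totalSize` method are hypothetical, and none of these are the real tensorflow-java types. Once type families no longer extend the tensor type, a bound of `U extends TNumber` alone would accept a family-only class and would not expose any tensor methods; the intersection bound `U extends Tensor & TNumber` restores both guarantees.

```java
import java.util.List;

// Hypothetical, stripped-down stand-ins for the types discussed above;
// they are not the real tensorflow-java interfaces.
interface Tensor {
    long size();
}

// Type family as a plain marker interface that does NOT extend Tensor.
interface TNumber {}

// A concrete tensor type implements both the tensor interface and its family.
final class TFloat32 implements Tensor, TNumber {
    private final float[] data;

    TFloat32(float... data) {
        this.data = data.clone();
    }

    @Override
    public long size() {
        return data.length;
    }
}

// Hypothetical family-only class: a TNumber that is not a Tensor.
final class NumberTag implements TNumber {}

final class BoundsDemo {
    // "U extends TNumber" alone would also accept NumberTag and would not expose size().
    // The intersection bound requires U to be both a Tensor and a TNumber.
    static <U extends Tensor & TNumber> long totalSize(List<U> tensors) {
        long total = 0;
        for (U t : tensors) {
            total += t.size();
        }
        return total;
    }

    public static void main(String[] args) {
        System.out.println(totalSize(List.of(new TFloat32(1f, 2f), new TFloat32(3f)))); // prints 3
        // totalSize(List.of(new NumberTag())); // rejected at compile time: NumberTag is not a Tensor
    }
}
```

The commented-out call is the case the single `TNumber` bound would have let through.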

Could you push out that version so I can look through it? I'm having some difficulty seeing the relationships between types, and it's probably easier for you to push it than for me to make equivalent changes to my own fork.

Here, @Craigacp, I pushed it into this branch. I ended up tweaking it a bit more to cover most of the cases that I find useful. Now, … So the tensor hierarchy is used to map the data to Java (primitive) types, while the type families are used to classify tensors based on their TF datatype.
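A minimal sketch of these two orthogonal hierarchies, assuming hypothetical `FloatTensor` (data mapped to Java `float`) and `TFloating` (TF datatype family) interfaces; the names are illustrative and not taken from the branch. It shows how two distinct TF datatypes can share the same Java-side data mapping while belonging to the same family, and how a method can require both.

```java
// Hypothetical sketch; these names are illustrative, not the branch's actual types.

// Data hierarchy: how the tensor's values map to a Java primitive type.
interface Tensor {
    long size();
}

interface FloatTensor extends Tensor {
    float getFloat(int index);
}

// Type families: how TensorFlow classifies the datatype.
interface TType {}
interface TNumber extends TType {}
interface TFloating extends TNumber {}

// Two different TF datatypes that both read their data as Java floats.
final class TFloat32 implements FloatTensor, TFloating {
    private final float[] values;
    TFloat32(float... values) { this.values = values.clone(); }
    @Override public long size() { return values.length; }
    @Override public float getFloat(int index) { return values[index]; }
}

final class TFloat16 implements FloatTensor, TFloating {
    // Stored as Java floats for simplicity; a real float16 tensor would hold 16-bit values.
    private final float[] values;
    TFloat16(float... values) { this.values = values.clone(); }
    @Override public long size() { return values.length; }
    @Override public float getFloat(int index) { return values[index]; }
}

final class HierarchyDemo {
    // Accepts any tensor whose data reads as Java floats AND whose family is floating-point.
    static <T extends FloatTensor & TFloating> float sum(T tensor) {
        float total = 0f;
        for (int i = 0; i < tensor.size(); i++) {
            total += tensor.getFloat(i);
        }
        return total;
    }

    public static void main(String[] args) {
        System.out.println(sum(new TFloat32(1f, 2f)));      // prints 3.0
        System.out.println(sum(new TFloat16(0.5f, 0.25f))); // prints 0.75
    }
}
```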

}
return dims;
}
TF_Tensor nativeHandle();


This is a public method now, right? That's kinda unpleasant.


I totally agree. But I haven't found a way to avoid it (yet).
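For context on why the accessor ends up public: every abstract method declared in a Java interface is implicitly public, so once `Tensor` becomes an interface, `nativeHandle()` cannot be package-private there. One common workaround, sketched below under the assumption that all tensor implementations may extend a shared abstract base class, is to keep the handle on a package-private base class and reach it through a package-private helper. `NativeHandle`, `AbstractTensor` and `TensorInternals` are hypothetical names, and `NativeHandle` merely stands in for `TF_Tensor`.

```java
// Hypothetical stand-in for the native TF_Tensor pointer.
final class NativeHandle {
    final long address;
    NativeHandle(long address) { this.address = address; }
}

// Public-facing API: no handle accessor, since abstract interface methods are always public.
interface Tensor {
    long size();
}

// Package-private base class that carries the handle.
abstract class AbstractTensor implements Tensor {
    private final NativeHandle handle;

    AbstractTensor(NativeHandle handle) { this.handle = handle; }

    // Package-private: reachable from the runtime's own package, not from user code elsewhere.
    NativeHandle nativeHandle() { return handle; }
}

final class TFloat32 extends AbstractTensor {
    private final long size;

    TFloat32(NativeHandle handle, long size) {
        super(handle);
        this.size = size;
    }

    @Override
    public long size() { return size; }
}

// Package-private helper used internally (e.g. when feeding tensors to a session).
final class TensorInternals {
    private TensorInternals() {}

    static NativeHandle handleOf(Tensor tensor) {
        if (!(tensor instanceof AbstractTensor)) {
            throw new IllegalArgumentException("Unsupported tensor implementation");
        }
        return ((AbstractTensor) tensor).nativeHandle();
    }

    public static void main(String[] args) {
        Tensor t = new TFloat32(new NativeHandle(42L), 4);
        System.out.println(TensorInternals.handleOf(t).address); // prints 42
    }
}
```

The trade-off is that the runtime can only accept `Tensor` implementations that extend the shared base class.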

Ok, I now have a third implementation of pretty much the same thing: https://github.com/karllessard/tensorflow-java/tree/tensor-as-ndarrays-3

The tensor and type family relationships remain pretty much as in my second draft; the main difference is that instead of having … This allows us to keep, for example, … What is nice about it, though, is that I ended up extending the …

Ops tf = Ops.create();
TFloat32 tensor = TFloat32.scalarOf(1.0f); // very simple tensor just for the sake of this example
TFloat32 result = tf.math.add(tensor, tf.constant(2.0f)).asTensor();
assertEquals(3.0f, result.getFloat(), 0.0f);

I still have to play around with generic types to make sure everything makes sense and is inferred properly, but I'll keep it like this for now and wait for your feedback or for the next community call to discuss it further.
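The snippet above passes a tensor directly where an operand is expected and calls `asTensor()` on the op result. The toy sketch below illustrates that idea in plain Java, assuming eager execution; the `Operand` interface, `EagerMath.add` and `EagerMath.constant` here are hypothetical stand-ins and not the tensorflow-java `Ops` API.

```java
// Hypothetical stand-in: anything that can be resolved to a concrete tensor.
interface Operand<T> {
    T asTensor();
}

// In this sketch a tensor is an Operand of itself, so it can be passed straight to ops.
final class TFloat32 implements Operand<TFloat32> {
    private final float value;

    private TFloat32(float value) { this.value = value; }

    static TFloat32 scalarOf(float value) { return new TFloat32(value); }

    float getFloat() { return value; }

    @Override
    public TFloat32 asTensor() { return this; }
}

final class EagerMath {
    // Eager "add": resolves both operands to tensors and computes the result immediately.
    static Operand<TFloat32> add(Operand<TFloat32> x, Operand<TFloat32> y) {
        return TFloat32.scalarOf(x.asTensor().getFloat() + y.asTensor().getFloat());
    }

    static Operand<TFloat32> constant(float value) {
        return TFloat32.scalarOf(value);
    }

    public static void main(String[] args) {
        TFloat32 tensor = TFloat32.scalarOf(1.0f);
        TFloat32 result = add(tensor, constant(2.0f)).asTensor();
        System.out.println(result.getFloat()); // prints 3.0
    }
}
```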

Hi @karllessard, I've reviewed this PR and the second one. A few points give me ambivalent feelings.

I agree that the proposed changes solve the problem of merging the two hierarchies (but I'm not sure about the benefits). What do you think?

One thing I'd like to note in passing about the name

Hey @zaleslaw, thanks for reviewing; my comments are below...

I got rid of these two issues in the latest version. Did I leave something out, or did you look at a previous version?

The "problem" I find is that the type is carried everywhere as a generic parameter by

Again, the newest version doesn't have

I totally agree. I realized, though, that my day job requires more of my time than expected, and I might need some help to finalize the whole proposal and adjust the tests accordingly. Please let me know if you think you can help me out with this. Thanks,

I'm putting my work on this PR on hold, as I'm focusing more on the saved model export now. I'm also starting to have some doubts about whether the few benefits of these changes are worth it; I'll try to figure that out with a clearer mind.

It's a good solution; we could return to it later. It will not be lost, of course.

On Tue, Aug 25, 2020, 4:43 Karl Lessard <[email protected]> wrote:

… I’m putting on hold my work on this PR as I’m focusing more on the saved model export now. I’m also starting to have some doubts if the few benefits of these changes are worth it, I’ll try to figure that out with a clearer mind.


It is true that if we keep that latest design, it is pretty much backward compatible with the current implementation and can therefore easily come as an improvement later. If we decide to shuffle the types around a bit more, though, we'd better do it before our first release. Let's brainstorm about it again in the near future.

I have checked in a

@JimClarke5, since your PR only required

Closing in favor of #139.

Hi everyone,

I've been playing around lately with different components of the core API to see if it could be possible that both the

NdArrayandTensorinterfaces be implemented by the same object. This way, we can avoid this transition from one to another.For example, right now when we get hold on a tensor, we basically just wrap up the native pointer and expose a few methods to retrieve its shape and datatype. If we need to access its data directly from the JVM, we map its memory to an

NdArrayin a second step and keep a reference to that array for future access. This is done by invokingdata()on a tensor instance. e.g.This actual PR change the behaviour so that the

TTypeitself (TFloat32here) will implement both theNdArrayand theTensorinterface (Tensoris actually a class so needed to be converted to an interface). The same code above would look like this:It is also important to noticed that I made the changes totally backward compatible with the actual implementation. For example these lines will still work since

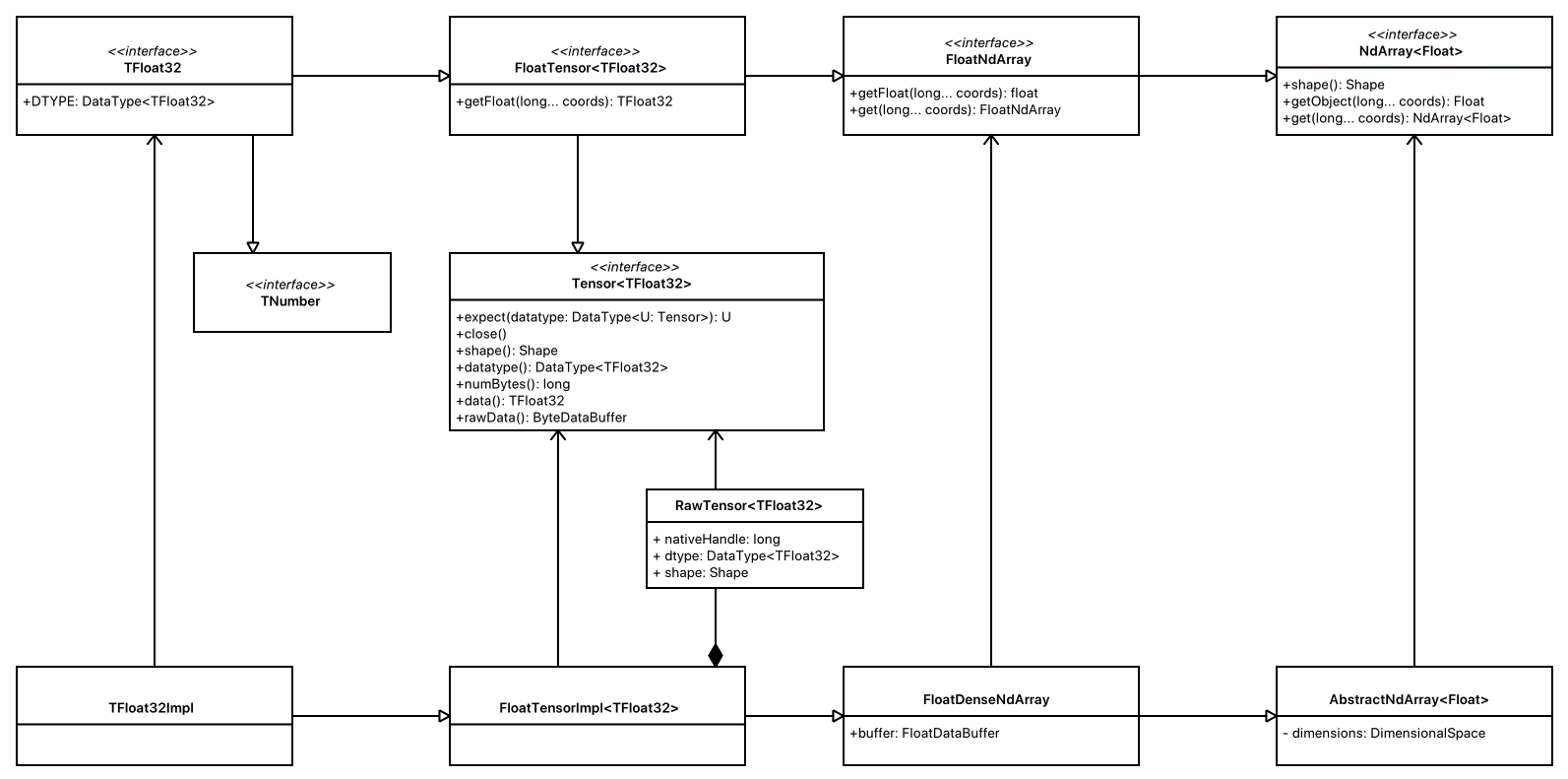

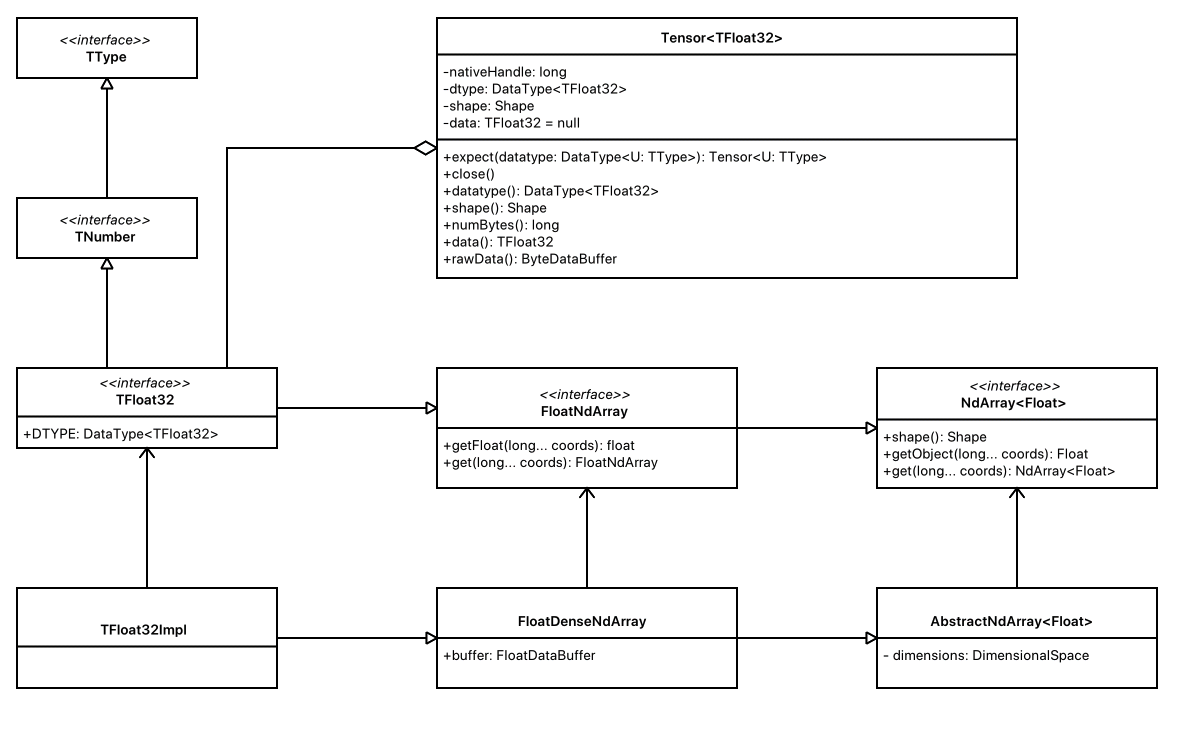

TFloat32now implementsTensor<TFloat32>:Please take a look and let me know if you think I should cleanup this work and make it a real PR or not. To help understanding better the proposed changes, here are two diagrams comparing both implementations, taking

TFloat32as example:Previous:

New:
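The core idea of the proposal, a single object serving as both the tensor and its `NdArray` view, can be sketched with minimal stand-in interfaces. This sketch assumes a simplified `FloatNdArray` and `Tensor<T>` (not the real tensorflow-java interfaces), backs the data with a Java array instead of native memory, and uses a hypothetical `tensorOf` factory. Keeping `data()` and having it return `this` is one way the backward compatibility described above could be preserved.

```java
// Hypothetical, simplified stand-in for the NdArray side.
interface FloatNdArray {
    float getFloat(long... indices);
    FloatNdArray setFloat(float value, long... indices);
}

// Hypothetical, simplified stand-in for Tensor once it becomes an interface.
interface Tensor<T> extends AutoCloseable {
    long[] shape();

    // Kept for backward compatibility: the data view is now the tensor itself.
    T data();

    @Override
    void close(); // narrowed to throw no checked exception
}

final class TFloat32 implements FloatNdArray, Tensor<TFloat32> {
    private final long[] shape;
    private final float[] buffer; // stands in for the tensor's native memory

    private TFloat32(long... shape) {
        this.shape = shape.clone();
        long n = 1;
        for (long d : shape) {
            n *= d;
        }
        this.buffer = new float[Math.toIntExact(n)];
    }

    static TFloat32 tensorOf(long... shape) { return new TFloat32(shape); }

    @Override public long[] shape() { return shape.clone(); }
    @Override public TFloat32 data() { return this; }
    @Override public void close() { /* a real tensor would release native memory here */ }

    // Row-major offset; assumes exactly one index per dimension.
    private int offset(long... indices) {
        long off = 0;
        for (int i = 0; i < shape.length; i++) {
            off = off * shape[i] + indices[i];
        }
        return Math.toIntExact(off);
    }

    @Override public float getFloat(long... indices) { return buffer[offset(indices)]; }

    @Override public TFloat32 setFloat(float value, long... indices) {
        buffer[offset(indices)] = value;
        return this;
    }
}

final class DualDemo {
    public static void main(String[] args) {
        try (TFloat32 t = TFloat32.tensorOf(2, 3)) {
            t.setFloat(5f, 1, 2);                    // new style: use the tensor directly as an NdArray
            float viaData = t.data().getFloat(1, 2); // old style: data() still works
            System.out.println(t.getFloat(1, 2) + " " + viaData); // prints 5.0 5.0
        }
    }
}
```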