Silence the log messages during a test run #1041

We use slow_retrieval_server.py only in our tests, so logging the requests made to the server and printing them during a test run gives us no useful information. Signed-off-by: Martin Vrachev <[email protected]>

Currently, when we run the unit tests we get a lot of messages with the content "code 404, message File not found", even on a successful run. This happens because tuf always tries to download a newer version of root.json: once it has the freshest version, the attempt to download the next one fails with this error message, as expected. Signed-off-by: Martin Vrachev <[email protected]>

This message doesn't give us useful information during the test run, and it's repeated more than 10 times when running the tests with Python 3.7. Signed-off-by: Martin Vrachev <[email protected]>
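For readers unfamiliar with where these lines come from: in Python 3's `http.server`, both the per-request lines and the "code 404, message File not found" lines are written through the handler's `log_message()` method. The sketch below shows one way to silence them by overriding that method. It is a minimal illustration, not necessarily what this PR does: the names `QuietHandler` and `fetch_missing_file` are hypothetical, and it assumes a Python 3 server built on `SimpleHTTPRequestHandler`.

```python
import http.client
import http.server
import io
import sys
import threading


class QuietHandler(http.server.SimpleHTTPRequestHandler):
    """Hypothetical handler: serves files but writes no log lines."""

    def log_message(self, format, *args):
        # log_request() and log_error() both funnel into log_message(),
        # so a no-op here drops the request lines and the
        # "code 404, message File not found" lines alike.
        pass


def fetch_missing_file(handler_class):
    """Request a nonexistent file; return (status, text the server wrote to stderr)."""
    captured = io.StringIO()
    original_stderr = sys.stderr
    sys.stderr = captured  # the default log_message() writes to sys.stderr
    server = http.server.HTTPServer(("127.0.0.1", 0), handler_class)
    thread = threading.Thread(target=server.serve_forever)
    thread.start()
    try:
        conn = http.client.HTTPConnection("127.0.0.1", server.server_port, timeout=5)
        conn.request("GET", "/no-such-file.json")
        response = conn.getresponse()
        response.read()
        status = response.status
        conn.close()
    finally:
        server.shutdown()
        thread.join()
        server.server_close()
        sys.stderr = original_stderr
    return status, captured.getvalue()


if __name__ == "__main__":
    print(fetch_missing_file(QuietHandler))
    print(fetch_missing_file(http.server.SimpleHTTPRequestHandler))
```

The trade-off is that a no-op `log_message()` hides every log line from that server, including ones that might help when a test fails for a real reason.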

I tested those changes to see if other errors appear. I checked out commit 3851f38, just before my fix

Thanks for the patch, @MVrachev! Your solution works nicely but it feels a bit hackish to blacklist a certain undesired output in A way to fix this in a more scalable fashion would be to configure the tests so that console output is only displayed if tests fail. I remember doing this for in-toto in in-toto/in-toto#240 (it might also be worth taking a look at in-toto/in-toto#183). Let me know if this helps or if you have any questions.

Yes, I could do that, but even if you see the logs when the tests fail, don't you think we should filter the

I think you can, but I don't think it's necessary, assuming that the messages are only displayed on failure and we expect our tests not to fail. :)

I have tried a couple of things and here are my thoughts. First, I saw we are using

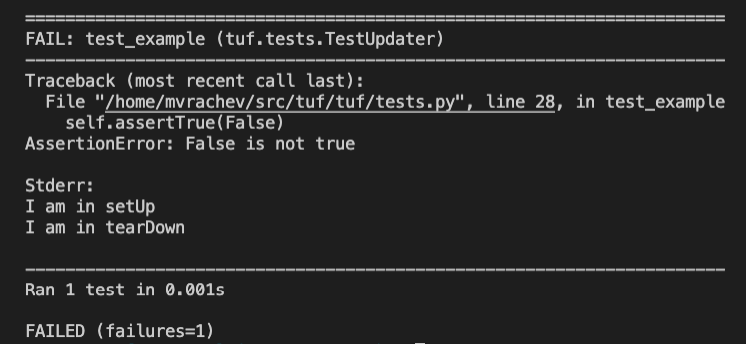

then if I redirect

After researching what other people had discovered for that problem, it seems like this is the right answer:

Second, I thought I would look again at what you, @lukas, are suggesting.

and the result was:

Then, I realized we would need to start all server_process from the

WRT our earlier chat: the logging does work, but if you use a test runner, it gets to decide what to output. In the example it decides to output only the failing test (and the class setup was obviously run much earlier). If you run the same test without a test runner (so no logging is initialized), you will see the output

@MVrachev as you have proposed an alternative solution, should we close this PR?

Yes, I think we can close that one.

Closing due to being superseded by #1104

Fixes issue #: #1039

Description of the changes being introduced by the pull request:

- Filter the `GET` request messages from `tests/slow_retrieval.py`:
- Filter the `code 404, message File not found` error messages:
- Remove `We do not have a console handler` warnings.

Please verify and check that the pull request fulfills the following requirements: