

Passwordless data mode #4049


Merged: billy-the-fish merged 7 commits into `latest` from `298-docs-rfc-update-docs-for-passwordless-integration-with-data-mode` on Apr 25, 2025.


Commits

- c5ff939 added passwordless data mode + cleanup (atovpeko)
- ab4cbcc Merge branch 'latest' into 298-docs-rfc-update-docs-for-passwordless-… (atovpeko)
- 221f12e Merge branch 'latest' into 298-docs-rfc-update-docs-for-passwordless-… (billy-the-fish)
- affed44 Merge branch 'latest' into 298-docs-rfc-update-docs-for-passwordless-… (billy-the-fish)
- b11fd00 Update getting-started/try-key-features-timescale-products.md (atovpeko)
- d115c78 Update getting-started/try-key-features-timescale-products.md (atovpeko)
- 0409eac Update _partials/_cloud-connect-service.md (atovpeko)


|

|

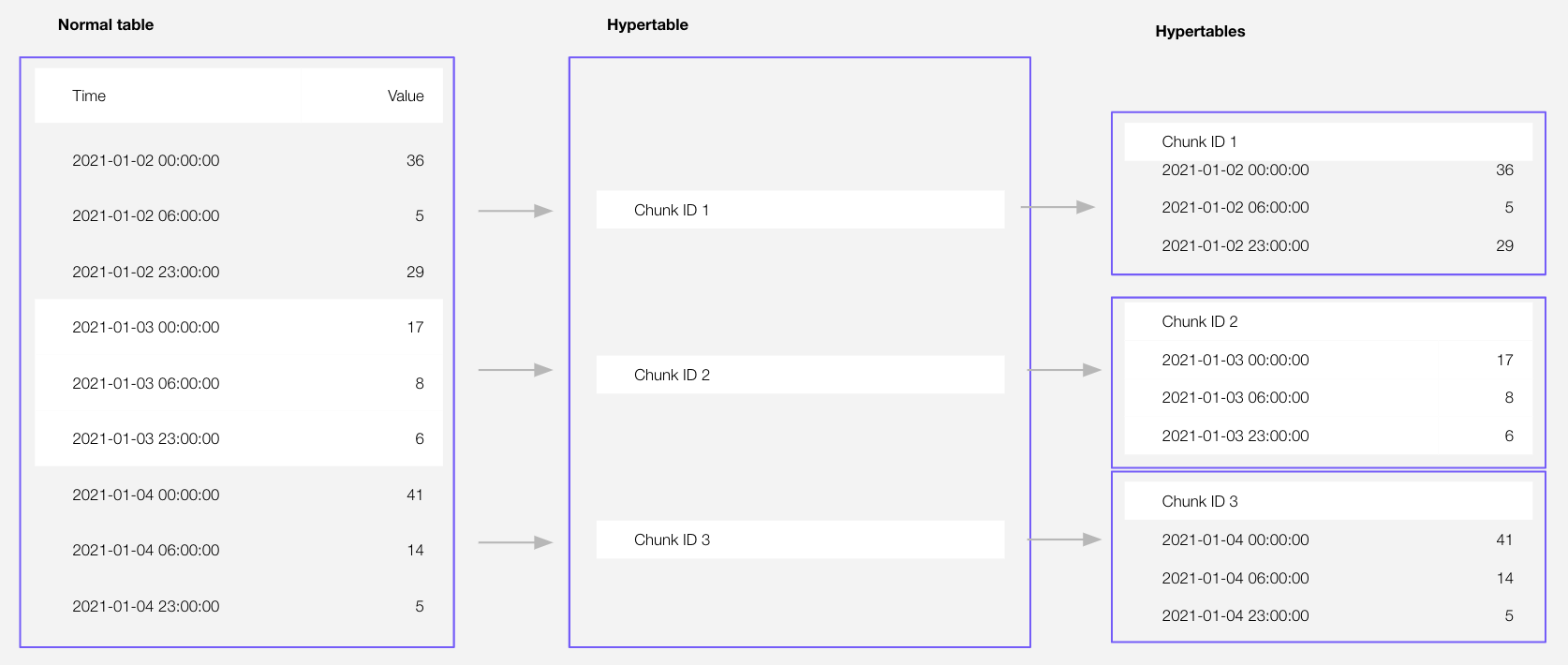

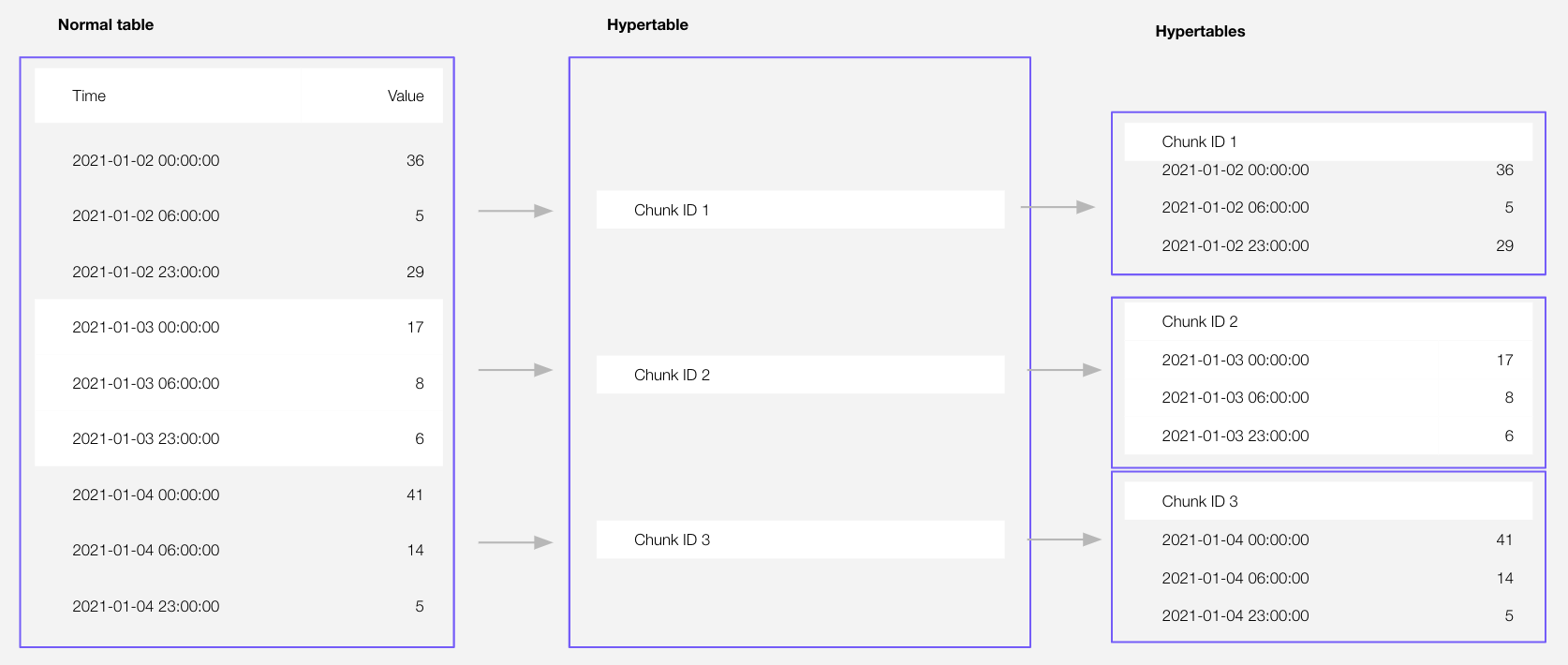

@@ -36,7 +36,7 @@ is made up of child tables called $CHUNKs. Each $CHUNK is assigned a range of ti | |||||

| contains data from that range. When you run a query, $CLOUD_LONG identifies the correct $CHUNK and runs the query on it, instead of going through the entire table. You can also tune $HYPERTABLEs to increase performance | ||||||

| even more. | ||||||

|

|

||||||

|  | ||||||

|  | ||||||

|

|

||||||

| $HYPERTABLE_CAPs exist alongside regular $PG tables. | ||||||

| You use regular $PG tables for relational data, and interact with $HYPERTABLEs | ||||||

|

|
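The context above says a query is routed to the matching chunk instead of scanning the whole table. A minimal way to observe this, assuming the `crypto_ticks` hypertable created in this tutorial with a `time` column and a `price` column (the `price` column name is an assumption here), is to list the chunks and inspect the plan of a time-filtered query:

```sql
-- Assumes the crypto_ticks hypertable from this tutorial, partitioned on "time".
-- List the chunks (child tables) that currently back the hypertable.
SELECT show_chunks('crypto_ticks');

-- Show which chunks a time-filtered query touches. With a constant timestamp,
-- chunks whose time range cannot match the WHERE clause are left out of the plan.
EXPLAIN
SELECT symbol, max(price) AS max_price
FROM crypto_ticks
WHERE "time" > TIMESTAMPTZ '2025-01-01 00:00:00+00'
GROUP BY symbol;
```

The `EXPLAIN` output should list only the chunks whose time range overlaps the filter; the cutoff date is illustrative.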

````diff
@@ -106,7 +106,7 @@ relational and time-series data from external files.
 SELECT create_hypertable('crypto_ticks', by_range('time'));
 ```
 To more fully understand how $HYPERTABLEs work, and how to optimize them for performance by
-tuning $CHUNK intervals and enabling $CHUNK_SKIPPING, see [the $HYPERTABLEs documentation][hypertables-section].
+tuning $CHUNK intervals and enabling chunk skipping, see [the $HYPERTABLEs documentation][hypertables-section].
 
 - For the relational data:
````
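The changed sentence points readers to chunk-interval tuning. As a hedged sketch, assuming the same `crypto_ticks` hypertable, the current interval can be read from `timescaledb_information.dimensions` and changed with `set_chunk_time_interval`; the `1 day` value is only illustrative, not a recommendation from this PR:

```sql
-- Assumes the crypto_ticks hypertable from this tutorial, partitioned on "time".
-- Show the chunk interval currently used for the time dimension.
SELECT hypertable_name, column_name, time_interval
FROM timescaledb_information.dimensions
WHERE hypertable_name = 'crypto_ticks';

-- Use 1-day chunks from now on (illustrative value). Only chunks created after
-- this call use the new interval; existing chunks keep their ranges.
SELECT set_chunk_time_interval('crypto_ticks', INTERVAL '1 day');
```

A smaller interval produces more, smaller chunks; the trade-offs are covered in the linked hypertables documentation.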

````diff
@@ -133,7 +133,7 @@ relational and time-series data from external files.
 </Tabs>
 
 To more fully understand how $HYPERTABLEs work, and how to optimize them for performance by
-tuning $CHUNK intervals and enabling $CHUNK_SKIPPING, see [the $HYPERTABLEs documentation][hypertables-section].
+tuning $CHUNK intervals and enabling chunk skipping, see [the $HYPERTABLEs documentation][hypertables-section].
 
 1. **Have a quick look at your data**
````

````diff
@@ -172,18 +172,20 @@ $CONSOLE. You can also do this using psql.
 
 <Tabs label="Upload data to ">
 
-<Tab title="SQL Editor">
+<Tab title="Data mode">
 
 <Procedure>
 
-1. **In [$CONSOLE][portal-ops-mode], select the $SERVICE_SHORT you uploaded data to, then click `SQL Editor`**
+1. **Connect to your $SERVICE_SHORT**
+
+In [$CONSOLE][portal-data-mode], select your $SERVICE_SHORT in the connection drop-down in the top right.
 
 1. **Create a $CAGG**
 
 For a $CAGG, data grouped using a $TIME_BUCKET is stored in a
 $PG `MATERIALIZED VIEW` in a $HYPERTABLE. `timescaledb.continuous` ensures that this data
 is always up to date.
-In your SQL editor, use the following code to create a $CAGG on the real-time data in
+In data mode, use the following code to create a $CAGG on the real-time data in
 the `crypto_ticks` table:
 
 ```sql
````
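The paragraph describes a continuous aggregate as time-bucketed data kept in a materialized view. A minimal sketch of that shape, using a hypothetical view name `crypto_ticks_hourly_sketch` (not the tutorial's own view, whose SQL is cut off in this diff) and assuming `crypto_ticks` has `time`, `symbol`, and `price` columns:

```sql
-- Hypothetical view name; assumes crypto_ticks has "time", symbol and price columns.
-- time_bucket() groups rows into 1-hour buckets; WITH (timescaledb.continuous)
-- makes this a continuous aggregate whose results are materialized and refreshable.
CREATE MATERIALIZED VIEW crypto_ticks_hourly_sketch
WITH (timescaledb.continuous) AS
SELECT
    time_bucket(INTERVAL '1 hour', "time") AS bucket,
    symbol,
    first(price, "time") AS open,
    max(price)           AS high,
    min(price)           AS low,
    last(price, "time")  AS close
FROM crypto_ticks
GROUP BY bucket, symbol;

-- Refresh the whole time range on demand (NULL bounds mean unbounded).
-- This must run outside an explicit transaction block.
CALL refresh_continuous_aggregate('crypto_ticks_hourly_sketch', NULL, NULL);
```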

@@ -228,10 +230,10 @@ $CONSOLE. You can also do this using psql. | |||||

|

|

||||||

| <Procedure> | ||||||

|

|

||||||

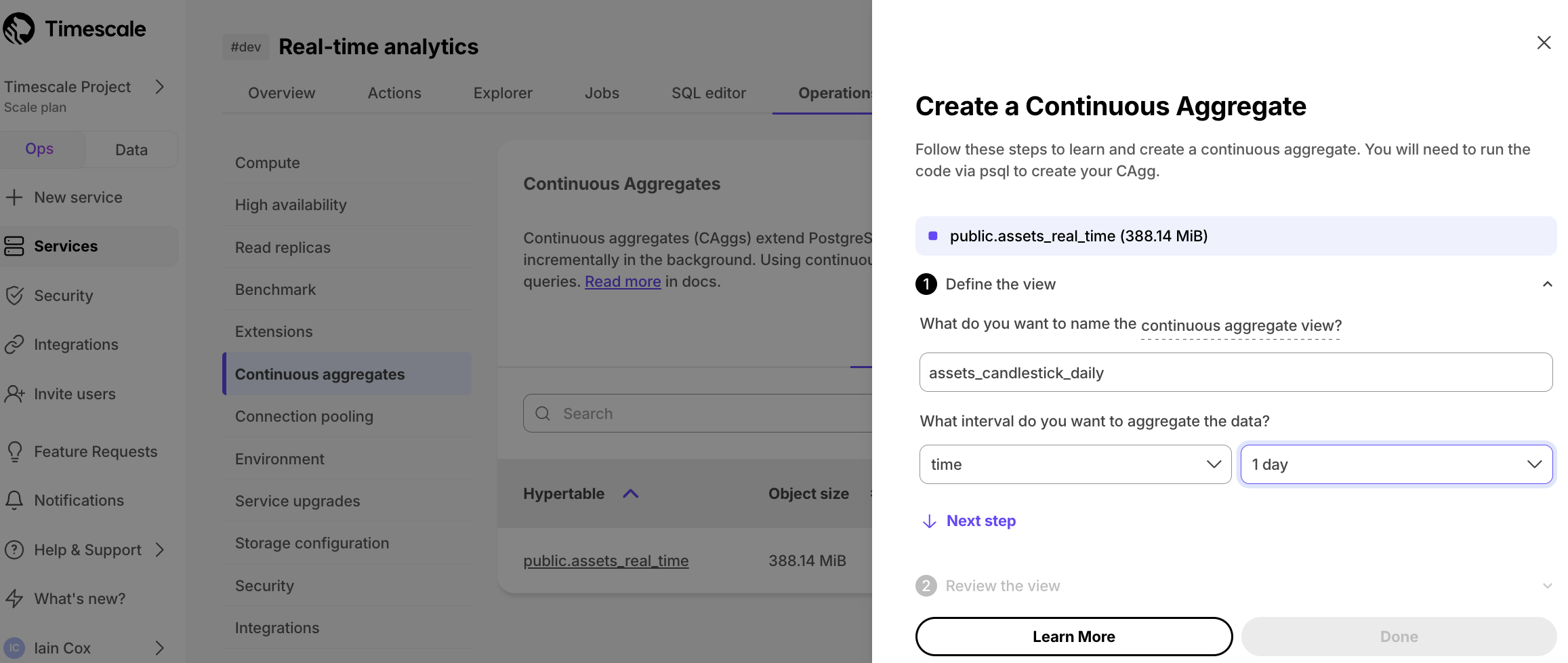

| 1. **In [$CONSOLE][portal-ops-mode], select the $SERVICE_SHORT you uploaded data to**. | ||||||

| 1. **Click `Operations` > `Continuous aggregates`, select `crypto_ticks`, then click `Create a Continuous Aggregate`**. | ||||||

| 1. **In [$CONSOLE][portal-ops-mode], select the $SERVICE_SHORT you uploaded data to** | ||||||

| 1. **Click `Operations` > `Continuous aggregates`, select `crypto_ticks`, then click `Create a Continuous Aggregate`** | ||||||

|  | ||||||

| 1. **Create a view called `assets_candlestick_daily` on the `time` column with an interval of `1 day`, then click `Next step`**. | ||||||

| 1. **Create a view called `assets_candlestick_daily` on the `time` column with an interval of `1 day`, then click `Next step`** | ||||||

| 1. **Update the view SQL with the following functions, then click `Run`** | ||||||

| ```sql | ||||||

| CREATE MATERIALIZED VIEW assets_candlestick_daily | ||||||

|

|
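After the console steps, the view can be checked from SQL and given a refresh schedule. A hedged sketch, assuming `assets_candlestick_daily` was created as described; `if_not_exists => true` skips adding a second refresh policy if one is already attached, and the offsets and schedule below are illustrative values, not settings taken from this PR:

```sql
-- Confirm the view is registered as a continuous aggregate and find
-- the internal hypertable that stores its materialized rows.
SELECT view_name, materialized_only, materialization_hypertable_name
FROM timescaledb_information.continuous_aggregates
WHERE view_name = 'assets_candlestick_daily';

-- Refresh the last 3 days (up to 1 hour ago) every hour. The window
-- spans more than two 1-day buckets, which continuous aggregate policies require.
SELECT add_continuous_aggregate_policy('assets_candlestick_daily',
    start_offset      => INTERVAL '3 days',
    end_offset        => INTERVAL '1 hour',
    schedule_interval => INTERVAL '1 hour',
    if_not_exists     => true);
```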

````diff
@@ -357,7 +359,7 @@ To set up data tiering:
 
 1. **Set the time interval when data is tiered**
 
-In $CONSOLE, click `SQL Editor`, then enable data tiering on a $HYPERTABLE with the following query:
+In $CONSOLE, click `Data` to switch to the data mode, then enable data tiering on a $HYPERTABLE with the following query:
 
 ```sql
 SELECT add_tiering_policy('assets_candlestick_daily', INTERVAL '3 weeks');
 ```
````
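The tiering policy moves chunks once their data is older than the given interval. As a sketch, assuming `show_chunks` accepts the continuous aggregate here (recent TimescaleDB versions accept hypertables and continuous aggregates), you can preview which chunks are already past the `3 weeks` threshold. If the interval needs to change, the policy can be removed and added again; the `4 weeks` value is illustrative:

```sql
-- Chunks of assets_candlestick_daily whose data is entirely older than 3 weeks:
-- these are the chunks the tiering policy is eligible to move.
SELECT show_chunks('assets_candlestick_daily', older_than => INTERVAL '3 weeks');

-- To change the tiering interval, remove the existing policy and add a new one.
SELECT remove_tiering_policy('assets_candlestick_daily');
SELECT add_tiering_policy('assets_candlestick_daily', INTERVAL '4 weeks');
```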

````diff
@@ -386,9 +388,9 @@ To set up data tiering:
 ## Reduce the risk of downtime and data loss
 
 By default, all $SERVICE_LONGs have rapid recovery enabled. However, if your app has very low tolerance
-for downtime, $CLOUD_LONG offers $HA_REPLICAs. $HA_REPLICA_SHORTs are exact, up-to-date copies
+for downtime, $CLOUD_LONG offers $HA_REPLICAs. HA replicas are exact, up-to-date copies
 of your database hosted in multiple AWS availability zones (AZ) within the same region as your primary node.
-$HA_REPLICA_SHORTa automatically take over operations if the original primary data node becomes unavailable.
+HA replicas automatically take over operations if the original primary data node becomes unavailable.
 The primary node streams its write-ahead log (WAL) to the replicas to minimize the chances of
 data loss during failover.
````
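The last paragraph says the primary streams its WAL to the replicas. Standard PostgreSQL views expose this stream. A hedged sketch, run while connected to the primary; what `pg_stat_replication` shows depends on your role's privileges, and on a managed service some columns may be empty or no rows may be visible:

```sql
-- One row per standby currently receiving WAL from this server.
-- Comparing sent_lsn with replay_lsn shows how far each replica is behind.
SELECT application_name, state, sync_state, sent_lsn, replay_lsn, replay_lag
FROM pg_stat_replication;

-- Returns false on the primary and true on a replica that is replaying WAL.
SELECT pg_is_in_recovery();
```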
